How SEOs Can Use Python to Automate Lighthouse Reports

Google’s web page scanner Lighthouse has become one of the most important tools for evaluating a web page. At a high level, it scores your page’s performance, SEO, accessibility, and best practices. At a deeper level, it provides more granular metrics for each of those categories along with recommendations. Most SEOs are very familiar with running Lighthouse within Google Chrome’s DevTools. Using Lighthouse within the browser is easy and handy, but it’s still manual and difficult to scale. What if you want to run Lighthouse on multiple pages on a daily basis? Python, as usual, comes to the rescue. This tutorial offers the bare bones needed to set up automated Lighthouse scanning, and from there it should be straightforward to extend the script for your own purposes.

Key Points

- Google’s web page scanner Lighthouse measures page performance, SEO, accessibility, and best practices

- Python can be used to automate the use of Lighthouse

- Requirements and Assumptions include Python 3, access to Linux or Google Colab, and Lighthouse 6.4.1

- Install the Lighthouse CLI and import the required modules

- Create an empty dataframe and support variables

- Loop through the list of URLs and run Lighthouse

- Process the JSON report, open the file, and grab the high-level ratings

- Add ratings to the dataframe, export to CSV, and automate the scan with crontab

Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab. I don’t recommend Google Colab for this as the performance is not great.

- This script has been tested on Lighthouse 6.4.1, which was current as of this tutorial’s publishing date.

Starting the Script

In the first step, we install the Lighthouse CLI, which is easy to install using the Node package manager (npm). Be sure to read the documentation and consider the options available. An alternative to running Lighthouse locally is to use the PageSpeed Insights API. If you are running from Google Colab, put an exclamation point at the beginning of the command; otherwise, enter the following in your terminal:

npm install -g lighthouse
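Before going further, it can help to confirm from Python that the CLI is actually reachable on your PATH. The sketch below uses the standard library’s shutil.which; the helper name find_cli is an illustrative choice of mine, not part of Lighthouse.

```python
import shutil


def find_cli(command="lighthouse"):
    """Return the full path to a command on PATH, or None if it is not found."""
    return shutil.which(command)


# Check once at the top of the script so a missing install fails early
lighthouse_path = find_cli()
if lighthouse_path is None:
    print("lighthouse not found; try: npm install -g lighthouse")
else:
    print("lighthouse found at: " + lighthouse_path)
```

If this prints the "not found" message right after a successful npm install, the npm global bin directory is probably missing from your PATH.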

Next, we import our required modules.

- json: to process the JSON output from Lighthouse

- os: to execute the local Lighthouse CLI

- pandas: for storing the results and exporting to CSV

- datetime: used in naming the JSON file

- time: to pause the script while Lighthouse runs

import json
import os
import time
import pandas as pd
from datetime import datetime

Create Dataframe

We’re going to store each URL’s high-level ratings into a dataframe. Let’s set up that empty dataframe to use later. Note, there are dozens of metrics you can pull from these reports. Open the JSON output file once you run Lighthouse to see the possibilities and then add to the dataframe column below and make the necessary edits when we grab data from the JSON file.

df = pd.DataFrame([], columns=['URL','SEO','Accessibility','Performance','Best Practices'])

Create support variables

Now we set up a few simple variables we’ll use throughout. We’ll use name and getdate for naming the output file, and the urls list is what we’re going to loop through and run Lighthouse on. You have two options for supplying the list of URLs to scan: either hard-code them in a list, as below, or import them from a CSV, often a Screaming Frog or similar crawl file.

name = "RocketClicks"

getdate = datetime.now().strftime("%m-%d-%y")

urls = ["https://www.rocketclicks.com","https://www.rocketclicks.com/seo/","https://www.rocketclicks.com/ppc/"]

Use this code below if you are importing from a crawl file. Change YOUR_CRAWL_CSV to the path/name of your crawl CSV file. Then we convert the dataframe to a Python list.

df_urls = pd.read_csv("YOUR_CRAWL_CSV.csv")[["Address"]]

# Select the column first so we get a flat list of strings, not a list of one-item lists
urls = df_urls["Address"].tolist()
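Whichever way the list is built, a crawl export may contain duplicates, blank cells, or non-web addresses. As a minimal sketch, assuming you only want unique http(s) URLs, a small filter can tidy the list before the loop. The function name clean_urls and the sample inputs are hypothetical.

```python
from urllib.parse import urlparse


def clean_urls(raw_urls):
    """Keep http(s) URLs only, strip whitespace, and drop duplicates in order."""
    seen = set()
    cleaned = []
    for raw in raw_urls:
        if not isinstance(raw, str):
            continue  # e.g. NaN from an empty CSV cell
        url = raw.strip()
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            continue  # skip mailto:, tel:, relative paths, and so on
        if url not in seen:
            seen.add(url)
            cleaned.append(url)
    return cleaned


# Illustrative input only; in practice pass the list built above
urls = clean_urls([
    "https://www.rocketclicks.com",
    " https://www.rocketclicks.com/seo/ ",
    "https://www.rocketclicks.com",
    "mailto:hello@example.com",
    float("nan"),
])
print(urls)
```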

Run Lighthouse

Now it’s time to loop through that list of URLs and run Lighthouse! We’ll use Python’s os module to execute Lighthouse via the CLI. Be sure to check the docs for details on all the options available. Also, change the output path to match your local environment.

for url in urls:

    stream = os.popen('lighthouse --quiet --no-update-notifier --no-enable-error-reporting --output=json --output-path=YOUR_LOCAL_PATH'+name+'_'+getdate+'.report.json --chrome-flags="--headless" ' + url)
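Note that the output path above depends only on name and getdate, so every URL in a run writes to the same file. That works here because each report is read before the next one is generated, but if you would rather keep one report per page, a sketch like the following builds a distinct filename from the URL. The helper report_path and its slug format are my own assumptions, and the date in the example call is just a sample value.

```python
import re
from urllib.parse import urlparse


def report_path(url, name, getdate, directory="YOUR_LOCAL_PATH"):
    """Build a JSON report path that is unique per URL.

    `directory` is concatenated as-is, as in the tutorial, so it should
    end with a path separator.
    """
    parsed = urlparse(url)
    # Collapse every run of non-alphanumeric characters in host + path into "-"
    slug = re.sub(r"[^A-Za-z0-9]+", "-", parsed.netloc + parsed.path).strip("-")
    return directory + name + "_" + slug + "_" + getdate + ".report.json"


print(report_path("https://www.rocketclicks.com/seo/", "RocketClicks", "01-15-21"))
```

The returned string can be used both in the --output-path flag and later when opening the file.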

Because Lighthouse runs as a separate process outside the script, we need to pause the script and wait for it to finish. I’ve found 2 minutes (120 seconds) works for most pages. Tweak as needed if you get an error that the JSON output file doesn’t exist. The alternative to a fixed pause is a loop that checks for the output file and keeps waiting until it exists before the script continues. Once the pause is over and Lighthouse is likely finished, we build the full path to the file so we can process it in the next snippet. Be sure to change “YOUR_LOCAL_PATH”.

    time.sleep(120)

    print("Report complete for: " + url)

    json_filename = 'YOUR_LOCAL_PATH' + name + '_' + getdate + '.report.json'
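The file-checking alternative to a fixed pause could look roughly like this. It is a sketch rather than a drop-in replacement: the function name wait_for_report, the timeout, and the polling interval are my own assumptions, and I have added an upper time limit so the script cannot hang forever if Lighthouse fails.

```python
import json
import os
import time


def wait_for_report(path, timeout=300, interval=5):
    """Poll until `path` exists and parses as JSON, then return the parsed report.

    Raises TimeoutError if no complete report appears within `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        if os.path.exists(path):
            try:
                with open(path) as handle:
                    return json.load(handle)
            except json.JSONDecodeError:
                pass  # file may still be mid-write; check again next round
        if time.monotonic() >= deadline:
            raise TimeoutError("No complete report at " + path)
        time.sleep(interval)
```

One caveat: if a report from an earlier URL or run already exists at that path, it is picked up immediately, so delete old reports first or give each URL its own filename.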

Process Report

Now let’s open that JSON report file and start processing it.

    with open(json_filename) as json_data:

        loaded_json = json.load(json_data)

As mentioned earlier, there is a ton of data in this report file, and I encourage you to go through it and pick out the things you want to store. For this tutorial, we’re just going to grab the high-level ratings for each of the 4 main categories. Lighthouse records these scores as floats from 0 to 1 (1 being a 100% score), so we multiply by 100 to get the familiar 0 to 100 scale.

    seo = str(round(loaded_json["categories"]["seo"]["score"] * 100))

    accessibility = str(round(loaded_json["categories"]["accessibility"]["score"] * 100))

    performance = str(round(loaded_json["categories"]["performance"]["score"] * 100))

    best_practices = str(round(loaded_json["categories"]["best-practices"]["score"] * 100))
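The four lookups above raise an error if a category is missing from the report or its score is null. As a hedged sketch, assuming that can happen when Lighthouse is unable to compute a category, the extraction can be wrapped in one helper that returns None instead of crashing. The function name category_scores and the sample report are hypothetical.

```python
def category_scores(report):
    """Return 0-100 integer scores per category, or None where no score exists."""
    keys = {
        "SEO": "seo",
        "Accessibility": "accessibility",
        "Performance": "performance",
        "Best Practices": "best-practices",
    }
    scores = {}
    for label, key in keys.items():
        raw = report.get("categories", {}).get(key, {}).get("score")
        # Lighthouse stores scores as 0-1 floats; scale to 0-100
        scores[label] = None if raw is None else round(raw * 100)
    return scores


# Made-up report fragment for illustration, not real Lighthouse output
sample = {"categories": {"seo": {"score": 0.92}, "performance": {"score": None}}}
print(category_scores(sample))
```

The returned dictionary uses the same labels as the dataframe columns, so adding the URL gives a complete row.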

Add Data to Dataframe

Now we take those high-level ratings, put them in a dictionary, and add that dictionary to the dataframe as a new row. Each URL gets its own row. After this, the loop moves on to the next URL if there is one.

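    row = {"URL":url,"SEO":seo,"Accessibility":accessibility,"Performance":performance,"Best Practices":best_practices}

    # DataFrame.append was removed in pandas 2.0, so use pd.concat; the key sorts scores as numbers rather than text
    df = pd.concat([df, pd.DataFrame([row])], ignore_index=True).sort_values(by='SEO', ascending=False, key=lambda s: s.astype(int))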

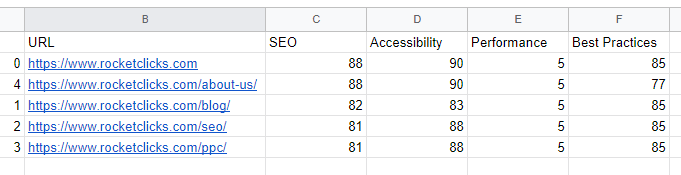

Finally, we have all our ratings in the dataframe. From here you can manipulate the data further, pipe it into another script, or store it in a database. In this tutorial, we’re simply going to export the data to a CSV file that can be opened in, say, Google Sheets or Excel. Be sure to replace “SAVE_PATH”.

df.to_csv('SAVE_PATH/lighthouse_' + name + '_' + getdate + '.csv')

print(df)
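An alternative to growing the dataframe inside the loop is to collect one dictionary per URL in a plain list and build the dataframe once at the end. The sketch below assumes the scores are kept as integers so that sorting is numeric; the rows are made-up placeholder values, not real Lighthouse results.

```python
import pandas as pd

columns = ["URL", "SEO", "Accessibility", "Performance", "Best Practices"]

# Placeholder rows standing in for what the loop would collect
rows = [
    {"URL": "https://www.rocketclicks.com", "SEO": 98, "Accessibility": 90, "Performance": 75, "Best Practices": 93},
    {"URL": "https://www.rocketclicks.com/seo/", "SEO": 100, "Accessibility": 88, "Performance": 70, "Best Practices": 93},
    {"URL": "https://www.rocketclicks.com/ppc/", "SEO": 9, "Accessibility": 85, "Performance": 64, "Best Practices": 86},
]

# Build once, then sort; integer scores sort as 100 > 98 > 9
df = pd.DataFrame(rows, columns=columns).sort_values(
    by="SEO", ascending=False, ignore_index=True
)

# With no path argument, to_csv returns the CSV text instead of writing a file
print(df.to_csv(index=False))
```

Inside the real loop, each iteration would call rows.append(...) with that URL’s scores.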

Output

Automating the Scan

If your Lighthouse script is working well when you run it manually, it’s time to automate it. Luckily, Linux already supplies us with a solution by using the crontab. The crontab stores entries of scripts where you can dictate when to execute them (like a scheduler). You have lots of flexibility with how you schedule your script (any time of day, day of the week, day of the month, etc.).

But first, if you are going this route, you should add a shebang to the very top of your script. It tells Linux to run the script using Python 3:

#!/usr/bin/python3

Now back to the crontab! To open it and add entries to the crontab, run this command:

crontab -e

It will likely open the crontab file in the vi editor. On a blank line at the bottom of the file, type the code below. This entry runs the script at midnight every Sunday. To change the time to something else, use this cronjob time editor. Customize with your path to the script.

0 0 * * SUN /usr/bin/python3 PATH_TO_SCRIPT/filename.py

If you want to create a log file to record each time the script ran, you can use this instead. Customize with your path to the script.

0 0 * * SUN /usr/bin/python3 PATH_TO_SCRIPT/filename.py >> PATH_TO_FILE/FILENAME.log 2>&1
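If the five schedule fields in these entries look cryptic, a small helper can label them. This is only an illustration of how a standard crontab line is laid out (minute, hour, day of month, month, day of week, then the command); the function name describe_cron_entry is hypothetical, and the sketch does not handle shortcuts such as @weekly or comment lines.

```python
def describe_cron_entry(entry):
    """Split a crontab line into its five schedule fields and the command."""
    # Split on whitespace at most 5 times so the command keeps its own spaces
    minute, hour, day_of_month, month, day_of_week, command = entry.split(None, 5)
    return {
        "minute": minute,
        "hour": hour,
        "day_of_month": day_of_month,
        "month": month,
        "day_of_week": day_of_week,
        "command": command,
    }


fields = describe_cron_entry("0 0 * * SUN /usr/bin/python3 PATH_TO_SCRIPT/filename.py")
for label, value in fields.items():
    print(label + ": " + value)
```

Reading the result, minute 0 and hour 0 give midnight, the two asterisks mean any day of the month and any month, and SUN restricts the run to Sundays.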

Save the crontab file and you’re good to go! Just note, your computer needs to be on at the time the cronjob is set to run.

Conclusion

Lighthouse has become a standard tool for SEOs to understand their pages’ well-being. It’s time to level up our usage of Lighthouse and move beyond DevTools. Automation and granular customization using Python is one great way to achieve that. Level up this script by inserting data into a database, or use the Google Sheets API to add results to an existing sheet! Please follow me on Twitter for feedback and to showcase interesting ways to extend the script. Enjoy!

Looking for something more comprehensive? See Hamlet Batista’s BrightonSEO slides on Automating Lighthouse on a big scale!
