SEOs often view Google’s cached copy of a page as a troubleshooting or information recovery method. Google caches some of the web pages it crawls, creating a snapshot of each page at the time of the crawl. Some resources or images often fail to render, so it’s rarely a perfect copy. It’s still useful to see what content is being cached and, if something is missing, to find out why. There also tends to be a correlation between how often Google caches a page and its perceived importance: high-value pages are often cached daily, whereas lesser-value pages can go weeks or months without a new cache date. Below I’ll show you how to scrape the cache date for a set of URLs so you can do the analysis yourself.

Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab

Starting the Script

First, we install the fake_useragent module to use in our request header. This can help ward off light abuse detection. Google is, for good reason, very sensitive to non-human requests. If you find yourself blocked you can try the proxy-requests module, but I didn’t have much success with it: many of the proxies were already blocked and the module took forever to run. The most successful tactic I’ve found is delaying calls. Note that if you are using Google Colab, put an exclamation mark in front of pip3, so !pip3.

pip3 install fake_useragent

Next, we import the modules we will use.

- requests will be used to request the Google cache page.

- re is for using the regular expression to grab the date from the cache page.

- fake_useragent is used to generate a random user-agent.

- numpy is used to select a random number from a list for the script delay.

- time will be used to cause the script delay.

- pandas is used to load a CSV file of URLs, if you have one, and to store the scrape results.

import requests
import re
from fake_useragent import UserAgent
import numpy as np
import time
import pandas as pd

Build the URL List

Let’s build the list of URLs whose cache dates we want to check.

urls = ['https://www.rocketclicks.com/seo/','https://www.rocketclicks.com/ppc/','https://www.rocketclicks.com/about-us/']

If you have a CSV file of URLs you can use the code below instead. Just change the CSV path and the name of the column where the URLs are stored. We load the CSV into a pandas dataframe and then convert the URL/address column to a list.

loadurls = pd.read_csv("PATH_TO_FILE")

urls = loadurls['COLUMN_NAME_WITH_URLS'].tolist()
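A spreadsheet export often contains blank cells or duplicate rows, and each one would cost a 10 to 20 second request slot later on. The sketch below is one possible way to tidy the list after .tolist(). The helper name clean_url_list is my own invention and not part of the original script, and it uses only the standard library.

```python
def clean_url_list(raw_urls):
    """Return URLs with blanks, non-strings and duplicates removed, order kept."""
    seen = set()
    cleaned = []
    for item in raw_urls:
        # pandas represents empty CSV cells as float NaN, so skip non-strings
        if not isinstance(item, str):
            continue
        url = item.strip()
        if url and url not in seen:
            seen.add(url)
            cleaned.append(url)
    return cleaned


# Illustrative input: one NaN, one whitespace-only cell, one duplicate
raw = ["https://www.rocketclicks.com/seo/", float("nan"), " ",
       "https://www.rocketclicks.com/seo/", "https://www.rocketclicks.com/ppc/"]
print(clean_url_list(raw))
```

You could then write urls = clean_url_list(urls) before starting the loop.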

Build Loop Counter and Pandas Dataframe

Later on we’re going to set a script delay between calls to Google. To avoid a pointless extra delay after the last URL, we’ll build a counter variable and find the total number of URLs in the list, so the script knows when it has reached the end. Note that you could instead move the delay to the top of the for loop we’ll soon build, but this seems more fun to do.

After that, we build our pandas dataframe container where we will store the scrape results for each URL. It has two simple columns, URL and Cache Date.

counter = 0

urlnum = len(urls)

d = {'URL': [], 'Cache Date': []}

df = pd.DataFrame(data=d)

Begin Processing URLs

Next let’s start the loop that processes the list of URLs. We’re going to use the cache: search operator, and for a clean search we’ll strip the protocol and the www. from each URL.

for url in urls:

    urlform = url.replace('https://','')

    urlform = urlform.replace('http://','')

    urlform = urlform.replace('www.','')
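One thing to be aware of: str.replace removes every occurrence of the text, so a URL that happens to contain www. somewhere in its path would be altered too. If that matters for your URLs, a minimal sketch that only touches the front of the string could look like this. The function name strip_scheme_and_www is hypothetical and not from the original script.

```python
def strip_scheme_and_www(url):
    """Remove a leading http:// or https:// and a leading www. from a URL."""
    for scheme in ("https://", "http://"):
        if url.startswith(scheme):
            url = url[len(scheme):]
            break
    if url.startswith("www."):
        url = url[len("www."):]
    return url


print(strip_scheme_and_www("https://www.rocketclicks.com/seo/"))
# rocketclicks.com/seo/
```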

Build the Cache Query

Now we can build the query using the cache: operator and the URL we just cleaned. Here we also build the delay list. This list contains the numbers 10 through 20 in increments of 1 and represents the possible seconds of delay between requests. I found this to be the most successful method of avoiding blocked requests; with anything quicker, Google starts blocking. Later we’ll use numpy to pick a random delay from this list. Lastly, we build the full query URL we are going to request. I’ve just started learning about “f-strings” so I wanted to use them in this script a bit. They’re just a modern method for inserting variables into strings.

    query = "cache:"+urlform

    delays = [*range(10, 21, 1)]

    queryurl = f"https://google.com/search?q={query}"
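The f-string drops the cleaned URL into the query as-is. That is fine for simple paths, but a URL carrying its own ?, & or = characters could be misread as extra search parameters. As a hedged alternative, the standard library’s urlencode can percent-encode the value first. The helper name build_query_url is my own and is only a sketch.

```python
from urllib.parse import urlencode


def build_query_url(urlform):
    """Build the Google search URL for a cache: query, percent-encoding the value."""
    query = "cache:" + urlform
    return "https://google.com/search?" + urlencode({"q": query})


print(build_query_url("rocketclicks.com/seo/"))
# https://google.com/search?q=cache%3Arocketclicks.com%2Fseo%2F
```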

Request Google Cached Page

Next, we will build our fake user agent and assign it to the header variable and make our request to Google. Fingers crossed!

    ua = UserAgent()

    header = {"user-agent": ua.chrome}

    resp = requests.get(queryurl, headers=header,verify=True)

    print(urlform)

Process Cached Page

The request has been made, so let’s check the response. If Google returns a 200 status code you are all good! If not, you may be blocked. Most of the time the block is temporary and clears after an hour or so. If the request is successful we can use RegEx to grab the cache date, which is just text on the page. Let me break down the RegEx for those who aren’t completely comfortable with it.

The cache date is in this format: Sep 12, 2020

[a-zA-Z]{3}\s[0-9]{1,2},\s[0-9]{4}

[a-zA-Z]{3} matches 3 letters, upper or lower case

\s[0-9]{1,2} matches a whitespace character followed by 1 or 2 digits

,\s matches a comma followed by a whitespace character

[0-9]{4} matches 4 digits
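It can help to try the pattern on a sample string before pointing it at Google. The sketch below does that and also converts the match into a real date so it can be sorted or compared later. The sample text is made up for illustration, and the function name extract_cache_date is hypothetical. The letter class is written as [a-zA-Z] because a range ending in lowercase z after uppercase A also admits a few punctuation characters such as the underscore.

```python
import re
from datetime import datetime

# Letters only; a range like [A-z] would also accept [ \ ] ^ _ and `
CACHE_DATE_RE = re.compile(r"[a-zA-Z]{3}\s[0-9]{1,2},\s[0-9]{4}")


def extract_cache_date(page_text):
    """Return the first 'Mon D, YYYY' date in page_text as a date, or None."""
    match = CACHE_DATE_RE.search(page_text)
    if match is None:
        return None
    try:
        # %b parses abbreviated English month names under the default locale
        return datetime.strptime(match.group(0), "%b %d, %Y").date()
    except ValueError:
        # Three letters that are not a month name, e.g. "abc 12, 2020"
        return None


# Made-up sample text, not a real Google response
sample = "It is a snapshot of the page as it appeared on Sep 12, 2020 06:30:12 GMT."
print(extract_cache_date(sample))          # 2020-09-12
print(extract_cache_date("no date here"))  # None
```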

After matching the cache date we create a dictionary object using the URL and cache date. Then we append it to the dataframe we created earlier. Each URL will be added to the dataframe this way.

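    if resp.status_code == 200:

        # Raw string so \s reaches the regex engine untouched
        getcache = re.search(r"[a-zA-Z]{3}\s[0-9]{1,2},\s[0-9]{4}",resp.text)

        # re.search returns None when no date is found on the page
        g_cache = getcache.group(0) if getcache else "Not found"

        newdate = {"URL":urlform,"Cache Date":g_cache}

        # DataFrame.append was removed in pandas 2.0; pd.concat does the same job
        df = pd.concat([df, pd.DataFrame([newdate])], ignore_index=True)

    else:

        print("Google may have blocked you, try again in an hour")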
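The else branch treats every non-200 response as a block. If you want slightly more detail in your log, here is a rough sketch that separates a few cases. It assumes a 429 signals rate limiting (its standard HTTP meaning) and that a 404 means there is no cached copy; both are assumptions, so check them against the responses you actually see. The function name describe_status is hypothetical.

```python
from http import HTTPStatus


def describe_status(status_code):
    """Map a response status code to a short, human-readable outcome."""
    if status_code == HTTPStatus.OK:
        return "ok"
    if status_code == HTTPStatus.TOO_MANY_REQUESTS:  # 429, assumed to mean throttling
        return "rate limited, wait before retrying"
    if status_code == HTTPStatus.NOT_FOUND:  # 404, assumed to mean no cached copy
        return "no cached copy found"
    return f"unexpected status {status_code}"


for code in (200, 429, 404, 503):
    print(code, describe_status(code))
```

In the script you would call describe_status(resp.status_code) and print the result instead of the fixed message.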

Set Script Delay and Print Dataframe

Finally, we update our counter and check whether there are any more URLs in the list. If there are, it’s time to set that delay. We use the numpy module to randomly select a number from the delay list we created earlier and then pass it to the sleep() function, which pauses the script for that many seconds. Randomizing the pause makes the timing of our requests less regular and so harder for Google to spot as a pattern; after all, humans don’t make 100 searches at exactly 0.1s intervals. At last, we display the cache date information we’ve been collecting.

    counter += 1

    if counter != urlnum:

        delay = np.random.choice(delays)

        #print("sleeping for "+str(delay)+" seconds" + "\n")

        time.sleep(delay)
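For comparison, the same "pause between items but not after the last one" idea can be sketched with the standard library alone, swapping numpy for random.randint and the manual counter for enumerate. This is an alternative arrangement, not the script above. The function name run_with_delays and its parameters are my own, and the sleep function is passed in so the demo can run without actually waiting.

```python
import random
import time


def run_with_delays(items, handler, low=10, high=20, sleep=time.sleep):
    """Call handler(item) for each item, pausing a random whole number of
    seconds between low and high (inclusive) after every item except the last."""
    for index, item in enumerate(items, start=1):
        handler(item)
        if index != len(items):
            sleep(random.randint(low, high))


# Demo with a stand-in for sleep that only records the chosen pauses
pauses = []
handled = []
run_with_delays(["a", "b", "c"], handled.append, sleep=pauses.append)
print(handled, len(pauses))
# ['a', 'b', 'c'] 2
```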

df

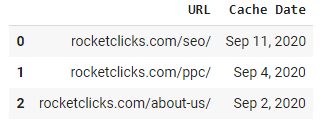

Example Output

Conclusion

You can see how easy it is to grab cache dates for your URLs. The biggest challenge is keeping Google off your back and finding ways to prevent getting temporarily blocked. From here you can store this information in a database, use it in reporting, or insert the data into another script. Enjoy! Don’t forget to follow me and let me know how you are using this script and extending it.

Google Cache Date FAQ

How can SEOs retrieve the Google Cache date for URLs using Python?

Leverage Python scripts to automate the process of querying Google’s cache and extracting the cache date for specific URLs.

Is authentication required for accessing Google’s cache date with Python?

No authentication is typically required for retrieving the Google Cache date using Python, as it involves scraping publicly available information.

What Python libraries are commonly used for extracting data from web pages, including cache dates?

Beautiful Soup and requests are commonly used Python libraries for web scraping, enabling the extraction of relevant information, including Google Cache dates.

Can Python scripts be used to retrieve cache dates for multiple URLs in a batch process?

Yes, Python scripts can be designed to iterate through a list of URLs, automating the process of retrieving Google Cache dates for multiple URLs.

Where can I find examples and documentation for using Python to retrieve Google Cache dates for URLs?

Explore online resources, tutorials, and documentation related to web scraping with Python, specifically focusing on extracting data, such as cache dates, from Google’s cache pages.
