One of SEO’s oldest and still best ways to understand keywords and how Google treats them is to study the search engine result pages. Often times when doing keyword research we’ll end up with a large list of keywords. We’re then faced with validating and cleaning the list of prospects. We care about cleaning because although they may all look like unique opportunities, oftentimes they may be duplicative, synonymous, or topically similar enough that we can collapse one or more into each other to avoid cannibalization.

On the contrary, we can also use this understanding as a way to understand which words we may want to include in whatever pages we’re researching. Lastly, this can be used as the beginning of clustering keywords via their similarity to each other. However, there will need to be some additional logic added in for that to be useful.

Over the past couple of years, SERP comparison tools have started to catch on. Recently ahrefs released a SERP comparison tool and Keyword Insights now allows for a deep SERP analysis of 3 keywords at a time. These are sophisticated and wonderful SEO tools, but what if we could build our own SERP comparison tool that could compare more than 3 keywords? Turns out we can and very easily! So let’s start that framework and you can build into it a lot more logic and sophistication for your needs.

Table of Contents

Requirements and Assumptions

- Python 3 is installed and basic Python syntax is understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab

- SERPApi or similar service like Value SERP (will need to modify API call and response handling if not SERPApi)

- Be careful with copying the code as indents are not always preserved well

Install Modules

The two modules that likely need to be installed for your environment are the SERPapi module vaguely called google-search-results and tldextract. If you are in a notebook don’t forget the exclamation mark in front.

pip3 install google-search-results

pip3 install tldextract

Import Modules

- requests: for calling both APIs

- pandas: storing the result data

- serpapi: interfacing with the serpapi api

- difflab: for calculating percentage difference comparing lists

- tldextract: easy extraction of domains from links

- seaborn: a little pandas table conditional formatting fun

import pandas as pd import requests from serpapi import GoogleSearch import difflib import tldextract import seaborn as sns

Calculate SERP Difference Percentages

Here we create the first of 3 functions that the main script will execute. This function will take the list of domains or URLs in the SERP for each keyword and compare each to each other, but not to itself. For each comparison, we send the two keyword result domain/URL lists into the difflib module. It returns a difference ratio for those two lists. Order in those lists does not matter. Once a keyword SERP result set has been compared with every other result set in the group, the difference float ratios are averaged, rounded, and recorded. These numbers are then multiplied by 100 to get the integer percentage as the final number and returned back to the main script (if half of the domains are different on average across the comparisons the returned number for that keyword will be 50%).

def get_keyword_serp_diffs(serp_comp):

diffs = []

keyword_diffs = []

serp_comp = serp_comp

for x in serp_comp:

diffs = []

for y in serp_comp:

if x != y:

sm=difflib.SequenceMatcher(None,x,y)

diffs.append(sm.ratio())

try:

keyword_diffs.append(round(sum(diffs)/len(diffs),2))

except:

keyword_diffs.append(1)

keyword_diffs = [int(x*100) for x in keyword_diffs]

return keyword_diffs

Extract Domains From SERP

Now that we have our search results JSON from the API we need to extract the domains from the links. That is the only part we care about, just that list, not even the rankings. We use the tldextract module to assist in easily extracting the domain from the entire link. If you want a more granular comparison, you can alter this snippet to include the entire URL or the domain + path. This will give a much more accurate comparison result for keywords that are very close to each other in meaning. So you must choose, is a comparison of similar domains good enough, or do you need exact URL comparisons? In the end, these domains or URLs are put in a list and sent back to the main script.

def get_serp_comp(results):

serp_comp = []

for x in results["organic_results"]:

ext = tldextract.extract(x["link"])

domain = ext.domain + '.' + ext.suffix

serp_comp.append(domain)

return serp_comp

Call SERP API

Now we create the first function which is to call SERPAPI and retrieve the SERPS JSON for the given keyword in the list generated. There are several parameters here that you may want to adjust depending on your language and location preferences. You may also choose a greater number of organic results for a larger sample. I choose to only compare the first page, so 8-9 results. There are many more parameters available to use in their documentation.

def serp(api_key,query):

params = {

"q": query,

"location": "United States",

"hl": "en",

"gl": "us",

"google_domain": "google.com",

"device": "desktop",

"num": "9",

"api_key": api_key}

search = GoogleSearch(params)

results = search.get_dict()

return results

Loop Though Keywords and Run Functions

Now we construct the actual beginning of the script. We have our keywords list up top which again I’d recommend limiting to 20 or fewer keywords otherwise the meaning starts to get lost depending on how similar or different the keywords are that you put in the list. You should always be comparison topically similar keywords.

Once we have our lists and api_key for SERP API added we can loop through the keyword list. We then execute the SERP API function to get the SERP results. Then we feed those results into the next function that extracts the organic domains or URLs. Then we take those lists and feed them into the SERP comparison function that calculates the difference between them and returns the score. The last part is some list comprehension to flatten the list and the list is returned as a list of lists when we need it flat to inject into the dataframe to display.

keywords = ['International Business Machines Corporation','IBM','big blue','International Business Machines','Watson'] serp_comp_keyword_list = [] serp_comp_list = [] api_key = "" for x in keywords: results = serp(api_key,x) serp_comp = get_serp_comp(results) serp_comp_list.append(serp_comp) serp_comp_keyword = get_keyword_serp_diffs(serp_comp_list) serp_comp_keyword_list.append(serp_comp_keyword) serp_comp_keyword_list = [element for sublist in serp_comp_keyword_list for element in sublist]

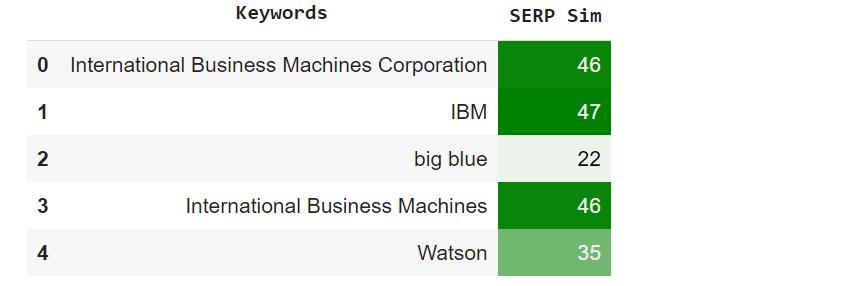

Inject Data into Dataframe and Display

The last part is to create the Pandas dataframe with two simple columns and inject the keywords and serp_comp_keyword_list lists respectively into those columns. We’ll apply a little seaborn color mapping for easy identification of high-similarity keywords according to the SERPs. You may want to sort by the score for longer keyword sets.

df = pd.DataFrame(columns = ['Keyword','Keyword SERP Sim'])

df['Keyword'] = keywords

df['Keyword SERP Sim'] = serp_comp_keyword_list

cm = sns.light_palette("green",as_cmap=True)

df2 = df

df2 = df2.style.background_gradient(cmap=cm)

df2

Sample Output

I wanted to see how similar various the SERPs were for names for IBM. The results are not surprising but confirmational (SERP Sim is number is a percentage). The lesson here is that Google treats “big blue” and “Watson” a bit more ambiguous and thus we can still use these keywords, but they should not be alone when describing IBM, IBM, or a similar keyword is needed for context.

Conclusion

So now you have a framework for analyzing your keyword SERP differences SEO. Remember to try and make my code even more efficient and extend it in ways I never thought of! I can think of a few ways we can extend this script:

- Extract and compare by URL not just domain for granularity

- Pipe in search volume and other metrics

- Use Value SERP instead of SERP API

- Compare SERP features in each keyword SERP

- Sort dataframe by score values

If you’re into SERP analysis, see my other tutorial on calculating readability scores.

Now get out there and try it out! Follow me on Twitter and let me know your Python SEO applications and ideas!

SERP Similarity FAQ

How can Python be used to compare keyword SERP (Search Engine Results Page) similarity in bulk for SEO analysis?

Python scripts can be developed to fetch and process SERP data for multiple keywords, allowing for the bulk comparison of SERP similarity and gaining insights into search result variations.

Which Python libraries are commonly used for comparing keyword SERP similarity in bulk?

Commonly used Python libraries for this task include requests for fetching SERP data, beautifulsoup for HTML parsing, and pandas for data manipulation.

What specific steps are involved in using Python to compare keyword SERP similarity in bulk?

The process includes fetching SERP data for selected keywords, preprocessing the data, implementing similarity measures, and using Python for analysis and insights related to SEO strategies.

Are there any considerations or limitations when using Python for bulk comparison of keyword SERP similarity?

Consider the variability in SERP layouts, the choice of similarity metrics, and the need for a clear understanding of the goals and criteria for comparison. Regular updates to the analysis may be necessary.

Where can I find examples and documentation for comparing keyword SERP similarity with Python?

Explore online tutorials, documentation for relevant Python libraries, and resources specific to SEO analysis for practical examples and detailed guides on using Python to compare keyword SERP similarity in bulk.

- Build a Custom Named Entity Visualizer with Google NLP - June 19, 2024

- Storing CrUX CWV Data for URLs Using Python for SEOs - January 20, 2024

- Scraping YouTube Video Page Metadata with Python for SEO - January 4, 2024