Readability scores are not a verified SEO ranking factor. I repeat: readability scores are not a verified SEO ranking factor. So why care? Because a reading level that doesn't match your audience may lead to higher bounce rates, lower engagement, and fewer conversions. It's quite simple: your audience expects content on a given subject to be written a certain way. When your content matches your audience's expectations, magic happens.

In this tutorial, I'm going to show you step by step how to calculate the reading level of your SERP competitors (plus reading time and word count) so you can compare their scores with your own. But first, let's chat about the Flesch–Kincaid readability tests, which we'll be using. Note that there are dozens of other readability algorithms; the Flesch tests just happen to be among the most commonly used. Also note that Screaming Frog can calculate readability easily during a crawl, but hey, that costs money. This script is free.

The Flesch–Kincaid family includes two related tests. Both evaluate complexity from the average number of words per sentence and the average number of syllables per word. The Flesch–Kincaid Grade Level, developed for the US Navy, expresses the result as the US school grade a reader would need to comprehend the text, so a higher number means harder text. The Flesch Reading Ease score, which the script below calculates with textstat's flesch_reading_ease function and which the table below describes, usually ranges from 0 to 100. On that scale, a higher number means the text is easier to read, and a lower number means many people may struggle with it.
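Both scores are straightforward arithmetic once you have word, sentence, and syllable counts. Here is a minimal sketch using the standard published coefficients for each formula. The function name flesch_scores and the sample counts are my own, and in the actual script textstat handles the harder part (counting sentences and syllables) for you.

```python
def flesch_scores(words, sentences, syllables):
    """Return (Flesch Reading Ease, Flesch-Kincaid Grade Level) from raw counts."""
    words_per_sentence = words / sentences
    syllables_per_word = syllables / words
    # Reading Ease: higher means easier (roughly 0-100 for typical prose)
    reading_ease = 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
    # Grade Level: approximate US school grade needed to follow the text
    grade_level = 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
    return reading_ease, grade_level


# Hypothetical counts: 100 words, 5 sentences, 150 syllables
ease, grade = flesch_scores(100, 5, 150)
print(round(ease, 1), round(grade, 1))  # 59.6 9.9
```

With 20 words per sentence and 1.5 syllables per word, the Reading Ease lands near 59.6 (the 10th-to-12th-grade band in the table below) and the Grade Level near 9.9, so the two tests roughly agree.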

| Reading Ease score | School level (US) | Notes |
|---|---|---|
| 100.0–90.0 | 5th grade | Very easy to read. Easily understood by an average 11-year-old student. |
| 90.0–80.0 | 6th grade | Easy to read. Conversational English for consumers. |
| 80.0–70.0 | 7th grade | Fairly easy to read. |
| 70.0–60.0 | 8th & 9th grade | Plain English. Easily understood by 13- to 15-year-old students. |
| 60.0–50.0 | 10th to 12th grade | Fairly difficult to read. |
| 50.0–30.0 | College | Difficult to read. |
| 30.0–10.0 | College graduate | Very difficult to read. Best understood by university graduates. |
| 10.0–0.0 | Professional | Extremely difficult to read. Best understood by university graduates. |

Source: Wikipedia
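If you want the script to label scores the way this table does, a small lookup is enough. This is a hypothetical helper (reading_ease_band is not part of textstat). Because the table's ranges share their endpoints (90.0 appears in two rows, for example), it assumes a score sitting exactly on a boundary belongs to the easier band, and it treats anything below 10, including negative scores, as Professional.

```python
# Lower bound of each Flesch Reading Ease band, easiest first (from the table above)
BANDS = [
    (90.0, "5th grade"),
    (80.0, "6th grade"),
    (70.0, "7th grade"),
    (60.0, "8th & 9th grade"),
    (50.0, "10th to 12th grade"),
    (30.0, "College"),
    (10.0, "College graduate"),
]


def reading_ease_band(score):
    """Map a Flesch Reading Ease score to the US school level in the table."""
    for lower_bound, level in BANDS:
        # A score exactly on a boundary (e.g. 90.0) lands in the easier band
        if score >= lower_bound:
            return level
    # Below 10.0, including negative scores, counts as Professional
    return "Professional"


print(reading_ease_band(59.6))  # 10th to 12th grade
```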

Important: A higher or lower number does not indicate performance. One is not better than the other. It all depends on your audience. If your content is about theoretical physics, maybe lower is better. If you’re teaching 8-year-olds about trees, then maybe higher is better.

Now that you have some necessary background, let’s dive into the script!


Requirements and Assumptions

- Python 3 is installed and basic Python syntax is understood

- A SERP scraping API such as SerpAPI or ValueSERP

- Access to a Linux installation (I recommend Ubuntu) or Google Colab

- Be careful when copying the code, as indentation is not always preserved well

- A limitation of the script is that it can't scrape the content of URLs that are rendered with JavaScript. These URLs will output extremely low word counts. This usually affects about 10% of SERPs.
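Because those near-empty pages can skew the results, one option is to flag them before you read the medians. Here is a minimal sketch that assumes a hypothetical helper, flag_thin_pages, and an arbitrary 200-word threshold. Neither is part of the original script. You would pass it the links and word_counts lists the script builds later.

```python
def flag_thin_pages(urls, word_counts, min_words=200):
    """Return the URLs whose scraped word count is below min_words.

    min_words=200 is an arbitrary placeholder, not a tested cutoff.
    """
    return [url for url, count in zip(urls, word_counts) if count < min_words]


# Hypothetical data shaped like the script's links and word_counts lists
print(flag_thin_pages(["https://a.example/", "https://b.example/"], [35, 1800]))
# ['https://a.example/']
```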

Install and Import Modules

Listed below are the modules we’ll be using in this script:

- google-search-results: for calling SerpAPI to scrape the SERP

- textstat: includes the Flesch–Kincaid readability algorithms

- bs4: to help scrape URL content

- requests: for connecting to URLs and APIs

- pandas: for storing the data

- statistics: for calculating the median

- json: for processing the return data from the SerpAPI

Let's start by installing the SerpAPI client, textstat, and bs4 modules. Remember that if you're using a notebook, you need to add an exclamation mark at the beginning of each command.

```bash
pip3 install google-search-results
pip3 install textstat
pip3 install bs4
```

Now we import the libraries we need to start the script. Each library's purpose is listed above.

```python
import requests
import textstat
from bs4 import BeautifulSoup
import pandas as pd
from serpapi import GoogleSearch
import json
from statistics import median
```

Create SerpAPI Function

Next, we'll create the basic function for calling SerpAPI. It takes the query, the number of results requested, and your API key. Adjust the parameters as needed, and be sure to check the SerpAPI documentation. If you're using a different SERP scraper, such as ValueSERP, adjust this function to match what its API call requires.

```python
def serp(query, num_results, api_key):
    # Search settings sent to SerpAPI; change location, language, or device as needed
    params = {
        "q": query,
        "location": "United States",
        "hl": "en",
        "gl": "us",
        "google_domain": "google.com",
        "device": "desktop",
        "num": num_results,
        "api_key": api_key,
    }
    search = GoogleSearch(params)
    results = search.get_dict()  # the full SERP response as a Python dict
    return results
```

Create SERP URL Scraper

Next is the function that takes in the response from SerpAPI. The response contains all the details of the SERP for your query (the Python client returns it as a dictionary parsed from the JSON). We simply loop over all the organic results and store each URL in a Python list for later. If the mydomain variable, which represents your site, appears in a result's URL, we store that URL and its rank separately.

```python
def get_serp_comp(results, mydomain):
    serp_links = []
    mydomain_url = "n/a"   # defaults used if your site doesn't rank
    mydomain_rank = "n/a"
    # Number results from 1 so the count matches the SERP position
    for count, x in enumerate(results.get("organic_results", []), start=1):
        serp_links.append(x["link"])
        if mydomain in x["link"]:
            mydomain_url = x["link"]
            mydomain_rank = count
    return serp_links, mydomain_url, mydomain_rank
```
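One caveat with the check above: `mydomain in x["link"]` is a plain substring test, so example.com also matches notexample.com, and if several results match, the last one wins. Below is a sketch of a stricter variant that compares hostnames using urllib.parse and keeps the highest-ranking match. get_serp_comp_strict and the fake_results dict are hypothetical stand-ins (the dict mimics only the organic_results and link fields the script reads). The sketch assumes mydomain is a lowercase root domain such as example.com.

```python
from urllib.parse import urlparse


def get_serp_comp_strict(results, mydomain):
    """Variant of get_serp_comp that matches your site by hostname.

    Returns (serp_links, mydomain_url, mydomain_rank) like the original.
    """
    serp_links = []
    mydomain_url = "n/a"
    mydomain_rank = "n/a"
    for count, result in enumerate(results.get("organic_results", []), start=1):
        link = result["link"]
        serp_links.append(link)
        host = urlparse(link).hostname or ""  # .hostname is already lowercase
        # Accept the root domain itself or any subdomain (www., blog., ...)
        is_mine = bool(mydomain) and (host == mydomain or host.endswith("." + mydomain))
        # Keep only the first, highest-ranking match
        if is_mine and mydomain_url == "n/a":
            mydomain_url = link
            mydomain_rank = count
    return serp_links, mydomain_url, mydomain_rank


# Hand-made stand-in for a SerpAPI response, with only the fields used above
fake_results = {"organic_results": [
    {"link": "https://www.example.com/xbox-specs"},
    {"link": "https://www.notexample.com/xbox"},
]}
links, url, rank = get_serp_comp_strict(fake_results, "example.com")
print(url, rank)  # https://www.example.com/xbox-specs 1
```

With the original substring check, this same input would report the notexample.com URL at rank 2. An empty mydomain also matches nothing here, whereas `'' in link` is always True in Python.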

Process URL Content and Calculate Readability

The next part is a large function, so I'll break down what happens step by step. It takes the SERP URL list, scrapes each competitor page, and calculates its readability score.

- Create lists to store our data, set a few default values, and define the request headers we send when scraping sites

- Loop through the SERP links we found earlier

- Scrape each URL's content, filtering out common boilerplate areas

- Pass the current URL's content to textstat and get back the readability score

- Store the rounded readability score, then calculate the reading time. textstat returns the reading time in seconds, so we divide by 60 to get minutes. We assume a fairly standard reading speed of 25 ms per character.

- Calculate and store the word count

- If the page belongs to you, store its scores separately as well

- Once done looping through the URLs, calculate the median reading level, reading time, and word count across all results
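The reading-time step is the least obvious one. As far as I can tell, textstat's reading_time adds up the characters in each whitespace-separated word, multiplies the total by ms_per_char, and returns seconds, which is why the script divides by 60. Here is a standalone sketch of that arithmetic. estimate_reading_time is a hypothetical name, and because textstat also rounds its result, its output may differ slightly.

```python
def estimate_reading_time(text, ms_per_char=25):
    """Estimated reading time in seconds for text at ms_per_char per character."""
    # Count characters word by word so whitespace is not counted
    chars = sum(len(word) for word in text.split())
    return chars * ms_per_char / 1000  # milliseconds -> seconds


print(estimate_reading_time("hello world"))  # 0.25
```

For example, a 1,500-word page averaging 5 characters per word has 7,500 characters. At 25 ms per character, that's 187.5 seconds, or about 3.1 minutes, which the script rounds to 3.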

```python
def get_reading_level(serp_links, mydomain):
    reading_levels = []
    reading_times = []
    word_counts = []
    mydomain_reading_level = "n/a"
    mydomain_reading_time = "n/a"
    mydomain_word_count = "n/a"
    headers = {'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36'}

    for x in serp_links:
        # The timeout stops one slow site from hanging the whole run
        res = requests.get(x, headers=headers, timeout=30)
        soup = BeautifulSoup(res.text, 'html.parser')

        # Remove common boilerplate and non-content elements before extracting text
        for tag in soup(["script", "noscript", "nav", "style", "input", "meta", "label", "header", "footer", "aside", "head"]):
            tag.decompose()
        page_text = soup.get_text().lower()

        # Flesch Reading Ease, rounded to a whole number
        reading_level = int(round(textstat.flesch_reading_ease(page_text)))
        reading_levels.append(reading_level)

        # textstat returns seconds; store minutes
        reading_time = textstat.reading_time(page_text, ms_per_char=25)
        reading_times.append(round(reading_time / 60))

        word_count = textstat.lexicon_count(page_text, removepunct=True)
        word_counts.append(word_count)

        # Keep your own page's metrics separately
        if mydomain in x:
            mydomain_reading_level = reading_level
            mydomain_reading_time = round(reading_time / 60)
            mydomain_word_count = word_count

    # Note: despite the "_mean" names, these variables hold medians
    reading_levels_mean = median(reading_levels)
    reading_times_mean = median(reading_times)
    word_counts_median = median(word_counts)

    return reading_levels, reading_times, word_counts, reading_levels_mean, reading_times_mean, word_counts_median, mydomain_reading_level, mydomain_reading_time, mydomain_word_count
```
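The script reports medians rather than means (the variables are named with `_mean`, but they hold medians). That makes sense when one or two pages are outliers, such as the JavaScript-rendered pages mentioned earlier. The small hypothetical helper summarize below shows the difference. It also guards against an empty list, because statistics.median raises StatisticsError when it gets no data.

```python
from statistics import mean, median


def summarize(values):
    """Return (median, mean) of the numeric entries, skipping "n/a" placeholders."""
    numbers = [v for v in values if isinstance(v, (int, float))]
    if not numbers:
        # statistics.median() raises StatisticsError on empty data
        return "n/a", "n/a"
    return median(numbers), mean(numbers)


# Hypothetical word counts where one JavaScript-rendered page scraped almost empty
med, avg = summarize([1200, 1350, 1500, 1600, 40])
print(med, avg)
```

Here the median is 1,350 and the mean is 1,138. Without the 40-word page, they would be 1,425 and 1,412.5, so the single outlier moves the mean by about 275 words but the median by only 75.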

Initiate the Functions

Here is where the script actually starts, with a few basic variables to set. keyword is the query whose SERP we analyze. num_results is how many SERP positions to return. The mydomain variable identifies your site, so fill it in. The serp() function calls SerpAPI, get_serp_comp() extracts just the organic URLs from the results, and get_reading_level() loops through that URL list to scrape the content and calculate reading levels, reading times, and word counts.

```python
keyword = 'xbox specifications'
num_results = 10
api_key = ''   # add your SerpAPI key
mydomain = ''  # add just your root domain, no www (left empty, it matches every URL)

results = serp(keyword, num_results, api_key)
links, mydomain_url, mydomain_rank = get_serp_comp(results, mydomain)
reading_levels, reading_times, word_counts, reading_levels_mean, reading_times_mean, word_counts_median, mydomain_reading_level, mydomain_reading_time, mydomain_word_count = get_reading_level(links, mydomain)
```

Output URL Data to Dataframe

Now we output all the data we calculated into a dataframe. The style properties are just a quality-of-life addition that left-aligns the table values.

```python
df = pd.DataFrame(columns=['url', 'reading ease', 'reading time (m)', 'word count'])
df['url'] = links
df['reading ease'] = reading_levels
df['reading time (m)'] = reading_times
df['word count'] = word_counts

# Left-align the cell values and the column headers
df = df.style.set_properties(**{'text-align': 'left'})
df = df.set_table_styles([dict(selector='th', props=[('text-align', 'left')])])
df
```

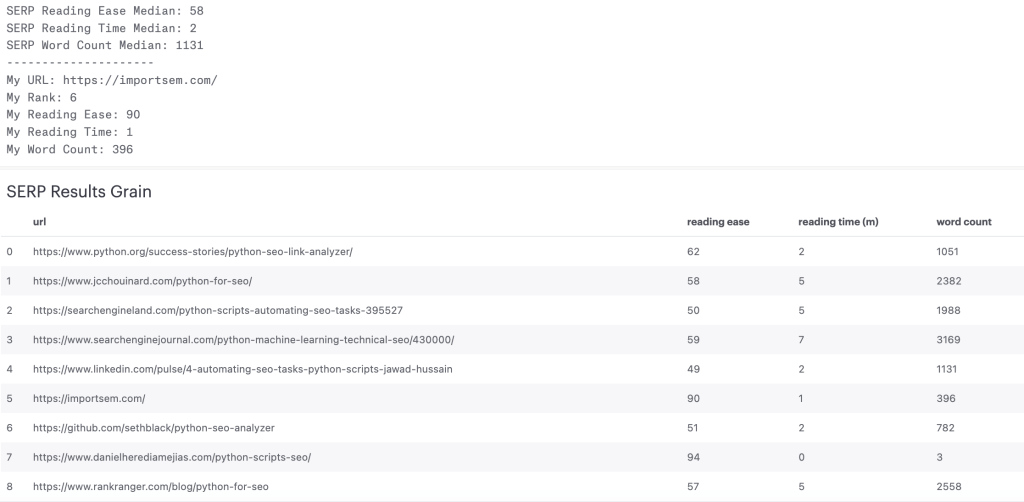

Output Aggregate Data

Here I got lazy, so we just print the median metrics alongside your site's metrics. You could also store them and output them in a dataframe.

```python
print("SERP Reading Ease Median: " + str(reading_levels_mean))
print("SERP Reading Time Median: " + str(reading_times_mean))
print("SERP Word Count Median: " + str(word_counts_median))
print("---------------------")
print("mydomain URL: " + str(mydomain_url))
print("mydomain Rank: " + str(mydomain_rank))
print("mydomain Reading Ease: " + str(mydomain_reading_level))
print("mydomain Reading Time: " + str(mydomain_reading_time))
print("mydomain Word Count: " + str(mydomain_word_count))
```
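If you'd rather see the gap between your page and the SERP directly, here is a small sketch that pairs each metric with its median and the difference. compare_to_serp and the numbers in the example are hypothetical. In the script, you would build mine from mydomain_reading_level, mydomain_reading_time, and mydomain_word_count, and build serp_medians from reading_levels_mean, reading_times_mean, and word_counts_median.

```python
def compare_to_serp(mine, serp_medians):
    """Pair each metric's value for your page with the SERP median.

    Returns {metric: (your value, SERP median, your value minus the median)}.
    The difference is "n/a" when your page has no numeric value (e.g. not ranking).
    """
    comparison = {}
    for metric, median_value in serp_medians.items():
        value = mine.get(metric, "n/a")
        if isinstance(value, (int, float)):
            diff = value - median_value
        else:
            diff = "n/a"
        comparison[metric] = (value, median_value, diff)
    return comparison


# Hypothetical numbers for illustration only
serp_medians = {"reading ease": 55, "reading time (m)": 4, "word count": 1400}
mine = {"reading ease": 62, "reading time (m)": 3, "word count": 1100}
for metric, (value, median_value, diff) in compare_to_serp(mine, serp_medians).items():
    print(f"{metric}: yours={value} SERP median={median_value} diff={diff}")
```

To get the dataframe version, passing the result to `pd.DataFrame.from_dict(comparison, orient="index", columns=["yours", "SERP median", "difference"])` should give you one row per metric.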

Example Output

Conclusion

Readability is not a confirmed SEO ranking factor, so treat it as roughly item 95 on a list of 100 things to focus on. In general, this is a good measure of how your content stacks up against your competition, but that doesn't mean your competition is writing at the level they should be either. Mostly, this is meant to get you thinking about the audience for your content and how to write it in a way they will be most receptive to. Remember to keep extending these scripts and customizing them to your own needs. This is just the beginning.

Now get out there and try it out! Follow me on Twitter and let me know your Python SEO applications and ideas!

SERP Readability FAQ

How can Python be used to calculate SERP (Search Engine Results Page) rank readability scores?

Python scripts can be developed to fetch SERP data, analyze the content’s readability, and calculate readability scores for each result, providing insights into the readability of top-ranking pages.

Which Python libraries are commonly used for calculating SERP rank readability scores?

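Commonly used Python libraries for this task include requests for fetching pages, BeautifulSoup (bs4) for HTML parsing, and textstat for readability formulas such as Flesch Reading Ease. A SERP API client, such as SerpAPI's google-search-results package, handles fetching the SERP data itself.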

What specific steps are involved in using Python to calculate SERP rank readability scores?

The process involves fetching the SERP for a target query, scraping the text of each ranking URL, scoring that text with a readability formula, and comparing the SERP medians with your own page's scores.

Are there any considerations or limitations when using Python for this purpose?

Consider the variability in SERP content, the choice of readability metrics, and the need for a clear understanding of the goals and criteria for calculating readability scores. Regular updates to the analysis may be necessary.

Where can I find examples and documentation for calculating SERP rank readability scores with Python?

Explore online tutorials, documentation for relevant Python libraries, and resources specific to readability analysis and SEO strategies for practical examples and detailed guides on using Python for this purpose.
