Generate a 404 Redirect List for SEO with Polyfuzz Using Python

We’ve all had a client where we pop in their Google Search Console or ahrefs account and see they have hundreds or thousands of reported 404s. Perhaps from a migration or perhaps a decade of regular pruning. This tutorial won’t cover evaluating whether they are worth redirecting or not, but rather simply the case if you do decide the lot is worth redirecting. You then have a couple of choices. You can either grind through them in a Google Sheet manually matching them or you could have Python do it for you in 30 seconds at a reasonable quality level.

In this tutorial, I’m going to show you how easy it is to match 404s to existing content and generate a redirect list using a Python module called polyfuzz for fuzzy matching. We are simply generating a similarity score between a broken URL and a current URL. See notes below for best results:

Four notes:

- Not all matches are confident. In this script, I only keep matches with a similarity score equal to or greater than 0.875 (out of 1).

- I would still manually scan the results for matches that don’t make sense.

- I would not use this script on URL lists greater than 10,000 as the processing can be slow.

- Works best with SEO-friendly URLs, not if you’re using URLs with product IDs or category IDs.

Table of Contents

Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab.

- Site URL CSV file for current URLs with a URL column labeled “Address”

- Site URL CSV file for 404 URLs with a URL column labeled “URLs”

Import Modules

- pandas: for importing the URL CSV files, storing and exporting results

- polyfuzz: handles the fuzzy matching processing

Before we begin, remember to watch the indents of anything you copy here as sometimes the code snippets don’t copy perfectly.

First, we’ll install the polyfuzz module in your terminal that will do the bulk of the work for us! If you are using Google Colab put an exclamation mark at the beginning.

pip install polyfuzz

Next, we import the polyfuzz and pandas modules

from polyfuzz import PolyFuzz import pandas as pd

Now we simply import the two CSV files that contain both our current live URLs and then the broken 404 list. We also ask for your root domain to filter it out as it will skew the fuzzy matching results.

Import the URL CSV files

broken = pd.read_csv("404.csv")

current = pd.read_csv("current.csv")

ROOTDOMAIN = "" #Ex. https://www.domain.com

We now transform our URL columns in both the broken URL dataframe and current URL dataframe while filtering out the root domain as this will skew similarity scores for each match since the domain is a commonality for every URL. We essentially just want the path.

Convert dataframes to Lists

broken_list = broken["URL"].tolist() broken_list = [sub.replace(ROOTDOMAIN, '') for sub in broken_list] current_list = current["Address"].tolist() current_list = [sub.replace(ROOTDOMAIN, '') for sub in current_list]

Create the Polyfuzz model

Next, we create the EditDistance model of Polyfuzz and pipe in the two lists.

model = PolyFuzz("EditDistance")

model.match(broken_list, current_list)

We now use the get_matches() function of Polyfuzz to generate the matches. This can take longer depending on the number of URLs you have to process.

df = model.get_matches()

That’s all the heavy lifting. It’s amazing how much you can do with so little code! Now we just sort the dataframe by similarity decreasing and round the values to 3 places.

Polish and Prune the Results

df = df.sort_values(by='Similarity', ascending=False) df["Similarity"] = df["Similarity"].round(3)

From my testing the matching gets a little suspect when the score is less than .857, so below I am dropping any rows that are less than that. Feel free to adjust that number for whatever seems to work for you.

index_names = df[ df['Similarity'] < .857 ].index amt_dropped = len(index_names) df.drop(index_names, inplace = True)

Lastly, for redirecting we’ll add back the domain name to make the “from” part of the list go to an absolute URL.

df["To"] = ROOTDOMAIN + df["To"]

print("Rows Dropped: " + amt_dropped)

df

Conclusion

So there you have it! How easy was that!? You can now redirect some of your 404 pages found in GSC. With this framework, you can extend it in so many interesting directions.

Thanks to @Hou_Stu and @DataChaz for the inspiration for this tutorial!

Now get out there and try it out! Follow me on Twitter and let me know your applications and ideas!

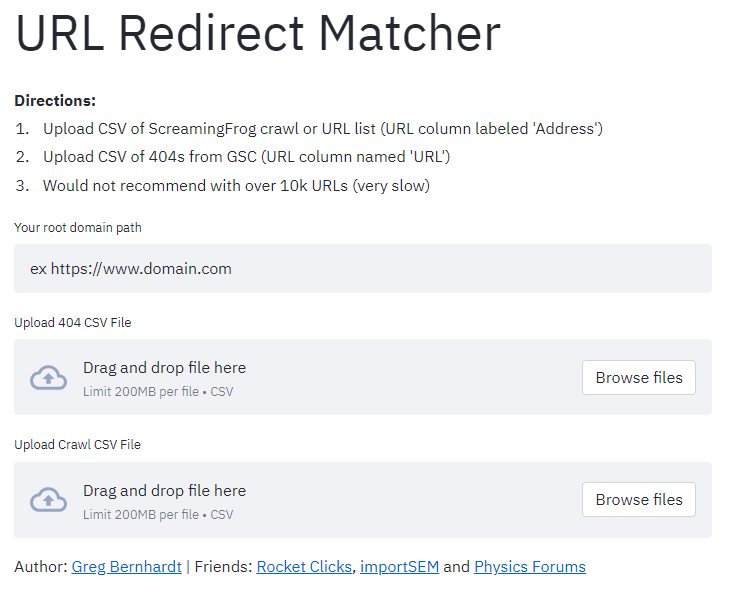

Try out the app!

Direct link to the app:

https://redirect-matcher.herokuapp.com/

PolyFuzz FAQ

- Evaluate Subreddit Posts in Bulk Using GPT4 Prompting - December 12, 2024

- Calculate Similarity Between Article Elements Using spaCy - November 13, 2024

- Audit URLs for SEO Using ahrefs Backlink API Data - November 11, 2024