Automatically Find SEO Interlinking Opportunities with Python

Interlinking has been a core SEO task since the very beginning. The ability to funnel users and PageRank to relevant pages can prove to be a powerful strategy. However, even for a blog with just 100 posts, this can often be a cumbersome task to manage manually. It’s easy to find interlinking opportunities if you have a super tight category and tagging structure, but more times than not, that is not the case. So, how can we use Python to help speed up this process of interlinking discovery?

In this tutorial, I’m going to show you how to create a usable framework for attempting this auto interlinking discovery feat without any machine learning. I do have plans to make this a Streamlit app so stayed tuned! Below is the broad outline:

- Import a URL and title list

- Clean and tokenize the page body text and page title text

- Check to see if any keywords in the title are in the page body text of every other URL

- Store if there are matches

Disclaimer

Now, I will say, this is more of a “beta” script. I’ll be upfront and honest that I expect many Python veterans will scoff at this rather inefficient approach and in fact, I’m not even confident it will be useful for everyone. I do think it’s worth publishing to see where it can be improved, offer to everyone as a starting point, and hoping it is can be an inspiration for something very useful. This script will not work for every site. It works best with a smallish URL list, say under 500 URLs, and with diverse content. If your content is very tightly related, odds are, you’re going to get an unmanageable amount of linking opportunities. With that disclaimer out of the way, let’s dig in!

Table of Contents

Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Cloud Platform (some alliterations will need to be made)

- URL list CSV with URL column labeled “Address” and title column “Title 1” (from ScreamingFrog in this tutorial)

Import Modules

- pandas: for creating dataframes for data storage

- requests: for making HTTP calls to URLs

- bs4: Beatutifulsoup, the defacto HTML/XML parser

- nltk: light natural language toolkit

- nltk.download(‘punkt’): For content tokenizing

- nltk.download(‘averaged_perceptron_tagger’): For tagging word types

- string: helps processing strings and access to useful string functions

- re: for regular expressions

Let’s first import the modules needed for this script expressed above.

import pandas as pd

import requests

from bs4 import BeautifulSoup

import nltk

nltk.download('punkt')

nltk.download('averaged_perceptron_tagger')

import string

import re

Create Word Exclusion List

The list below is what helps make this script become useful. It is the word exclusion list. Often blogs or general sites that have a strong identity will have many common words. We don’t want these common words in our evaluation or everything is going to trigger as an opportunity. Below is the word list I used to process the blog for Rocket Clicks, the SEO agency I work for. These words are found on just about every page. It’s not uncommon for you to have a list 2-3 times as long and need to add as you test the script many times over till you get it useful. This is the most important step to get right or you’ll end up with every URL matching for everything.

exwords = ['company','tips','ad','ads','site','google','ppc','seo','marketing','web','website','search','search engine','rocket','clicks']

URL Page Scraper, Cleaner, and Tokenizer Function

Now we create a function to handle scraping the body content from your URL list, tokenize, clean and then send the content back as a list. We’ll loop in each URL into this function. To start we’ll create an empty list to collect all the links on the URL so we can match them against the URL we’re trying to look for opportunities. Obviously, we don’t want to consider if there is already a link o that page to the URL being processed for. Next, we make the requests HTTP call to the URL and grab the HTML. Then create the BS4 object using that HTML.

def scrapeURL(url): blogtext_links = [] response = requests.get(url).text soup = BeautifulSoup(response, "html.parser")

We continue our function by selecting the text only in the div with class “entry-content clr“. This is specific to the WordPress blog I am using for this script. You must customize this yourself. The point is that we only want to be evaluating the main body of content for that page. In this case, the body of the blog content is in that div. Use inspect tool to find the block that houses your main content and modify the code below. Lastly, we scan that body of content for links and store them in the blogtext_links list we created earlier. We will use that to match against whatever URL we are comparing to and if that URL is already linked, we pass.

bloghtml = soup.find("div", {"class": "entry-content clr"})

blogtext = bloghtml.get_text()

blogtext_links = [a.get('href') for a in bloghtml.findAll('a', href=True)]

The next part is about cleaning and filtering the content to enough to be able to be useful when comparing. To start, we tokenize the body content by sending it through the nltk.tokenize.word_tokenize() function. This places each word as an item in a list. Next, we have several list comprehensions. I fully expect there are much more efficient and intelligent methods, but this is what I came up with and works.

- 1st filters out punctuation from the tokenized list using the string and re modules.

- 2nd sets the words to lowercase as upper and lower cases are considered unique

- 3rd evaluates against the word exclusion list we created. If there is a match, it’s removed.

After the 3rd we have a decent keyword list, but we can do much more. We’ll now use the nltk.pos_tag() function to tag each word with its type. aka, nouns, verbs, adjectives. etc. This creates a list of lists, for example [[‘bear’,’NN][‘Obama’,’NNP’]].

Now we do a couple more comprehensions:

- 4th removes any words from the list that aren’t nouns. Nouns have the tags NN, NNS, NNP, NNPS (for plural and proper nouns). You may want to keep verbs too as sometimes they are descriptively important. You can see a complete list of word type tags here.

- 5th removes the word type tag as it’s no longer needed and makes the list contain singular words.

Next, we rebuild the list and append the URL to the list so we know which page the list of keywords belongs to. Lately, we return the token list for that URL and the list of links on the page.

text_tokens = nltk.tokenize.word_tokenize(blogtext)

text_tokens = [t for t in text_tokens if not re.match('[' + string.punctuation + ']+', t)]

text_tokens = [t.lower() for t in text_tokens]

text_tokens = [t for t in text_tokens if t not in exwords]

text_tokens = nltk.pos_tag(text_tokens)

text_tokens = [x for x in text_tokens if x[1] in ['NN','NNS','NNP','NNPS']]

text_tokens = [x[0] for x in text_tokens]

text_tokens_clean = []

[text_tokens_clean.append(x) for x in text_tokens if x not in text_tokens_clean]

text_tokens_clean.append(url)

return text_tokens_clean, blogtext_links

Page Title Cleaner and Tokenizer Function

Next, we create another function that tokenizes and cleans each of the URL’s titles. They are very similar and perhaps a veteran Python user could suggest a consolidated method, but in the end, this works too. The only real difference is at the end when we append an arbitrary “%” character at the end of the URL matching the title. I do this so that when we compare the title list to the page text list they aren’t canceled out due to the matching URL. We need that URL data as a unique identifier for any given list.

def scrapetitle(title, url):

text_tokens = nltk.tokenize.word_tokenize(title)

text_tokens = [t for t in text_tokens if not re.match('[' + string.punctuation + ']+', t)]

text_tokens = [t.lower() for t in text_tokens]

text_tokens = [t for t in text_tokens if t not in exwords]

text_tokens = nltk.pos_tag(text_tokens)

text_tokens = [x for x in text_tokens if x[1] in ['NN','NNS','NNP','NNPS']]

text_tokens = [x[0] for x in text_tokens]

text_tokens_clean = []

[text_tokens_clean.append(x) for x in text_tokens if x not in text_tokens_clean]

text_tokens_clean.append(url + "%")

return text_tokens_clean

Import URL and Title CSV

Now that the two functions that clean the lists are completed we move to the beginning of the script. A filter list is created to filter page paths in your crawl CSV you don’t want to process. In my case tag and pagination pages. You only want to process pages you want to find interlinking opportunities. Then we load the URL list, only selecting the Address and Title columns, CSV into a dataframe. Then we use that filter list on the Address column. Lastly we covert the dataframe to a dictionary object for easy iteration.

filter = ['/tag/', '/page']

df = pd.read_csv("RC-crawl.csv")[['Address','Title 1']]

df = df[~df['Address'].str.contains('|'.join(filter))].reset_index()

posts = df.to_dict()

Process URLs Page Text

Now it’s time to process the URLs. We set up two empty lists that will house the tokenize page text and one for the links found in that content. Next, we loop through the URLs, send the URL to be processed and return the page text and links list for that URL. Then those lists are added to their respective master lists.

pagetext = [] blogtext_links_master = [] for key,value in posts['Address'].items(): scrapelist, bloglinks = scrapeURL(value) pagetext.append(scrapelist) blogtext_links_master.append(bloglinks)

Process Titles

We do this same process for the URL titles. The only difference is we send the corresponding URL with the title text.

z=0 pagetitle = [] for key,value in posts['Title 1'].items(): pagetitle.append(scrapetitle(value,posts['Address'][z])) z += 1

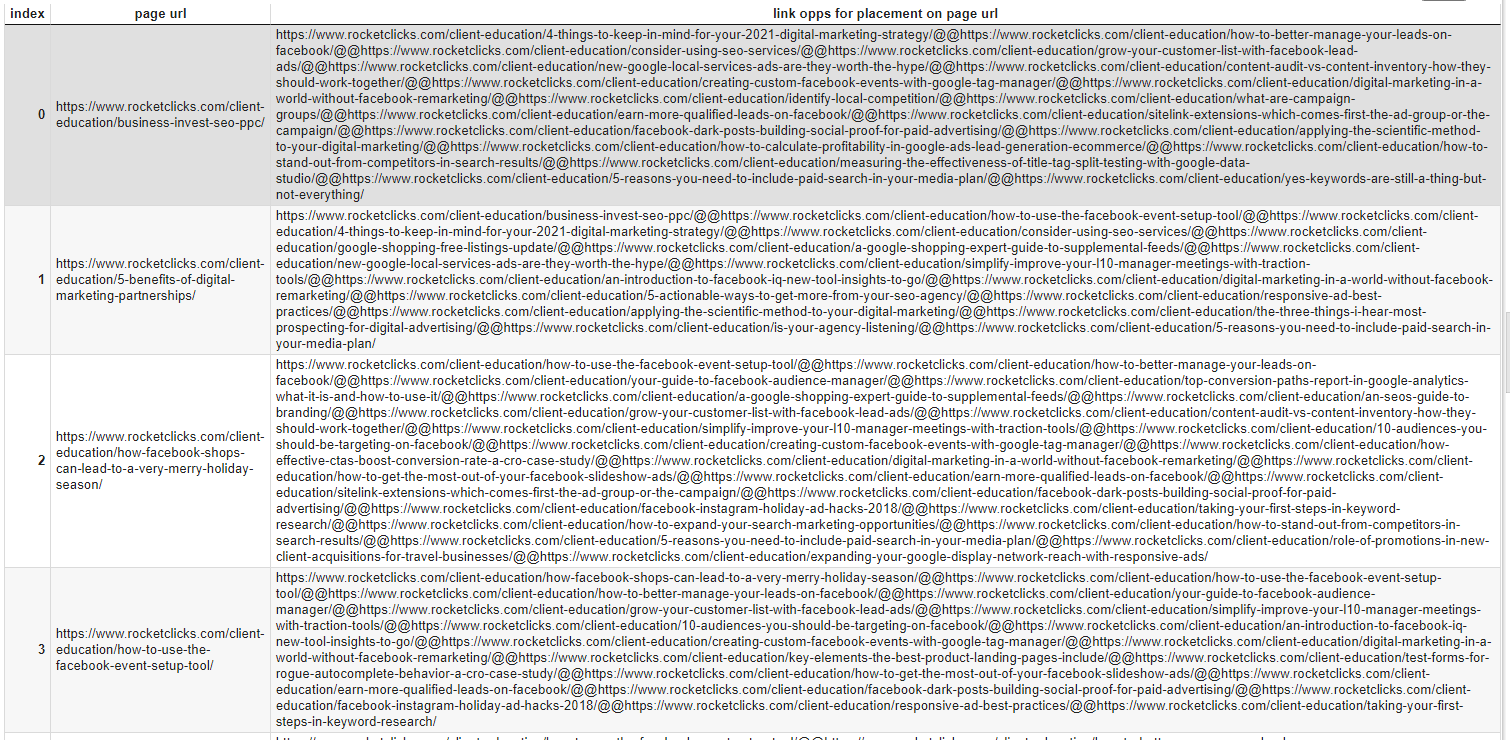

Find Interlinking Opportunities

Here we have the real meat of the script. We first create the empty dataframe that will house the final results and a counter used when seeing if a blog link is already on a given page.

I’ll go through the next part step by step because it’s a little confusing. Overall we’re comparing every title to any given page text.

- Loop through each keyword list in pagetext

- Set a variable to keep track of which pagetext list we’re on

- For any given page text loop through every title and evaluate

- For whatever pagetext we’re on, look at the URL in that list, add a ‘%’ to accurately compare and if it doesn’t match the URL found in the title list, continue

- Compare the two lists, if there is a similarity then continue

- Time to remove the ‘%’ from the pagetitle URL. It’s no longer needed and we don’t want it to show in the final output.

- If the page title URL isn’t already on the page text we’re evaluating then continue

- If this is the first link opportunity add it to the list

- If this isn’t the first link opportunity, find the last row in the list and add the new link opportunity (delineated via “@@”)

df = pd.DataFrame(columns = ['page url', 'link opps for placement on page url'])

r = 0

for i in pagetext:

y = 0

for b in pagetitle:

if i[-1] + '%' != b[-1]:

if bool(set(i) & set(b)) == True:

b[-1] = b[-1].replace('%','')

if b[-1] not in blogtext_links_master[r]:

if y == 0:

df.loc[len(df.index)] = [i[-1], b[-1]]

else:

df['link opps for placement on page url'].iloc[-1] = df['link opps for placement on page url'].iloc[-1] + "@@" + b[-1]

y += 1

r += 1

Export Dataframe to CSV

The final step is saving the dataframe to a CSV file for you to analyze and start interlinking!

df.to_csv("interlink-opps.csv")

Below is a snippet of the output using Rocket Clicks‘s blog URL list. At this point, your job has only just begun. You now have your list of suggested interlinking opportunities. It’s now time to vet them individually and see where they may fit. It’s not uncommon for you to disregard over half of them because of context issues. After all, this script is not intelligent, but in the end, still should save you some time making these considerations manually.

Conclusion

So there you have it! You can now shave off some time in your interlinking tasks. As mentioned in the intro, this script is a work in progress and does require some tweaking to be useful in certain situations. The most important things are to have diverse content, a limited amount of URLs, and a complete word exclusion list. I do have plans to make this a Streamlit app so stayed tuned! I have also thought to run this through Google NLP and match against entities but that can start to become expensive, yet I am still intrigued.

Now get out there and try it out! Follow me on Twitter and let me know your applications and ideas!

Python Interlinking FAQ

- Evaluate Subreddit Posts in Bulk Using GPT4 Prompting - December 12, 2024

- Calculate Similarity Between Article Elements Using spaCy - November 13, 2024

- Audit URLs for SEO Using ahrefs Backlink API Data - November 11, 2024