Any seasoned SEO knows that finding internal linking opportunities at scale can be difficult, but it’s very important. This is especially true if your content is not well organized by topic.

If you have a blog that’s a mess, seemingly full of random articles, and you’re tasked with internally linking them in an intelligent way, then this tutorial is for you!

In this Python SEO tutorial, I’m going to show you line-by-line how to break down each article’s content into entity N-grams and then compare that set of entities to every other article. Articles with high matches are more likely to be good fits for internal linking.


Requirements and Assumptions

- Python 3 is installed and basic Python syntax is understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab

- Be careful when copying the code, as indentation is not always preserved well

Import Modules

- requests: for fetching the web pages and calling the Knowledge Graph API

- pandas: for storing the result data

- nltk: for NLP functions, with the sub-modules and data downloads listed below
  - nltk.download('punkt')
  - from nltk.util import ngrams
  - from nltk.corpus import stopwords
  - nltk.download('stopwords')
  - nltk.download('averaged_perceptron_tagger')

- string: for manipulation of strings

- collections: for item frequency counting

- bs4: for web scraping

- json: for handling API call responses

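```python
import requests
import pandas as pd
import nltk
from nltk.util import ngrams
from nltk.corpus import stopwords
import string
from collections import Counter
from bs4 import BeautifulSoup
import json

# Download the NLTK data used by the tokenizer, stopword filter and POS tagger
nltk.download('punkt')
nltk.download('stopwords')
nltk.download('averaged_perceptron_tagger')
# Newer NLTK releases (3.9+) look up these renamed resources instead
nltk.download('punkt_tab')
nltk.download('averaged_perceptron_tagger_eng')
```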

Import URLs to Dataframe

The first step is to load a CSV of the URLs you want to process. In my case, I used Screaming Frog, so the URL column is titled “Address”; alter as necessary. I would not run this script on an uncleaned URL list, and I would not run it on thousands of pages either: every page is scraped and then compared with every other page, so processing can take a long time.

The URL list should contain only your content pages, such as your blog posts, because, as you’ll see later, we limit where on the page we scrape text from. The pages need to share the same template.

We also create a small punctuation filter, which you can extend with any other characters you want removed during processing, and two empty lists for later on.

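```python
df = pd.read_csv("urls.csv")
urls = df["Address"].tolist()  # "Address" is Screaming Frog's URL column

punct = ["’", "”"]  # extra characters to filter out; add your own

ngram_count = []
ngram_dedupe = []  # one keyword list per page, with its URL as the last item
```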
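What counts as a “cleaned” URL list depends on your site. As a minimal sketch, assuming your articles all live under a hypothetical /blog/ path, you could keep only http(s) URLs under that prefix, strip query strings and fragments, and drop duplicates before processing. The helper name clean_url_list and the /blog/ prefix are illustrative assumptions, not part of the original script.

```python
from urllib.parse import urlsplit


def clean_url_list(urls, path_prefix="/blog/"):
    """Hypothetical helper: keep http(s) URLs under path_prefix, drop query
    strings and fragments, and remove duplicates while preserving order."""
    cleaned = []
    seen = set()
    for raw in urls:
        parts = urlsplit(raw.strip())
        if parts.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, relative paths, etc.
        if not parts.path.startswith(path_prefix):
            continue  # skip pages outside the content section (assumed prefix)
        # Rebuild the URL without ?query or #fragment so each article appears once
        normalized = f"{parts.scheme}://{parts.netloc}{parts.path}"
        if normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)
    return cleaned
```

You would then run urls = clean_url_list(urls) before the main loop, adjusting path_prefix to match wherever your articles actually live.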

N-gram Function

Next, we create our N-gram function. It uses the NLTK library to split webpage text into successive N-word combinations. The variable num is N: set num to 1 to generate 1-grams, 2 to generate 2-grams, and so on.

```python
def extract_ngrams(data, num):
    # Tokenize the text, then join each run of num consecutive tokens with spaces
    n_grams = ngrams(nltk.word_tokenize(data), num)
    gram_list = [' '.join(grams) for grams in n_grams]
    return gram_list
```
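To see what num does without installing NLTK data, here’s a dependency-free sketch that mimics extract_ngrams. It is an illustration only: simple_ngrams is a hypothetical name, and it splits on whitespace instead of using nltk.word_tokenize, which also splits off punctuation, so real output will differ on punctuated text.

```python
def simple_ngrams(text, num):
    """Illustrative stand-in for extract_ngrams: split on whitespace, then
    join each window of num consecutive words with a space."""
    words = text.split()
    # A sliding window of width num; yields nothing if num > len(words)
    return [" ".join(words[i:i + num]) for i in range(len(words) - num + 1)]


print(simple_ngrams("internal linking at scale", 1))
# ['internal', 'linking', 'at', 'scale']
print(simple_ngrams("internal linking at scale", 2))
# ['internal linking', 'linking at', 'at scale']
```

Note that 2-grams overlap: each word (except the first and last) appears in two of them, which is why the N-gram list grows quickly with text length.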

Google Knowledge Graph API

Now we set up a function that sends each N-gram in our list to the Google Knowledge Graph Search API to determine whether it’s an entity. This cleans up the N-gram list and ensures we’re matching words that are topical and contextually important. You can create a free API key in the Google Cloud console if you don’t already have one. The function simply loops through the N-gram list and sends each item to the API. If we get a hit, we know it’s an entity and add it to a new list we’ll use later; if not, it’s not an entity and we throw it out.

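```python
def kg(keywords):
    """Return only the keywords the Knowledge Graph Search API matches to an entity."""
    kg_entities = []
    apikey = ''  # paste your Knowledge Graph Search API key here

    for x in keywords:
        # Passing params lets requests URL-encode the query safely
        params = {'query': x, 'key': apikey, 'limit': 1, 'indent': True}
        response = requests.get('https://kgsearch.googleapis.com/v1/entities:search',
                                params=params, timeout=30)
        data = json.loads(response.text)
        try:
            # A first result with an @type means the API recognized the term
            data['itemListElement'][0]['result']['@type']
            kg_entities.append(x)
        except (KeyError, IndexError):
            pass  # no hit, so not an entity: throw it out

    return kg_entities
```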
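The hit test inside kg() can be separated from the network call so you can check it without an API key. This is a minimal sketch: build_kg_url and is_entity are hypothetical helper names, and the sample responses in the usage note are hand-written and trimmed (real responses include more fields), shaped like the JSON the loop above reads.

```python
from urllib.parse import urlencode

KG_ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_kg_url(term, api_key, limit=1):
    """Build the request URL with the query percent-encoded (spaces become '+')."""
    return KG_ENDPOINT + "?" + urlencode({"query": term, "key": api_key, "limit": limit})


def is_entity(data):
    """Mirror kg()'s test: a hit is a first result that carries an @type."""
    try:
        data["itemListElement"][0]["result"]["@type"]
        return True
    except (KeyError, IndexError, TypeError):
        return False
```

For example, build_kg_url("search engine", "MY_KEY") produces a URL ending in query=search+engine&key=MY_KEY&limit=1, and is_entity({"itemListElement": [{"result": {"@type": ["Thing"]}}]}) returns True while is_entity({"itemListElement": []}) returns False. Encoding the query matters once you move to 2-grams or 3-grams, because those contain spaces.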

I’m leaving this next series of steps as one large block of code so you can see everything that happens in one place, and I’ll describe it chunk by chunk. In general, this is where we loop through each URL, scrape the HTML, and then process it.

Chunk 1

Here we use BS4 to parse the HTML we fetched from the URL. To capture the important context of the page, we prune the elements that are not topical, usually boilerplate areas like the header, footer, and sidebar. Then we select only the text from the parent div (or other element) that contains the main body content using soup.find("div",{"class":"entry-content"}). You’ll want to edit this to match your template; if your site runs on WordPress, that selector will likely work as-is. Finally, we lowercase all the text and collapse runs of spaces and line breaks so the text becomes a single line.
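If you want to see the pruning logic in isolation, here is a dependency-free sketch using Python’s built-in html.parser instead of BeautifulSoup. This is a swapped-in technique, not the tutorial’s code: MainContentExtractor and extract_main_text are hypothetical names, and it assumes the main content sits in a div with the entry-content class, skipping the same kinds of boilerplate tags the loop decomposes.

```python
from html.parser import HTMLParser

# Tags whose text is skipped inside the content container (void tags like
# input and meta carry no text, so they need no special handling here)
SKIP_TAGS = {"script", "noscript", "nav", "style", "label", "header",
             "footer", "aside", "h1", "a", "table", "button"}


class MainContentExtractor(HTMLParser):
    """Collect text inside <div class="container_class">, minus SKIP_TAGS."""

    def __init__(self, container_class="entry-content"):
        super().__init__()
        self.container_class = container_class
        self.div_depth = 0   # > 0 while inside the container div
        self.skip_depth = 0  # > 0 while inside a skipped tag
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "div":
            if self.div_depth:
                self.div_depth += 1  # nested div inside the container
            elif self.container_class in (dict(attrs).get("class") or "").split():
                self.div_depth = 1   # entered the container
        elif self.div_depth and tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "div" and self.div_depth:
            self.div_depth -= 1
        elif self.div_depth and tag in SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.div_depth and not self.skip_depth:
            self.chunks.append(data)


def extract_main_text(html, container_class="entry-content"):
    parser = MainContentExtractor(container_class)
    parser.feed(html)
    parser.close()
    # Lowercase, then collapse every run of whitespace into a single space
    return " ".join(" ".join(parser.chunks).lower().split())
```

Joining chunks with a space avoids gluing words from adjacent blocks together, at the cost of occasionally splitting a word that is broken across inline tags.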

Chunk 2

Next, we send the text we just cleaned to the NLTK N-gram function to build a list of N-grams of whatever length we choose. The code below uses 1, but you could change it to, say, 2. Then we clean that N-gram list further, filtering out punctuation, any N-grams containing colons, and any items that are stopwords.
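The Chunk 2 filters can be sketched as one function so the rules are easy to test. This assumes a small hand-written stopword set for illustration (the real script uses NLTK’s English list), clean_ngrams is a hypothetical name, and punctuation is detected character by character rather than with the original’s x not in string.punctuation substring check.

```python
import string


def clean_ngrams(grams, stop_words, extra_punct=("’", "”")):
    """Lowercase each N-gram and drop it if it is punctuation-only, contains
    a colon, is a stopword, or is one of the extra punctuation characters."""
    cleaned = []
    for gram in grams:
        gram = gram.lower()
        if all(ch in string.punctuation for ch in gram):
            continue  # punctuation-only (or empty) token
        if ":" in gram:
            continue  # e.g. "seo:" or URL fragments like "https:"
        if gram in stop_words or gram in extra_punct:
            continue
        cleaned.append(gram)
    return cleaned


# Hypothetical sample; in the real script stop_words comes from NLTK
sample = ["Python", ",", "the", "seo:", "’", "linking"]
print(clean_ngrams(sample, {"the"}))  # ['python', 'linking']
```

The curly quotes need their own filter because string.punctuation only covers ASCII characters.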

Chunk 3

This section is more list cleaning. We start by removing duplicate list items and any items that are not strings. Then we filter out part-of-speech (POS) tags for word types that, by definition, couldn’t be entities. NLTK’s pos_tag uses the Penn Treebank tag set, which is where acronyms like CD (cardinal number), RB (adverb), and DT (determiner) come from.
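Here is a minimal sketch of the Chunk 3 logic with the tagger passed in as a parameter, so it can be tried without NLTK data. dedupe_and_filter and lookup_tagger are hypothetical names, and the sample tags are written by hand rather than produced by nltk.pos_tag; in the real script you would pass nltk.pos_tag as tag_fn.

```python
EXCLUDED_TAGS = {"CD", "RB", "JJS", "IN", "MD", "WDT", "DT", "RBR", "VBZ", "WP"}


def dedupe_and_filter(tokens, tag_fn):
    """Dedupe tokens (keeping first-seen order), drop non-strings, tag them
    with tag_fn, and keep only words whose POS tag is not excluded."""
    unique = [t for t in dict.fromkeys(tokens) if isinstance(t, str)]
    tagged = tag_fn(unique)  # expected: list of (word, tag) pairs
    return [word for word, tag in tagged if tag not in EXCLUDED_TAGS]


# Hand-written tags for illustration only
SAMPLE_TAGS = {"python": "NN", "quickly": "RB", "2024": "CD", "linking": "VBG"}


def lookup_tagger(words):
    return [(w, SAMPLE_TAGS.get(w, "NN")) for w in words]


print(dedupe_and_filter(["python", "quickly", "python", "2024", "linking", 42], lookup_tagger))
# ['python', 'linking']
```

Using dict.fromkeys keeps the original word order, unlike list(set(...)); order doesn’t affect the match counts later, but it makes the output easier to read.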

Chunk 4

In this last step, we add the page’s URL as the last item of the newly cleaned list and add that list to our master list.

```python
stop_words = set(stopwords.words('english'))  # build once, not per page

for url in urls:
    res = requests.get(url, timeout=30)
    html_page = res.text

    # CHUNK 1: strip boilerplate elements, keep only the main body text
    soup = BeautifulSoup(html_page, 'html.parser')
    for tag in soup(["script", "noscript", "nav", "style", "input", "meta", "label",
                     "header", "footer", "aside", "h1", "a", "table", "button"]):
        tag.decompose()
    text = soup.find("div", {"class": "entry-content"})  # edit this to match your template
    if text is None:
        continue  # content container not found on this page; skip it
    page_text = text.get_text().lower()
    # Collapse every run of spaces/newlines into one space (a single line of text)
    text_content1 = " ".join(page_text.split())

    # CHUNK 2: build 1-grams, then drop punctuation, colons, stopwords
    keywords = extract_ngrams(text_content1, 1)
    keywords = [x.lower() for x in keywords if x not in string.punctuation]
    keywords = [x for x in keywords if ":" not in x]
    keywords = [x for x in keywords if x not in stop_words]
    keywords = [x for x in keywords if x not in punct]

    # CHUNK 3: dedupe, drop non-strings, remove POS tags that can't be entities
    keywords_dedupe = list(set(keywords))
    tokens = [x for x in keywords_dedupe if isinstance(x, str)]
    keywords = nltk.pos_tag(tokens)
    keywords = [x for x in keywords
                if x[1] not in ['CD', 'RB', 'JJS', 'IN', 'MD', 'WDT', 'DT', 'RBR', 'VBZ', 'WP']]
    keywords = [x[0] for x in keywords]
    # Keep only terms the Knowledge Graph API recognizes (one API call per term)
    keywords = kg(keywords)

    # CHUNK 4: store the page's list with its URL as the last item
    keywords.append(url)
    ngram_dedupe.append(keywords)
```

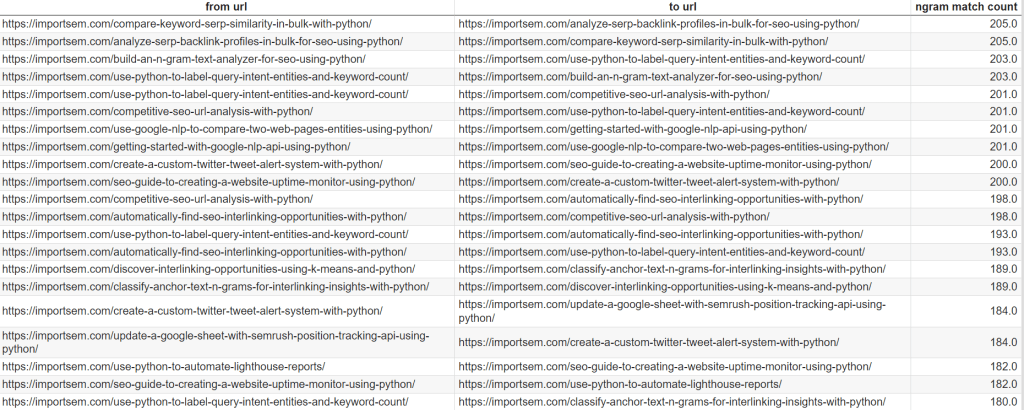

Now we build a DataFrame to store our internal linking opportunities. We compare each URL with every other URL, skipping comparisons of a page with itself, and record one row per pair: the from URL, the to URL, and the number of shared N-grams (the shared set is held in ngram_matchs).

```python
rows = []

for x in ngram_dedupe:
    item_url = x[-1]  # the URL was appended as the last list item
    for y in ngram_dedupe:
        item_url2 = y[-1]
        if item_url != item_url2:  # don't compare a page with itself
            ngram_matchs = set(x).intersection(y)
            rows.append({"from url": item_url, "to url": item_url2,
                         "ngram match count": len(ngram_matchs)})

# DataFrame.append was removed in pandas 2.0, so build the frame from the rows
df2 = pd.DataFrame(rows, columns=['from url', 'to url', 'ngram match count'])
```
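The same pairwise comparison can be written more compactly with itertools.permutations, which yields every ordered pair of distinct URLs, so both directions (A to B and B to A) are scored just as in the nested loops. This is a sketch, assuming each page’s terms are already stored in a dict mapping URL to a set of terms; rank_link_pairs is a hypothetical name and the sample URLs are made up.

```python
from itertools import permutations


def rank_link_pairs(entities_by_url):
    """Count shared terms for every ordered (from, to) pair of distinct URLs,
    highest overlap first."""
    rows = []
    for src, dst in permutations(entities_by_url, 2):
        shared = entities_by_url[src] & entities_by_url[dst]
        rows.append((src, dst, len(shared)))
    # sorted() stays stable with reverse=True, so ties keep permutation order
    return sorted(rows, key=lambda row: row[2], reverse=True)


pages = {
    "/a": {"python", "seo", "ngram"},
    "/b": {"python", "seo"},
    "/c": {"recipes"},
}
for row in rank_link_pairs(pages)[:2]:
    print(row)
# ('/a', '/b', 2)
# ('/b', '/a', 2)
```

Storing sets per URL, instead of lists with the URL appended at the end, also keeps the URL itself out of the intersection.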

All that’s left is to sort and display the results and, optionally, save them as a CSV. The higher the match count, the more likely there is a topical interlinking opportunity between the two pages.

```python
df2 = df2.sort_values(by=['ngram match count'], ascending=False)
df2.to_csv('ngram_interlinking.csv')
df2
```

Example Output

Conclusion

Internal linking at scale, especially on large, unstructured websites, can be a real challenge. The framework above can help you uncover opportunities across your content. Remember to keep extending the scripts and customizing them to your own needs. This is just the beginning.

Now get out there and try it out! Follow me on Twitter and let me know your Python SEO applications and ideas!

N-Gram Interlinking SEO FAQ

How can Python be utilized to find interlinking opportunities based on entity N-gram matches?

Python scripts can be developed to analyze content, identify entities using N-grams, and discover interlinking opportunities by recognizing commonalities between entities.

Which Python libraries are commonly used for finding interlinking opportunities with entity N-gram matches?

This tutorial uses nltk for natural language processing, requests and BeautifulSoup for fetching and parsing pages, and pandas for data manipulation. spaCy is another commonly used library for entity recognition.

What specific steps are involved in using Python for finding interlinking opportunities via entity N-gram matches?

The process includes fetching and processing content, applying NLP techniques for entity recognition using N-grams, and using Python for analysis to identify potential interlinking opportunities.

Are there any considerations or limitations when using Python for this purpose?

Consider the diversity of content, the choice of N-gram lengths, and the need for a clear understanding of the goals and criteria for identifying interlinking opportunities. Regular updates to the analysis may be necessary.

Where can I find examples and documentation for finding interlinking opportunities with Python and entity N-gram matches?

Explore online tutorials, documentation for relevant Python libraries, and resources specific to natural language processing and interlinking strategies for practical examples and detailed guides on using Python for this purpose.
