In this tutorial, we will explore a Python script designed to scrape and analyze YouTube video metadata for free. This framework is a good starting point for a tool that assists SEOs, content creators, and data analysts.

Note that paid APIs such as SerpAPI can process requests faster and more reliably, but they are not free. If you are okay with waiting a bit longer for a free solution, this script is for you. Otherwise, I’ll be coming out with a new tutorial soon showing how to use SerpAPI.

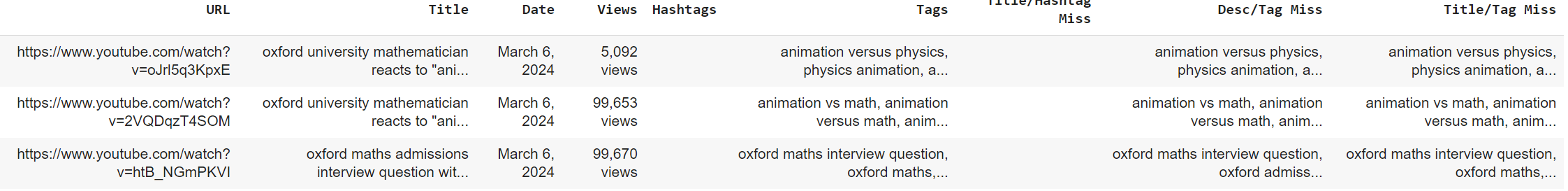

The data we’ll be scraping and storing is: ‘URL’, ‘Title’, ‘Date’, ‘Views’, ‘Hashtags’, ‘Tags’, ‘Title/Hashtag Miss’, ‘Desc/Tag Miss’, ‘Title/Tag Miss’. So we collect some basic data, and we also check whether our tags and hashtags appear in the title or video description. The three ‘Miss’ columns list the ones that do not, which points to weaker relevance strength.

An important step is setting up a random crawl delay. This helps prevent Google from detecting a bot and blocking it. The downside is that the delay is between 10 and 21 seconds per request, so the crawl can take some time depending on your video count.

This script is just scratching the surface of what you can scrape and analyze. Take this framework and build off it as you need.

Requirements and Assumptions

- Python 3 is installed and basic Python syntax is understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab

- Be careful with copying the code as indents are not always preserved well

Setting Up the Environment

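Before diving into the code, we need to install a required module:

    pip3 install fake_useragent

This line installs the fake_useragent package, which generates realistic browser user-agent strings so that a script looks like a real user when making HTTP requests. Note that the code as listed in this tutorial does not import fake_useragent; it sets a fixed Chrome user-agent string instead. The script also depends on beautifulsoup4, requests, pandas, and numpy. These are typically preinstalled on Google Colab; elsewhere, install them with pip3 as well. If you’re using a notebook like Google Colab or Jupyter, put an exclamation mark at the beginning of the command.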
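The script in this tutorial hard-codes its user-agent header. If you would rather have fake_useragent pick one, a minimal sketch could look like the following. `build_headers` and `FALLBACK_UA` are hypothetical names introduced here for illustration, and the fallback simply reuses the fixed string from the tutorial when the package is unavailable.

```python
# Fallback matches the fixed user-agent string used later in this tutorial.
FALLBACK_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"
)


def build_headers():
    """Hypothetical helper: return request headers with a browser-like user agent."""
    try:
        from fake_useragent import UserAgent
        user_agent = UserAgent().random  # a randomly chosen browser string
    except Exception:
        # Package missing or its data could not be loaded: use the fixed string.
        user_agent = FALLBACK_UA
    return {"user-agent": user_agent}


headers = build_headers()
print(headers["user-agent"])
```

The returned dictionary can be passed to `requests.get(url, headers=headers)` in place of the fixed header.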

Importing Libraries

The script imports several Python libraries essential for its operation:

    from bs4 import BeautifulSoup
    import requests
    import pandas as pd
    import time
    import re
    import numpy as np

- bs4 (BeautifulSoup) is used for web scraping.

- requests allows the script to send HTTP requests.

- pandas is essential for data manipulation and analysis.

- time is used to handle time-related tasks.

- re provides regular expression matching operations.

- numpy supports large, multi-dimensional arrays and matrices.

Scrape Metadata Using RegEx and BS4

The get_youtube_info function is the core of the script:

    def get_youtube_info(url, crawl_delay):
        """Fetch one video page and return its tags, title, date, description, views, and hashtags."""
        header = {"user-agent": 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36'}

        # Pause before each request so the crawl does not hit YouTube in bursts.
        time.sleep(crawl_delay)
        request = requests.get(url, headers=header, verify=True)
        soup = BeautifulSoup(request.content, "html.parser")

        tags = soup.find_all("meta", property="og:video:tag")
        titles = soup.find("title").text.lower()

        # re.search returns None when nothing matches, so .group() raises AttributeError.
        try:
            # Non-greedy match that stops at the first unescaped quote.
            getdesc = re.search(r'description":\{"simpleText":"(.*?)(?<!\\)"', request.text)
            desc = getdesc.group(1).lower()
            # The page source stores quotes and line breaks as JSON escapes.
            desc = desc.replace('\\"', '').replace('\\n', ' ')
        except AttributeError:
            desc = "n/a"

        try:
            # Month name or abbreviation, day, comma, four-digit year, e.g. "Jan 4, 2024".
            getdate = re.search(r'[a-zA-Z]{3,9}\s[0-9]{1,2},\s[0-9]{4}', request.text)
            vid_date = getdate.group(0)
        except AttributeError:
            vid_date = "n/a"

        try:
            getviews = re.search(r'[0-9,]+\sviews', request.text)
            vid_views = getviews.group(0)
        except AttributeError:
            vid_views = "n/a"

        try:
            # Hashtags that follow a line break, up to the "isCrawlable" key.
            hashtags = re.search(r'n#.*","isC', request.text)
            hashtags = hashtags.group(0).lower()
            hashtags = hashtags.replace('","isc', '').replace('n#', '#')
        except AttributeError:
            hashtags = ''

        return tags, titles, vid_date, desc, vid_views, hashtags

It takes a YouTube video URL and a crawl delay as arguments, sets a browser-like user-agent header, and sleeps for the crawl delay before sending the request. It then extracts the tags and title with BeautifulSoup, and the description, date, views, and hashtags with regex. Any field whose pattern is not found falls back to "n/a" (or an empty string for hashtags).
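To see why each regex lookup sits inside a try block, it helps to run the patterns against a small string. The sketch below uses an invented fragment in place of the real page source, and its date pattern accepts a month of 3 to 9 letters, which is an assumption about how the date appears on the page. The key point is that re.search returns None when nothing matches, so calling .group() on the result would raise an error.

```python
import re

# Invented fragment standing in for request.text; real pages are far larger.
sample = (
    '"viewCount":{"simpleText":"12,345 views"},'
    '"dateText":{"simpleText":"Jan 4, 2024"},'
    '"description":{"simpleText":"Learn Python SEO\\n#python #seo"},'
)

views = re.search(r'[0-9,]+\sviews', sample)
date = re.search(r'[a-zA-Z]{3,9}\s[0-9]{1,2},\s[0-9]{4}', sample)
missing = re.search(r'[0-9,]+\slikes', sample)  # a pattern that is not present

print(views.group(0))  # 12,345 views
print(date.group(0))   # Jan 4, 2024
print(missing)         # None
```

Because these patterns depend on how YouTube happens to format its page source, expect to adjust them when a field starts coming back as "n/a".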

Find Tag Matches in Title and Desc

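This function checks which tags are missing from a piece of text, such as the title or the description. It returns a comma-separated string of the tags that were not found, which is what fills the ‘Miss’ columns. This helps determine relevance strength within the video page content:

    def tag_matches(text, vid_tag_list):
        """Return a comma-separated string of the tags NOT found in text."""
        misses = ""
        for x in vid_tag_list:
            # Normalize so " SEO" and "seo" both compare against the lowercased text.
            tag = x.strip().lower()
            if tag and text.find(tag) == -1:
                misses += tag + ","
        return misses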
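A short worked example makes the "miss" logic concrete. The sketch below is a hypothetical variant named `find_missing_tags` that returns a list instead of a comma-separated string, and it lowercases and strips each tag before comparing. The title and tags are invented.

```python
def find_missing_tags(text, tags):
    """Hypothetical variant of tag_matches that returns a list of missing tags."""
    text = text.lower()
    missing = []
    for tag in tags:
        tag = tag.strip().lower()
        # Skip empty entries and record tags that do not occur in the text.
        if tag and tag not in text:
            missing.append(tag)
    return missing


title = "Python SEO Tutorial: Scraping YouTube Metadata"
tags = ["python", "SEO", " web scraping", "youtube"]

print(find_missing_tags(title, tags))  # ['web scraping']
```

This is a plain substring check, so a tag like "seo" would also count as present inside a longer word that contains it.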

Reading YouTube URLs and Setting Delays

The script reads a list of YouTube URLs from a CSV file named urls.csv, which needs a column named url, and builds the list of possible crawl delays:

    df = pd.read_csv("urls.csv")
    urls_list = df['url'].to_list()

    # Possible delays in seconds: 10 through 21.
    delays = list(range(10, 22))

This step helps the script mimic human behavior and avoid anti-bot mechanisms. Note that this can cause a long processing time if you are running against a long URL list: the wait is between 10 and 21 seconds per request.
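To get a feel for how long a crawl will take, you can estimate the total sleep time from the delay bounds. The sketch below uses the standard library's random module rather than numpy, and `estimate_wait_seconds` is a hypothetical helper. It counts only the delays, not the time the requests themselves take.

```python
import random

MIN_DELAY, MAX_DELAY = 10, 21  # seconds, the same bounds as range(10, 22)


def estimate_wait_seconds(url_count):
    """Hypothetical helper: average total sleep time for url_count URLs."""
    average_delay = (MIN_DELAY + MAX_DELAY) / 2  # 15.5 seconds
    return url_count * average_delay


crawl_delay = random.randint(MIN_DELAY, MAX_DELAY)  # inclusive on both ends
print(crawl_delay)
print(estimate_wait_seconds(200) / 60)  # roughly 51.7 minutes of sleeping
```

For 200 URLs, that is a bit under an hour spent waiting, which is the trade-off for not paying for an API.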

Creating the DataFrame

A DataFrame df2 is created for organizing the scraped data:

    df2 = pd.DataFrame(columns=['URL', 'Title', 'Date', 'Views', 'Hashtags', 'Tags',
                                'Title/Hashtag Miss', 'Desc/Tag Miss', 'Title/Tag Miss'])

Looping Through URLs

The script loops through each URL, scrapes the page, checks which tags and hashtags are missing from the title and description, and does some light data cleaning:

    for x in urls_list:
        # Pick a random delay for this request.
        crawl_delay = np.random.choice(delays)
        vid_tags, title, vid_date, desc, views, hashtags = get_youtube_info(x, crawl_delay)

        # Join the og:video:tag values into one comma-separated string.
        vid_tag_list = ", ".join(i['content'] for i in vid_tags)

        desc_tag_matches = tag_matches(desc, vid_tag_list.split(','))
        title_tag_matches = tag_matches(title, vid_tag_list.split(','))
        title_hashtag_matches = tag_matches(title, hashtags.replace('#', '').split(' '))

        # The title was lowercased in get_youtube_info, so strip the lowercase suffix.
        title = title.replace(' - youtube', '')

Compiling the Results

Each video’s data is compiled into a dictionary and appended to the DataFrame. These lines are still inside the for loop:

        dict1 = {'URL': x, 'Title': title, 'Date': vid_date, 'Views': views, 'Hashtags': hashtags, 'Tags': vid_tag_list, 'Title/Hashtag Miss': title_hashtag_matches, 'Desc/Tag Miss': desc_tag_matches, 'Title/Tag Miss': title_tag_matches}
        df2 = pd.concat([df2, pd.DataFrame([dict1])], ignore_index=True)

Final DataFrame

The script ends with a DataFrame containing all the extracted and processed data, ready for analysis. In a notebook, evaluating df2 on its own displays the table:

    df2

Then the data is exported to a CSV file:

    df2.to_csv("vid-list.csv")
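The Views and Date columns hold the raw strings that the regex matched, which makes sorting and filtering awkward. As a possible next step, the sketch below turns them into numbers and dates. `parse_views` and `parse_date` are hypothetical helpers, and the date format assumes English abbreviated month names such as "Jan", so adjust it if your pages show dates differently.

```python
from datetime import datetime


def parse_views(raw):
    """Turn a string like '12,345 views' into an int, or None for 'n/a'."""
    digits = raw.replace(" views", "").replace(",", "")
    return int(digits) if digits.isdigit() else None


def parse_date(raw, fmt="%b %d, %Y"):
    """Parse a string like 'Jan 4, 2024'; the format string is an assumption."""
    try:
        return datetime.strptime(raw, fmt).date()
    except ValueError:
        # Covers 'n/a' and any date written in another format.
        return None


print(parse_views("12,345 views"))  # 12345
print(parse_views("n/a"))           # None
print(parse_date("Jan 4, 2024"))    # 2024-01-04
print(parse_date("n/a"))            # None
```

With pandas, these could be applied per column, for example `df2['Views'].apply(parse_views)`.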

Conclusion

This Python script automates the process of data extraction and analysis for YouTube metadata, providing insights into content performance. It’s valuable for content creators and SEO marketers looking to optimize their YouTube strategy.

Now get out there and try it out! Follow me on Twitter and let me know your Python SEO applications and ideas!

FAQ: Analyzing YouTube Metadata with Python

What is the purpose of this Python script for YouTube metadata analysis?

This script is designed to scrape and analyze metadata from YouTube videos. It’s useful for SEOs, content creators, and data analysts who want to understand video performance metrics like tags, titles, descriptions, upload dates, view counts, and hashtags.

Why do we need to install fake_useragent?

The fake_useragent package generates realistic browser user-agent strings. Sending one with each request makes the script’s HTTP requests look as if they come from a real browser, which reduces the chances of being blocked by YouTube’s anti-scraping measures. The listing in this tutorial sets a fixed Chrome user-agent string for the same purpose.

What role does BeautifulSoup play in this script?

BeautifulSoup is a Python library for parsing HTML and XML documents. It is used in this script to parse and extract data from the HTML content of YouTube pages, such as video tags, titles, and other metadata.
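To show what that tag extraction is looking for, here is a sketch that pulls the og:video:tag values out of a tiny HTML fragment. It uses the standard library's html.parser as a stand-in for BeautifulSoup so it runs without extra installs. `VideoTagParser` is a hypothetical class and the HTML is invented.

```python
from html.parser import HTMLParser


class VideoTagParser(HTMLParser):
    """Collect the content of every <meta property="og:video:tag"> element."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and attributes.get("property") == "og:video:tag":
            self.tags.append(attributes.get("content", ""))


# Invented HTML fragment; a real watch page has many more elements.
sample_html = """
<html><head>
<title>Python SEO Tutorial - YouTube</title>
<meta property="og:video:tag" content="python">
<meta property="og:video:tag" content="seo">
<meta property="og:title" content="Python SEO Tutorial">
</head></html>
"""

parser = VideoTagParser()
parser.feed(sample_html)
print(parser.tags)  # ['python', 'seo']
```

In the script itself, `soup.find_all("meta", property="og:video:tag")` does the same job in one line, and each tag value is read with `i['content']`.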

How does the script ensure it adheres to YouTube’s crawling policies?

The script includes a crawl delay, implemented using the time.sleep function, to respect YouTube’s server load and abide by good web scraping practices. This delay is randomized to mimic human browsing patterns.

What data does the script extract from YouTube videos?

The script extracts various pieces of metadata including video tags, titles, descriptions, upload dates, view counts, and hashtags.

This FAQ and the Hero image were AI-assisted

- Scraping YouTube Video Page Metadata with Python for SEO - January 4, 2024