Detect Web Page Technologies with BuiltWith API and Python

For SEO audits, one thing you may want to do is detect and store the technologies a website is using. Sure, you can spot-check and run a few console commands, but what if an API could do it all for you? BuiltWith has exactly that capability. BuiltWith offers several interesting APIs worth exploring, but this tutorial focuses on the “Domain API”. This API returns a list of the technologies detected on a website, along with details such as DNS. For example: is the site using PHP, GTM, jQuery, Google Fonts, WordPress, IPv6, or any of hundreds more?

Once you know which technologies a site uses, you understand its environment better and are better prepared to tackle its issues. BuiltWith also returns some additional information, such as domain ranking scores and detected social accounts. See the full API documentation for all of its capabilities.

Note that BuiltWith has a free web interface that shows this information. As always, the advantage of using the API is that it frees the data for you to store, scale, manipulate, visualize, and automate.

Also note that BuiltWith offers a free API with limited functionality, but most of its APIs, including the Domain API used in this tutorial, require credits. In general, I find the pricing fairly reasonable; I believe it is 2,000 API calls for $100.
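Before running lookups in bulk, it can help to estimate spend. This is a minimal sketch: the defaults simply encode the "2,000 calls for $100" figure recalled above, the idea that credits come in fixed-size blocks is an assumption, and `estimate_builtwith_cost` is a hypothetical helper, so plug in BuiltWith's current pricing.

```python
import math

def estimate_builtwith_cost(num_lookups, bundle_price=100.0, calls_per_bundle=2000):
    """Rough spend estimate for a number of Domain API lookups.

    Assumption: defaults reflect the recalled price of 2,000 calls for $100;
    replace them with BuiltWith's current pricing.
    """
    cost_per_call = bundle_price / calls_per_bundle  # $0.05 per call with the defaults
    bundles_needed = math.ceil(num_lookups / calls_per_bundle)  # whole blocks of credits
    return num_lookups * cost_per_call, bundles_needed

cost, bundles = estimate_builtwith_cost(500)
print(f"500 lookups ~ ${cost:.2f} of credit, {bundles} bundle(s)")
```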


Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab

Import Modules

- json: for handling the API response

- requests: for making the API call

import json
import requests

Build API Call

Now that the modules are installed and imported, we set the domain variable to the site domain you want to check and use it to build the API call, which we store in the variable url. The API accepts several parameters (see the BuiltWith documentation), but we only need three:

- key: your API key for authentication

- liveonly: return only current technologies rather than historical ones (historical data is also available)

- lookup: the site domain you want to check

Be sure to replace YOUR_API_KEY in the URL with your own API key.

domain = "rocketclicks.com"
url = "https://api.builtwith.com/v14/api.json?KEY=YOUR_API_KEY&liveonly=yes&LOOKUP=" + domain
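Rather than concatenating strings and hardcoding the key, one option is to read the key from an environment variable and let `urlencode` build the query string. This is a minimal sketch: the `BUILTWITH_API_KEY` variable name and the `build_domain_api_url` helper are hypothetical, and it keeps the v14 endpoint and the KEY, LOOKUP, and liveonly parameters used above, so check BuiltWith's documentation for the current version.

```python
import os
from urllib.parse import urlencode

# Hypothetical env var name; falls back to the placeholder used in the tutorial
API_KEY = os.environ.get("BUILTWITH_API_KEY", "YOUR_API_KEY")

def build_domain_api_url(domain, api_key, live_only=True):
    """Build the Domain API URL with properly encoded query parameters."""
    params = {"KEY": api_key, "LOOKUP": domain}
    if live_only:
        params["liveonly"] = "yes"  # current technologies only
    return "https://api.builtwith.com/v14/api.json?" + urlencode(params)

url = build_domain_api_url("rocketclicks.com", API_KEY)
```

As an alternative, `requests.get` also accepts a `params` dict and encodes it for you.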

Next, we send a GET request to the URL we built and parse the JSON response into the variable data. We also create two empty strings to collect the technology and social data later. You could use a pandas DataFrame instead, but we'll keep it to a simple console printout.

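# Send the GET request; no payload or custom headers are needed
response = requests.get(url, timeout=30)
response.raise_for_status()  # stop early on HTTP errors

# Parse the JSON body into a Python dict
data = response.json()

# Empty strings to collect the technology and social output
technology = ""
social = ""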
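Indexing straight into `data["Results"][0]` fails with a confusing error if the lookup went wrong (bad key, no credits, unknown domain). A small check before processing can make that clearer. This is a sketch under an assumption: that BuiltWith reports problems in a top-level `Errors` list, which you should confirm in its documentation; `check_builtwith_response` is a hypothetical helper.

```python
def check_builtwith_response(data):
    """Return the "Results" list, or raise if the response looks unusable.

    Assumption: lookup problems are reported in a top-level "Errors" list.
    """
    errors = data.get("Errors") or []
    if errors:
        raise RuntimeError(f"BuiltWith returned errors: {errors}")
    results = data.get("Results") or []
    if not results:
        raise RuntimeError("No results found in the API response")
    return results
```

Usage: call `results = check_builtwith_response(data)` right after `data = response.json()`, then work with `results[0]`.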

Process API Response

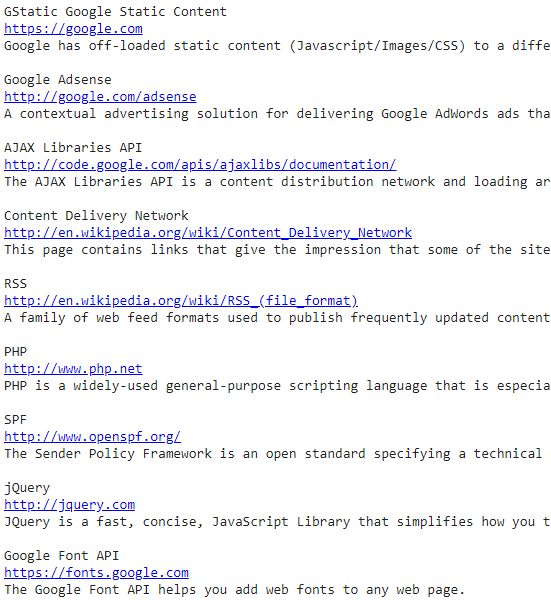

We now have the API's JSON response in the variable data. Let's print the name, documentation link, and description of each technology detected on the domain.

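# Loop over every scanned path of the domain and each technology found there
for result in data["Results"][0]["Result"]["Paths"]:
    for tech in result["Technologies"]:
        # f-strings avoid a TypeError if a field such as Description is None
        technology += f"{tech.get('Name')}\n{tech.get('Link')}\n{tech.get('Description')}\n\n"

print(technology)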

Output

Note that this output image is cropped in width and height; dozens of technologies were listed.

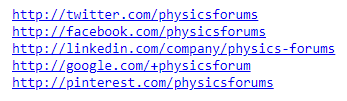
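If you want the technologies as structured data rather than one long string (for example, to load into a pandas DataFrame later), a sketch like the following collects unique names across all paths. It assumes each technology entry may carry a `Tag` category field; if yours doesn't, that value is simply None. The `extract_technologies` helper is hypothetical and the sample data is made up to mirror the Results → Result → Paths → Technologies shape used above.

```python
def extract_technologies(data):
    """Collect unique technologies across every path in the first result."""
    seen = {}
    for path in data["Results"][0]["Result"]["Paths"]:
        for tech in path.get("Technologies", []):
            name = tech.get("Name")
            # Skip unnamed entries and technologies already seen on another path
            if name and name not in seen:
                seen[name] = {
                    "name": name,
                    "tag": tech.get("Tag"),  # assumed category field; may be absent
                    "link": tech.get("Link"),
                }
    # Sort case-insensitively so the output is stable and easy to scan
    return sorted(seen.values(), key=lambda t: t["name"].lower())

# Made-up sample data; jQuery appears on two paths but is kept once
sample = {"Results": [{"Result": {"Paths": [
    {"Technologies": [{"Name": "PHP", "Tag": "framework", "Link": "https://www.php.net"},
                      {"Name": "jQuery", "Tag": "javascript", "Link": "https://jquery.com"}]},
    {"Technologies": [{"Name": "jQuery", "Tag": "javascript", "Link": "https://jquery.com"}]},
]}}]}

for t in extract_technologies(sample):
    print(t["name"], t["tag"], t["link"])
```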

Next, let's print the social media accounts detected for the domain. Each value is the URL of a social account. We use a try/except because some domains have no detected social media accounts.

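try:
    # Each value is the URL of a detected social media profile
    for value in data["Results"][0]["Meta"]["Social"]:
        social += str(value) + "\n"
except (KeyError, TypeError):
    # No Meta/Social data was returned for this domain
    social = "n/a"

print(social)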

Social Output

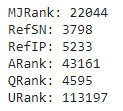
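To see at a glance which platforms a site is on, you can group the social URLs by host name. This is a minimal sketch with a hypothetical `social_by_platform` helper; since I'm not certain whether every value includes a scheme (https://), it handles URLs both with and without one.

```python
from urllib.parse import urlparse

def social_by_platform(urls):
    """Group social profile URLs by host name, e.g. {"twitter.com": [...]}."""
    grouped = {}
    for url in urls:
        # urlparse only fills netloc when a scheme or leading "//" is present
        to_parse = url if "://" in url else "//" + url
        host = urlparse(to_parse).netloc.lower()
        if host.startswith("www."):
            host = host[len("www."):]
        grouped.setdefault(host, []).append(url)
    return grouped
```

Usage: `social_by_platform(data["Results"][0]["Meta"]["Social"])`, wrapped in the same try/except as above in case Meta or Social is missing.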

Next, we can print the Majestic Rank, Referring Subnets, Referring IPs, Ahrefs Rank, Quantcast Rank, and Umbrella Rank. Note that you'll only get data for these metrics if the domain is in the top 1M sites (according to Majestic).

# Attributes holds MJRank, RefSN and RefIP; Meta holds ARank, QRank and Umbrella
attributes = data["Results"][0].get("Attributes") or {}
meta = data["Results"][0].get("Meta") or {}

# .get() prints None instead of crashing when a metric isn't available
print("MJRank: " + str(attributes.get("MJRank")))
print("RefSN: " + str(attributes.get("RefSN")))
print("RefIP: " + str(attributes.get("RefIP")))
print("ARank: " + str(meta.get("ARank")))
print("QRank: " + str(meta.get("QRank")))
print("URank: " + str(meta.get("Umbrella")))
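Because these metrics are missing for many domains, a small helper that walks the nested JSON and falls back to a default keeps the lookups readable. This is a sketch with hypothetical names (`get_nested`, `METRICS`, `rank_summary`); the key paths are the same ones used in the print statements above.

```python
def get_nested(data, *keys, default=None):
    """Walk nested dicts/lists by key or index; return default if any step is missing."""
    current = data
    for key in keys:
        try:
            current = current[key]
        except (KeyError, IndexError, TypeError):
            return default
    return current

# Label -> (section, field) pairs taken from the print statements above
METRICS = {
    "MJRank": ("Attributes", "MJRank"),
    "RefSN": ("Attributes", "RefSN"),
    "RefIP": ("Attributes", "RefIP"),
    "ARank": ("Meta", "ARank"),
    "QRank": ("Meta", "QRank"),
    "URank": ("Meta", "Umbrella"),
}

def rank_summary(data):
    """Return every metric for the first result, using "n/a" when absent."""
    return {label: get_nested(data, "Results", 0, *path, default="n/a")
            for label, path in METRICS.items()}
```

Usage: `for label, value in rank_summary(data).items(): print(f"{label}: {value}")`.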

Stats Output

As mentioned earlier, the response contains more data than this. I only highlighted what I found most interesting; to see everything in the Attributes and Meta sections, loop through them with the code below.

# Print every field in the Attributes section
for key, value in data["Results"][0]["Attributes"].items():
    print(str(key) + ":" + str(value))

# Print every field in the Meta section
for key, value in data["Results"][0]["Meta"].items():
    print(str(key) + ":" + str(value))
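Since the point of using the API is to store the data rather than just view it, here is one way to save it: the raw response as JSON plus one CSV row per detected technology. This is a sketch using only the standard library; `save_results` and the file names are hypothetical, and a technology found on several paths appears once per path in the CSV.

```python
import csv
import json

def save_results(data, domain, json_path, csv_path):
    """Store the raw API response as JSON and one CSV row per detected technology."""
    # Keep the full response so nothing is lost for later analysis
    with open(json_path, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=2)

    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["domain", "name", "link", "description"])
        for path in data["Results"][0]["Result"]["Paths"]:
            for tech in path.get("Technologies", []):
                writer.writerow([domain, tech.get("Name", ""), tech.get("Link", ""),
                                 tech.get("Description", "")])
```

Usage: `save_results(data, domain, "builtwith_raw.json", "builtwith_tech.csv")`.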

That’s all, folks! As always, be sure to follow me and let me know how this script works for you and how you extend it.

Python and BuiltWith API FAQ

How can I use Python to detect web page technologies using the BuiltWith API?

Employ Python scripts to interact with the BuiltWith API, sending requests to detect and retrieve the technologies utilized by a given web page.

What Python libraries are commonly used for making API requests to BuiltWith in order to detect web page technologies?

The requests library is commonly used in Python for making HTTP requests. It can be employed to send requests to the BuiltWith API and retrieve information about the technologies used on a web page.

What types of technologies can be detected using the BuiltWith API with Python?

The BuiltWith API can identify a variety of technologies employed on a web page, including content management systems, web servers, analytics tools, JavaScript frameworks, and more.

Are there any specific considerations or limitations when using the BuiltWith API to detect web page technologies with Python?

Consider the rate limits imposed by the BuiltWith API, handle authentication requirements, and be aware that the accuracy of detected technologies may vary based on the complexity of the web page.
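When checking many domains, one way to respect rate limits is to pause between requests and record failures instead of stopping the whole run. This is a sketch: `lookup_many` is hypothetical, the default one-second delay is an arbitrary placeholder rather than BuiltWith's documented limit, and `fetch` stands for any function you write that takes a domain and returns the parsed JSON (for example, the requests call from this tutorial). Remember that each lookup consumes credits.

```python
import time

def lookup_many(domains, fetch, delay_seconds=1.0):
    """Call fetch(domain) for each domain, pausing between requests.

    Failures are recorded as {"error": message} so one bad domain
    doesn't stop the rest of the batch.
    """
    results = {}
    for i, domain in enumerate(domains):
        if i:
            time.sleep(delay_seconds)  # placeholder pacing; check BuiltWith's limits
        try:
            results[domain] = fetch(domain)
        except Exception as exc:
            results[domain] = {"error": str(exc)}
    return results
```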

Where can I find examples and documentation for using Python with the BuiltWith API to detect web page technologies?

Explore the official BuiltWith API documentation for comprehensive guides and examples. Additionally, refer to online tutorials and Python resources for practical demonstrations and implementation details.
