Competitive SEO URL Analysis with Python

Match your URLs to your competitor’s URLs and find differences in title keywords and ranking keyword counts with this step-by-step Python SEO tutorial. SEO is not an island. You are not improving your site and pages in a vacuum. You need to consider your competition, since you are all jockeying for position in the same SERPs. Some URLs win and some lose.

How do you know where to start looking? For one-off keywords and URLs, it’s pretty easy to do manually, but what if you wanted to quickly understand which of your URLs are likely competing with which URLs of your competitors? Read ahead, Python to the rescue!

Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab.

- Screaming Frog crawl CSV of your site, named you.csv

- Screaming Frog crawl CSV of your competitor’s site, named comp.csv

- Semrush API key (optional)

Import and Install Modules

- pandas: for importing the URL CSV files, storing and exporting results

- polyfuzz: handles the fuzzy matching processing of URLs

- requests: for making API calls to Semrush

- re: for matching and capturing base domain from URL

- json: for processing Semrush API response

- nltk: for NLP word tagging in titles

Before we begin, remember to watch the indents of anything you copy here, as sometimes the code snippets don’t display or copy perfectly. Also, note my code efficiency and naming conventions are admittedly poor in this tutorial. Reach out if you are unsure what something is, and feel free to rename variables for your own usage.

First, we’ll install the polyfuzz and NLTK modules in your terminal; they will do the bulk of the work for us! If you are using Google Colab, put an exclamation mark at the beginning of each command.

pip install polyfuzz

pip install nltk

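Once those are installed, we’re good to import the modules into our script. The “punkt” NLTK download is for tokenizing and “averaged_perceptron_tagger” is for word tagging. Resource names can differ between NLTK releases, so if the tagging step later raises a LookupError, download the resource named in the error message.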

import pandas as pd

import requests

import re

import json

from polyfuzz import PolyFuzz

import nltk

nltk.download('punkt')

nltk.download('averaged_perceptron_tagger')

Variable and Crawl Data Set-Up

Now let’s set up a few important variables. Later on, we’re going to be tokenizing and comparing differences between page titles. To be most efficient and precise, we want to strip out anything that is boilerplate or out of context for that page. Nine times out of ten this is the branding at the end of a title. Lastly, insert your Semrush API key, found in your profile account settings.

url_branding1 = "" #ex. | ABC Tools

url_branding2 = "" #ex. - Waldofy Accounting

apikey = ""

Next, we import the crawl CSVs into dataframes, including only the columns we’re going to use. Note the names of the files below. Rename your files to match them or vice versa.

df_you = pd.read_csv('you.csv')[['Address','Status Code','Indexability','Title 1']]

df_comp = pd.read_csv('comp.csv')[['Address','Status Code','Indexability','Title 1']]

Now we’re going to select the first value in the Address column of each dataframe. You’ll see why in the following code block.

domainrecord1 = df_you["Address"].loc[0]

domainrecord2 = df_comp["Address"].loc[0]

With those two values, we use regex to extract two things: the domain address (scheme and host), which we strip from each URL before matching, and the root domain name without the TLD, which we use for naming later on. To accomplish this we use the search() function in the re module we imported.

getdomain1 = re.search(r'^[^\/]+:\/\/[^\/]*?\.?([^\/.]+)\.[^\/.]+(?::\d+)?\/', domainrecord1, re.IGNORECASE)

if getdomain1:

    domain1 = getdomain1.group(0)  # full match: scheme and host with trailing slash

    name1 = getdomain1.group(1)  # captured root domain without TLD

getdomain2 = re.search(r'^[^\/]+:\/\/[^\/]*?\.?([^\/.]+)\.[^\/.]+(?::\d+)?\/', domainrecord2, re.IGNORECASE)

if getdomain2:

    domain2 = getdomain2.group(0)

    name2 = getdomain2.group(1)
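Since the same pattern runs twice, it could be wrapped in a small helper. The following is a minimal sketch, not the tutorial's own code: the function name `extract_domain` and the example.com URL are made up for illustration, and the pattern is the one above with the redundant slash escapes dropped.

```python
import re

# Same pattern as above, compiled once; a raw string avoids escape warnings.
DOMAIN_PATTERN = re.compile(
    r'^[^/]+://[^/]*?\.?([^/.]+)\.[^/.]+(?::\d+)?/', re.IGNORECASE
)


def extract_domain(url):
    """Return (domain_prefix, root_name), or (None, None) when nothing matches."""
    match = DOMAIN_PATTERN.search(url)
    if match is None:
        return None, None
    # group(0) is the whole match (scheme + host + "/"), group(1) the root name
    return match.group(0), match.group(1)


# Hypothetical URL, used only to show the two return values
domain, name = extract_domain("https://www.example.com/blog/post/")
print(domain, name)
```

Note that the pattern requires a slash after the host, so a bare "https://example.com" returns (None, None). Multi-part TLDs such as .co.uk are also not handled specially.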

Crawl Dataframe Clean Up

Now it’s time to clean up the crawl dataframes to be ready for processing. The list below follows each line in the code chunk.

- Filter out any URLs with “page” in the structure. Pagination pages are rarely important to compare.

- Filter out anything other than URLs with status code “200”.

- Filter out anything other than URLs that are indexable.

- Strip out the root domain address from each URL.

- Strip out the branding from each title

df_you = df_you[~df_you['Address'].str.contains('page')]

df_you = df_you[df_you['Status Code'] == 200]

df_you = df_you[df_you['Indexability'] == 'Indexable']

df_you['Address'] = df_you['Address'].str.replace(domain1, '', regex=False)

df_you['Title 1'] = df_you['Title 1'].str.replace(url_branding1, '', regex=False)

df_comp = df_comp[~df_comp['Address'].str.contains('page')]

df_comp = df_comp[df_comp['Status Code'] == 200]

df_comp = df_comp[df_comp['Indexability'] == 'Indexable']

df_comp['Address'] = df_comp['Address'].str.replace(domain2, '', regex=False)

df_comp['Title 1'] = df_comp['Title 1'].str.replace(url_branding2, '', regex=False)
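Because the same five steps run on both dataframes, they could be collected into one function. Here is a rough sketch under a few assumptions: `clean_crawl` is a hypothetical name, the sample rows are invented, and `regex=False` is used so that characters like "." or "|" in the domain and branding are treated as literal text.

```python
import pandas as pd


def clean_crawl(df, domain, branding):
    """Apply the clean-up steps listed above to one crawl dataframe."""
    df = df[~df['Address'].str.contains('page', na=False)]  # drop pagination URLs
    df = df[df['Status Code'] == 200]                        # keep 200s only
    df = df[df['Indexability'] == 'Indexable'].copy()        # keep indexable URLs
    df['Address'] = df['Address'].str.replace(domain, '', regex=False)
    df['Title 1'] = df['Title 1'].str.replace(branding, '', regex=False)
    return df


# Invented sample data in the shape of a Screaming Frog export
sample = pd.DataFrame({
    'Address': [
        'https://www.example.com/tools/',
        'https://www.example.com/blog/page/2/',
        'https://www.example.com/old/',
    ],
    'Status Code': [200, 200, 404],
    'Indexability': ['Indexable', 'Indexable', 'Non-Indexable'],
    'Title 1': ['Free Tools | Example', 'Blog | Example', 'Old | Example'],
})

cleaned = clean_crawl(sample, 'https://www.example.com/', ' | Example')
print(cleaned)
```

Keep in mind that the 'page' filter is a plain substring test, so it also drops URLs such as /landing-pages/. Tighten it if that matters for your site.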

URL Fuzzy Matching

Next, convert each dataframe’s Address columns to lists for easier polyfuzz processing.

comp_list = df_comp['Address'].tolist()

you_list = df_you['Address'].tolist()

Now we send both Address lists into PolyFuzz for fuzzy matching. The model we will use is EditDistance. We sort by Similarity score (1 is a perfect match), round to 3 decimal places, and then drop any rows with a score below .857, which I have found to be the point below which matches become unreliable.

model = PolyFuzz("EditDistance")

model.match(you_list, comp_list)

df_results = model.get_matches()

df_results = df_results.sort_values(by='Similarity', ascending=False)

df_results["Similarity"] = df_results["Similarity"].round(3)

index_names = df_results[ df_results['Similarity'] < .857 ].index

df_results.drop(index_names, inplace = True)
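To get a feel for what a similarity score near the .857 cut-off means, here is a small pure-Python sketch of a normalized edit-distance score. This is an illustration of the general idea only: PolyFuzz's EditDistance model may use a different scorer internally, so its numbers need not match these. The function names and example paths are made up.

```python
def levenshtein(a, b):
    """Count the single-character edits needed to turn string a into string b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete a character from a
                current[j - 1] + 1,      # insert a character into a
                previous[j - 1] + cost,  # substitute (free if chars match)
            ))
        previous = current
    return previous[-1]


def similarity(a, b):
    """Scale the distance to 0..1, where 1 means the strings are identical."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1 - levenshtein(a, b) / longest


# Two hypothetical URL paths that differ by one character
print(round(similarity("blog/seo-tips/", "blog/seo-tip/"), 3))
```

Under this definition, short paths are penalized more per edit than long ones, which is one reason a single fixed threshold may need adjusting for your own URL structure.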

Merge Matching Data into Master Dataframe

We now have our dataframe with URL matches. We want to bring that back into each of the original dataframes that contained a lot of useful data. We’ll use Pandas merge() function to first merge the fuzzy matched URL dataframe into the master dataframe that you first imported with your site’s crawl data. Then we merge the resulting dataframe into the initial competitor crawl dataframe. There will be some duplicate columns so we’ll drop those and rename the existing columns using your domain name and your competitor’s domain name for easier labeling. Lastly, we add back in the root domain paths we initially took out for the fuzzy matching.

df_merge1 = pd.merge(df_results, df_you, left_on='From', right_on='Address', how='inner')

df_merge1 = df_merge1.drop(columns=['Address','Indexability', 'Status Code'])

df_merge1 = df_merge1.rename(columns={"From": name1 +" URL", "To": name2 + " URL", "Title 1": name1 +" Title"})

df_merge2 = pd.merge(df_merge1, df_comp, left_on= name2 + ' URL', right_on='Address', how='inner')

df_merge2 = df_merge2.drop(columns=['Address', 'Indexability', 'Status Code'])

df_merge2 = df_merge2.rename(columns={"Title 1": name2 + " Title"})

df_merge2 = df_merge2[[name1 + " URL"] + [name1 + " Title"] + [name2 + " URL"] + [name2 + " Title"] + ["Similarity"]]

df_merge2[name1 + " URL"] = domain1 + df_merge2[name1 + " URL"]

df_merge2[name2 + " URL"] = domain2 + df_merge2[name2 + " URL"]

Prepare Titles for Keyword Difference Processing

The next part is a big code chunk, but only because it’s the same code working on both sets of titles. The first chunk is for your site’s titles and the second is for your competitor’s.

- Make your titles into a list

- Loop through that list

- Split each title into a list delineating by space characters.

- Sometimes empty items can occur, if we find one, remove it, if not, continue

- Use NLTK module to word tag each token.

- Filter the list to only include the word tags we want. Mostly nouns and verbs. Adjust as needed.

- Remove the branding pipe from the list

- Remove the word tags from the list once we already have the list filtered. They are no longer needed.

- Repeat for the competitor’s titles

####### tokenize your titles

title_token = df_merge2[name1 + " Title"].tolist()

for z in range(len(title_token)):

    title_token[z] = title_token[z].split(" ")

    try:

        title_token[z].remove('')  # drop an empty item if one exists

    except ValueError:

        pass

    title_token[z] = nltk.pos_tag(title_token[z])  # list of (word, tag) pairs

    title_token[z] = [x for x in title_token[z] if x[1] in ['NN','NNS','NNP','NNPS','VB','VBD']]

    title_token[z] = [x for x in title_token[z] if x[0] != '|']

    title_token[z] = [x[0] for x in title_token[z]]  # keep the words, drop the tags

####### tokenize competitor titles

title_token2 = df_merge2[name2 + " Title"].tolist()

for z in range(len(title_token2)):

    title_token2[z] = title_token2[z].split(" ")

    try:

        title_token2[z].remove('')  # drop an empty item if one exists

    except ValueError:

        pass

    title_token2[z] = nltk.pos_tag(title_token2[z])  # list of (word, tag) pairs

    title_token2[z] = [x for x in title_token2[z] if x[1] in ['NN','NNS','NNP','NNPS','VB','VBD']]

    title_token2[z] = [x for x in title_token2[z] if x[0] != '|']

    title_token2[z] = [x[0] for x in title_token2[z]]  # keep the words, drop the tags
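Both loops do the same work, so they could share one function. The sketch below assumes a hypothetical helper named `keyword_tokens` that takes the tagger as an argument; in the real script you would pass `nltk.pos_tag`. To keep the example runnable without NLTK data, it uses a stand-in tagger with hand-written tags, which are illustrative and not real tagger output.

```python
KEEP_TAGS = {'NN', 'NNS', 'NNP', 'NNPS', 'VB', 'VBD'}


def keyword_tokens(title, tagger, keep_tags=KEEP_TAGS):
    """Split a title on spaces, tag each word, and keep only the wanted tags."""
    words = [w for w in title.split(" ") if w]  # removes every empty item
    tagged = tagger(words)                       # expects (word, tag) pairs
    return [word for word, tag in tagged if tag in keep_tags and word != '|']


# Stand-in for nltk.pos_tag: tags are hand-assigned for this example only
FAKE_TAGS = {'Best': 'JJS', 'for': 'IN'}


def fake_tagger(words):
    return [(w, FAKE_TAGS.get(w, 'NN')) for w in words]


print(keyword_tokens("Best Accounting  Tools | for Startups", fake_tagger))
```

With this in place, each title list could be built with a list comprehension, for example `[keyword_tokens(t, nltk.pos_tag) for t in titles]`.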

Find Title Keyword Differences

Next, we want to figure out the difference between the keywords found in your title and your competitor’s title for each matched URL. We first create an empty list to store the keywords we find. Then we loop through the titles and use set() to return the words that appear in one title but not the other. We do this for each URL match and add the result to the container list. Once all matches are processed, we convert the list to a pandas Series and add that Series to the main dataframe as a new column. We now have our title keyword differences!

#title difference

title_diff = []

for x in range(len(title_token)):

    diff = list(set(title_token[x]) - set(title_token2[x])) + list(set(title_token2[x]) - set(title_token[x]))

    title_diff.append(diff)

diff_series = pd.Series(title_diff)

df_merge2["Title Difference"] = diff_series
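The two set subtractions joined together are what Python calls a symmetric difference, which sets support directly through the `^` operator. A minimal sketch, with a made-up function name and made-up token lists:

```python
def title_difference(tokens_a, tokens_b):
    """Return the words found in exactly one of the two token lists."""
    # ^ is the set symmetric difference; sorting gives a repeatable order
    return sorted(set(tokens_a) ^ set(tokens_b))


# Hypothetical token lists for one matched URL pair
yours = ['Accounting', 'Tools', 'Startups']
theirs = ['Accounting', 'Software', 'Startups']
print(title_difference(yours, theirs))
```

Sorting is optional, but converting a set straight to a list gives no guaranteed order, which can make exported CSVs differ between runs.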

Semrush API for Ranking Keyword Differences

Now we prepare to find each matched URL’s ranking keywords via the Semrush API. We do this as an indicator of which URLs we should be paying attention to. Just because two URLs match and there are title differences doesn’t mean much on its own. The important information is which competitor pages are outperforming your pages. Those are the ones you want to focus on. The method I chose was to see if your competitor was ranking for more keywords. If they are, that is the sign you want to spend the time and compare these pages deeper.

df_comp3 = df_merge2[name2 + " URL"].tolist()

df_you3 = df_merge2[name1 + " URL"].tolist()

This is another large code chunk but again, half is for your URLs and the second half for your competitor’s. A function could be in order, but ya know, I’m lazy.

- First, we create a list to hold the number of ranking keywords for each URL. Note that for the sake of API cost, I have capped the report at 50 keywords per URL. If you want more, raise this number, but it will cost you more credits per keyword returned.

- Loop through the URL list. If no API key is set, we skip the API call and record a count of 0 for that URL.

- Make the Semrush API call. See the documentation for more parameters.

- For some insane reason, this API report is returned as text and not JSON. That means it’s obnoxious to process. I do it a lazy way. Each keyword is on a separate line. There are two heading lines at the top. So I simply split the lines, count the lines and subtract 2 and that gives us the keyword count. We add each count to the master list and at the end add the master list to the dataframe.

- Repeat for the competitor URLs

######### get num of keywords per your URL

keyword_count2 = []

for x in df_you3:

    if apikey != '':

        url = f'https://api.semrush.com/?type=url_organic&key={apikey}&display_limit=50&url={x}&database=us'

        payload = {}

        headers = {}

        response = requests.request("GET", url, headers=headers, data=payload)

        response = response.text.split("\n")

        keyword_count2.append(len(response)-2)

    else:

        keyword_count2.append(0)

df_merge2[name1 + " Keywords"] = keyword_count2

############# get num of keywords per competitor URL

keyword_count = []

for x in df_comp3:

    if apikey != '':

        url = f'https://api.semrush.com/?type=url_organic&key={apikey}&display_limit=50&url={x}&database=us'

        payload = {}

        headers = {}

        response = requests.request("GET", url, headers=headers, data=payload)

        response = response.text.split("\n")

        keyword_count.append(len(response)-2)

    else:

        keyword_count.append(0)

df_merge2[name2 + " Keywords"] = keyword_count
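If you do want functions here, one option is to separate building the request URL from counting rows in the text report. The sketch below rests on several assumptions that you should check against a real response: the helper names are hypothetical, the sample report text is invented, the report is assumed to have one header row once blank lines are ignored (the code above instead subtracts 2 from the raw line count), and error responses are assumed to start with "ERROR". It also URL-encodes the page address, which the f-string version does not.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://api.semrush.com/"


def build_report_url(page_url, apikey, limit=50, database="us"):
    """Build the url_organic request URL with every parameter encoded."""
    params = {
        "type": "url_organic",
        "key": apikey,
        "display_limit": limit,
        "url": page_url,
        "database": database,
    }
    return API_ENDPOINT + "?" + urlencode(params)


def count_keywords(report_text, header_lines=1):
    """Count data rows in a text report, ignoring blank lines and headers."""
    lines = [line for line in report_text.splitlines() if line.strip()]
    # Assumption: an error or empty result comes back as a line starting "ERROR"
    if not lines or lines[0].startswith("ERROR"):
        return 0
    return max(len(lines) - header_lines, 0)


# Invented sample text, not a real API response
sample_report = "Keyword;Position\nseo tools;3\nseo audit;7\n"
print(count_keywords(sample_report))
print(build_report_url("https://www.example.com/a?b=1", "YOUR_KEY"))
```

Each loop then reduces to fetching the text of `build_report_url(x, apikey)` with requests and passing it to `count_keywords`.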

Now we do some list comprehension, subtracting the two keyword counts for each list to get the difference in number. A negative number will indicate the competitor is ranking for more keywords for the matched URLs and that is a URL you want to dig deeper on. Lastly, we append the new keyword difference column to the master dataframe.

#add keyword diff to df

keydiff = [m - n for m,n in zip(keyword_count2,keyword_count)]

df_merge2["Keyword Difference"] = keydiff

Order, Display, and Save Final Dataframe

The last step is to order the dataframe columns into an arrangement that makes sense, print out the dataframe table, and export the CSV file.

#save and display

df_merge2 = df_merge2[[name1 + " URL"] + [name1 + " Title"] + [name1 + " Keywords"] + [name2 + " URL"] + [name2 + " Title"] + [name2 + " Keywords"] + ["Keyword Difference"] + ["Title Difference"] + ["Similarity"]]

print(df_merge2)

df_merge2.to_csv(name1 + "VS" + name2 +".csv")

Conclusion

So there you have it! How easy was that!? You can now match up your URLs with your competitors, see what keywords are different in the titles, and prioritize optimization by keyword ranking difference. With this framework, you can extend it in so many interesting directions. Remember to try and make my code even more efficient. I’d love to see some functions used!

Now get out there and try it out! Follow me on Twitter and let me know your applications and ideas!

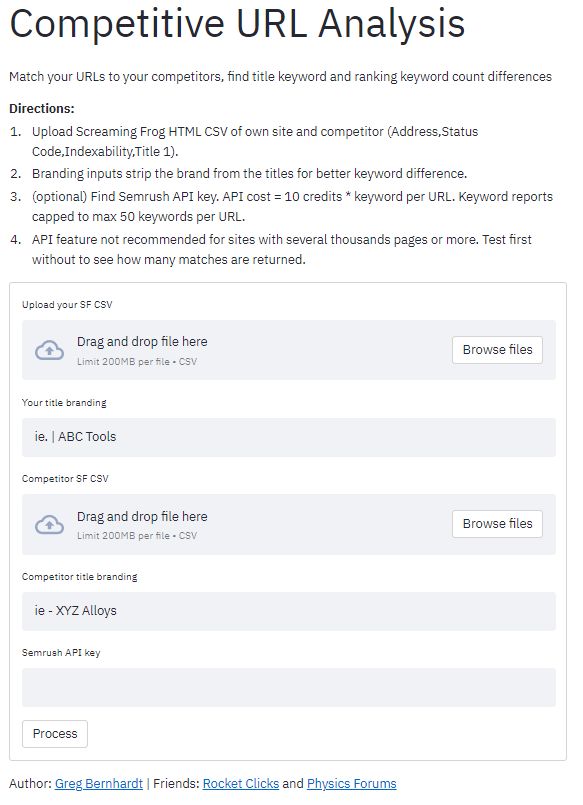

Be sure to check out the app for this tutorial and see my other apps available.

SEO URL Analysis FAQ

How can Python be employed for competitive SEO URL analysis?

Python scripts can be crafted to analyze URLs from competitors’ websites, extracting valuable information such as structure, keywords, and other SEO-related insights.

Which Python libraries are commonly used for competitive SEO URL analysis?

Python libraries like requests for fetching URLs, BeautifulSoup or lxml for parsing HTML content, and urllib for URL manipulation are commonly utilized in competitive SEO URL analysis.

What specific information can be extracted from competitors’ URLs using Python for SEO insights?

Python scripts can extract data such as URL structure, keywords present in URLs, metadata, and any patterns that competitors follow in organizing their URLs. This information aids in understanding their SEO strategies.

Are there any considerations or limitations when using Python for competitive SEO URL analysis?

Consider ethical aspects of web scraping, comply with website terms of service, and be aware of potential variations in HTML structure. Additionally, ensure that the analysis aligns with ethical SEO practices.

Where can I find examples and documentation for competitive SEO URL analysis with Python?

Explore online tutorials, documentation for web scraping libraries like BeautifulSoup, and resources specific to Python for SEO analysis for practical examples and detailed guides on competitive SEO URL analysis using Python.
