Analyze SERP Backlink Profiles in Bulk for SEO Using Python

The importance of the backlink as a quality signal has changed little since the early 2000s. The algorithms have evolved, but backlinks remain a strong ranking factor.

Looking up your own backlink stats and comparing them to competitors’ is an SEO staple. What can be new is leveling up how you run that process and working a little smarter. Let’s move past one-off competitor checks and start analyzing in bulk. We’ll use SERP scraping to see which sites matter most for a given keyword according to backlink data, and why.

In this Python SEO tutorial, I’m going to show you step by step how to build a script that scrapes the SERP for a given keyword and then analyzes the top X sites in that SERP to surface backlink insights.

There are dozens of metrics we can get from ahrefs to analyze, but in this tutorial, we’re going to focus on:

- ahrefs URL rank

- Backlinks count

- Referring domain count

- Top anchor text by backlink count

- Top anchor text by referring domain count

- % of anchor texts that contain at least one query one-gram (I think this one is really cool!)

- Average ahrefs rank of backlinks


Requirements and Assumptions

- Python 3 is installed and basic Python syntax is understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab

- SERPapi or similar service (will need to modify API call if not SERPapi)

- ahrefs API access

- Be careful when copying the code, as indentation is not always preserved

Install Python Modules

The only module you will likely need to install is the SERPapi module, which has the generic name google-search-results. If you are in a notebook, don’t forget to put an exclamation mark in front of the command.

pip3 install google-search-results

Import Python Modules

- requests: for calling both APIs

- pandas: storing the result data

- json: handling the response from the APIs

- serpapi: interfacing with the SERPapi API

- urllib.parse: for encoding and decoding URLs for passing to the APIs

- seaborn: a little pandas table conditional formatting fun

import requests
import pandas as pd
import json
from serpapi import GoogleSearch
import urllib.parse
import seaborn as sns

Setup API Variables

First, let’s just set up our key variables for each API. These keys will often be found in each platform’s account sections. Be sure to protect them.

ahrefs_apikey = ""
serp_apikey = ""
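One way to protect the keys is to read them from environment variables instead of pasting them into the script. This is a minimal sketch. It assumes two hypothetical variable names, AHREFS_API_KEY and SERPAPI_API_KEY, which you can rename to suit your setup.

```python
import os


def load_api_keys():
    """Read both API keys from environment variables.

    AHREFS_API_KEY and SERPAPI_API_KEY are hypothetical names chosen for
    this sketch; neither service requires them.
    """
    ahrefs_key = os.environ.get("AHREFS_API_KEY", "")
    serp_key = os.environ.get("SERPAPI_API_KEY", "")
    # Fail early with a clear message instead of sending empty tokens to the APIs
    if not ahrefs_key or not serp_key:
        raise RuntimeError("Set AHREFS_API_KEY and SERPAPI_API_KEY before running the script.")
    return ahrefs_key, serp_key


# Usage:
# ahrefs_apikey, serp_apikey = load_api_keys()
```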

Let’s set up some variables for the SERPapi call. See their full API documentation for many additional parameters should you need them.

- query: this is the query you want to search for

- location: the location you want the search to appear to come from

- lang: the interface language of the search (sent as hl)

- country: the country to search from (sent as gl). This is similar to location, so you usually want the two to align

- result_num: how many results you want returned. 8 is usually the first page, 16 is two pages, and so on

- google_domain: the country-specific domain you want to search from (ex. google.com or google.fr). This usually aligns with some of the parameters above.

query = ""
location = ""
lang = ""
country = ""
result_num = ""
google_domain = ""

Make SERP API Call

Next, we build the simple API call from the parameters above (add any others you want), run it, and receive the results as a Python dictionary parsed from the JSON response. Simple!

params = {
    "q": query,
    "location": location,
    "hl": lang,
    "gl": country,
    "num": result_num,
    "google_domain": google_domain,
    "api_key": serp_apikey,
}

search = GoogleSearch(params)
results = search.get_dict()
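The loop over the results assumes every response has an "organic_results" list. A failed search or a SERP with no organic listings would break that assumption. The defensive sketch below makes some assumptions. As far as I know, SerpApi reports failures under an "error" key. Each organic result is also assumed to carry a "link" field. extract_organic_links is a hypothetical helper.

```python
def extract_organic_links(results):
    """Return the organic result URLs from a SerpApi-style results dictionary.

    Assumes failures are reported under an "error" key and that organic
    results live under "organic_results", each with a "link" field.
    """
    if "error" in results:
        raise RuntimeError("SERP API error: " + str(results["error"]))
    # Default to an empty list when the SERP has no organic results,
    # and skip any entry that lacks a link
    return [item["link"] for item in results.get("organic_results", []) if "link" in item]


# Usage:
# links = extract_organic_links(results)
```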

Setup Container Lists and Dataframe

Now we create the lists that temporarily store our data before it moves into the pandas dataframe, where it will ultimately live. Any extra metric you want to store needs its own list and an additional column. I also split the query on spaces to create a list of one-grams. We’ll compare these against each URL’s anchor texts to gauge how topically strong its backlink anchor text is for the query.

df = pd.DataFrame(columns=['URL', 'UR', 'BL', 'Avg BL UR', 'RD', 'Top Anchor RD', 'Top Anchor BL', '%KW in Anchors'])

# split() with no argument ignores repeated spaces, so no empty one-grams
# (an empty string would match every anchor)
keyword_gram_list = query.split()

urls = []
kw_an_ratio_list = []
backlinks_list = []
backlink_UR_list = []
refdomains_list = []
rank_list = []
top_anchor_rd = []
top_anchor_bl = []
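With parallel lists, every list has to be appended exactly once per URL, or the columns drift out of step. An alternative pattern appends one dictionary per URL and builds the DataFrame once at the end. This is a sketch of that swapped-in approach rather than the method used in this tutorial. build_results_frame is a hypothetical helper.

```python
import pandas as pd

# Same column order as the tutorial's dataframe
COLUMNS = ["URL", "UR", "BL", "Avg BL UR", "RD", "Top Anchor RD", "Top Anchor BL", "%KW in Anchors"]


def build_results_frame(rows):
    """Build the results table from a list of per-URL dictionaries.

    Keys double as column names; a missing key becomes NaN in that row.
    """
    return pd.DataFrame(rows, columns=COLUMNS)


# Inside the per-URL loop you would append one dictionary, for example:
# rows.append({"URL": url, "UR": rank, "BL": backlinks, "RD": refdomains})
```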

Process SERP Result URLs

Now we start analyzing the top X sites for your query by looping through the results SERPapi returned. Next we’ll hit the ahrefs API. First, though, we need to URL-encode each SERP result URL, because it gets passed as an HTTP parameter. FYI, everything below runs inside a second loop over these encoded URLs. That covers all four ahrefs calls and the anchor text processing, up to and including appending the anchor text ratio. Only populating the dataframe happens after that loop.

for x in results["organic_results"]:
    urls.append(urllib.parse.quote(x["link"]))
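To see what the encoding step does, here is a round trip on a made-up URL. With its default settings, urllib.parse.quote leaves "/" alone. It escapes characters such as ":", spaces, "?", "=" and "&", which would otherwise break the outer query string. urllib.parse.unquote reverses this later when we display the URLs.

```python
import urllib.parse

original = "https://example.com/a b?x=1&y=2"  # made-up URL for illustration

# quote() keeps "/" by default but escapes ":", spaces, "?", "=", and "&"
encoded = urllib.parse.quote(original)
print(encoded)  # https%3A//example.com/a%20b%3Fx%3D1%26y%3D2

# unquote() restores the original, human-friendly form
decoded = urllib.parse.unquote(encoded)
print(decoded == original)  # True
```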

Retrieve Backlinks Count

Once the URL is encoded (so its special characters are safe to use in a parameter), we can make the first ahrefs call. We’ll need four calls, because the data is spread across four API report endpoints. See the full ahrefs API documentation to understand and modify the URL parameters. This first call gets the backlink and referring domain counts, which we load into our lists for later.

for x in urls:
    # Report 1: backlink and referring domain counts for the exact URL
    apilink = "https://apiv2.ahrefs.com/?token=" + ahrefs_apikey + "&target=" + x + "&limit=1000&output=json&from=metrics_extended&mode=exact"
    get_backlinks = requests.get(apilink)
    getback = json.loads(get_backlinks.text)
    backlinks = getback['metrics']['backlinks']
    backlinks_list.append(backlinks)
    refdomains = getback['metrics']['refdomains']
    refdomains_list.append(refdomains)
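The four ahrefs calls in this section share one URL pattern. They differ only in the report name, the limit, and occasionally an extra parameter. A small helper could assemble them. This is a sketch that simply mirrors the URLs used in this tutorial, and build_ahrefs_url is a hypothetical name, not part of any ahrefs library.

```python
def build_ahrefs_url(token, target, report, limit=1000, extra=""):
    """Assemble an ahrefs API v2 request URL in the shape this tutorial uses.

    target must already be URL-encoded. report is the "from" value, such as
    "metrics_extended", "ahrefs_rank", "backlinks", or "anchors". extra is any
    additional query string, e.g. "&order_by=ahrefs_rank%3Adesc".
    """
    return ("https://apiv2.ahrefs.com/?token=" + token
            + "&target=" + target
            + "&limit=" + str(limit)
            + "&output=json&from=" + report
            + extra
            + "&mode=exact")


# Usage inside the loop:
# getback = requests.get(build_ahrefs_url(ahrefs_apikey, x, "metrics_extended")).json()
```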

Retrieve URL Rank

The next ahrefs call retrieves the URL rank.

    # Report 2: the URL's own ahrefs rank
    apilink = "https://apiv2.ahrefs.com/?token=" + ahrefs_apikey + "&target=" + x + "&limit=1000&output=json&from=ahrefs_rank&mode=exact"
    get_rank = requests.get(apilink)
    getrank = json.loads(get_rank.text)
    rank = getrank['pages'][0]['ahrefs_rank']
    rank_list.append(rank)

Retrieve Backlink Avg Rank

The third API call retrieves the URL’s backlink profile and averages the ahrefs rank of those backlinks. You might need to raise the limit parameter to capture the whole profile.

    # Report 3: the backlinks themselves, so we can average their ahrefs rank
    apilink = "https://apiv2.ahrefs.com/?token=" + ahrefs_apikey + "&target=" + x + "&limit=2000&output=json&from=backlinks&order_by=ahrefs_rank%3Adesc&mode=exact"
    get_bl_rank = requests.get(apilink)
    getblrank = json.loads(get_bl_rank.text)
    all_bl_ratings = []
    for y in getblrank['refpages']:
        all_bl_ratings.append(y['ahrefs_rank'])
    # Avoid dividing by zero for URLs with no backlinks
    bl_avg_ur = round(sum(all_bl_ratings) / len(all_bl_ratings)) if all_bl_ratings else 0
    backlink_UR_list.append(bl_avg_ur)

Retrieve Anchor Text

The fourth and last API call retrieves all the anchor text for the URL’s backlinks. We’ll use it to find the highest-frequency anchor text and the percentage of anchor texts that contain a query one-gram.

    # Report 4: anchor texts with their backlink and referring domain counts
    apilink = "https://apiv2.ahrefs.com/?token=" + ahrefs_apikey + "&target=" + x + "&limit=3000&output=json&from=anchors&mode=exact"
    get_anchor = requests.get(apilink)
    getanchor = json.loads(get_anchor.text)

Query 1-grams in Anchor Text and Top Referring Domain Anchor Text

Next, we’ll count how many anchor texts contain at least one of the query’s one-gram words. This can be a great way to measure the topical and keyword signal strength of your backlink anchor texts, and we’ll use the final count to calculate a percentage later on. We also find the top anchor text by referring domain count. As we loop through the anchor data, we compare the current anchor’s count to the highest seen so far and keep whichever is greater.

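    kw_an_count = 0
    # Defaults so a URL with no anchors doesn't leave these undefined
    top_an_rd = ""
    top_an_bl = ""

    # Note: x now refers to one anchor row rather than the URL
    for count, x in enumerate(getanchor['anchors']):
        # Count anchors that contain any query one-gram (case-insensitive)
        if any(check.lower() in x['anchor'].lower() for check in keyword_gram_list):
            kw_an_count += 1

        # Keep the anchor with the most referring domains seen so far
        if count == 0 or an_rd < x['refdomains']:
            an_rd = x['refdomains']
            top_an_rd = x['anchor']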

Find Top Anchor Text By Backlinks

Now we do essentially the same for backlinks. This check goes inside the same anchor loop, right after the referring domain check. I track both because a site may link to you from a boilerplate area, such as a footer or sidebar, and send you thousands of backlinks from a single domain. That can skew frequency counts, which is why the referring domain count above is useful, but we should still compare against the entire backlink profile.

        # Keep the anchor with the most backlinks, or replace the current top anchor if it is empty
        if count == 0 or an_bl < x['backlinks'] or top_an_bl == "":
            an_bl = x['backlinks']
            top_an_bl = x['anchor']

    # After the anchor loop: label empty anchors so they read clearly in the table
    if top_an_rd == "":
        top_an_rd = "empty anchor"

    if top_an_bl == "":
        top_an_bl = "empty anchor"
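The boilerplate effect described above is easy to see with a few made-up anchor rows shaped like the entries the script reads from the anchors report. A single sitewide footer link can win by backlinks while losing badly by referring domains. This sketch uses max() in place of the manual comparison loop.

```python
# Made-up rows with the same fields the script reads from the anchors report
anchors = [
    {"anchor": "footer partner link", "backlinks": 5000, "refdomains": 1},
    {"anchor": "python seo guide", "backlinks": 40, "refdomains": 30},
    {"anchor": "", "backlinks": 12, "refdomains": 10},
]

# max() keeps the first row among ties, like the strict "<" comparison in the loop
top_by_bl = max(anchors, key=lambda a: a["backlinks"])["anchor"]
top_by_rd = max(anchors, key=lambda a: a["refdomains"])["anchor"]

print(top_by_bl)  # footer partner link
print(top_by_rd)  # python seo guide
```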

Calculate % Query 1-gram in Anchor Text

With the hard stuff out of the way, we calculate the percentage of anchor texts that contain a query one-gram and append it to a list. We also append the top anchor text by referring domains and by backlinks here.

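    # Percentage of anchors containing a query one-gram (0 if the URL has no anchors)
    total_anchors = len(getanchor['anchors'])
    kw_an_ratio = int((kw_an_count / total_anchors) * 100) if total_anchors else 0
    kw_an_ratio_list.append(kw_an_ratio)
    top_anchor_rd.append(top_an_rd)
    top_anchor_bl.append(top_an_bl)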
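The same calculation works as a standalone function, which makes it easy to check on a handful of made-up anchors. This sketch uses case-insensitive substring matching. A one-gram like "seo" therefore also matches "seoul", which is worth keeping in mind when you read the percentage. keyword_anchor_ratio is a hypothetical helper.

```python
def keyword_anchor_ratio(anchor_texts, query):
    """Percentage (0-100, truncated) of anchor texts containing at least one query one-gram."""
    grams = query.lower().split()
    if not anchor_texts:
        return 0
    # An anchor counts once, no matter how many one-grams it contains
    hits = sum(1 for text in anchor_texts if any(g in text.lower() for g in grams))
    return int(hits / len(anchor_texts) * 100)


print(keyword_anchor_ratio(["Python SEO tips", "click here", "learn python", ""], "python seo"))  # 50
```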

Populate Dataframe and Conditionally Format

The last thing to do is push the data from the lists into the dataframe we created earlier. Remember that we encoded the URLs for the ahrefs API, so we use a list comprehension to decode them back into a human-friendly form.

In the end, I thought it would be fun to use a seaborn color palette to conditionally format the numerical columns for easier scanning. Remember that once you call df.style, you are working with a pandas Styler object instead of a DataFrame. Do all your processing before applying the styling, or you’ll get errors.

# Decode the URLs back into a readable form
urls = [urllib.parse.unquote(x) for x in urls]

df['URL'] = urls
df['UR'] = rank_list
df['BL'] = backlinks_list
df['Avg BL UR'] = backlink_UR_list
df['RD'] = refdomains_list
df['Top Anchor RD'] = top_anchor_rd
df['Top Anchor BL'] = top_anchor_bl
df['%KW in Anchors'] = kw_an_ratio_list

# Green gradient on the numeric columns; df2 is a Styler, not a DataFrame
cm = sns.light_palette("green", as_cmap=True)
df2 = df.style.background_gradient(cmap=cm)
df2

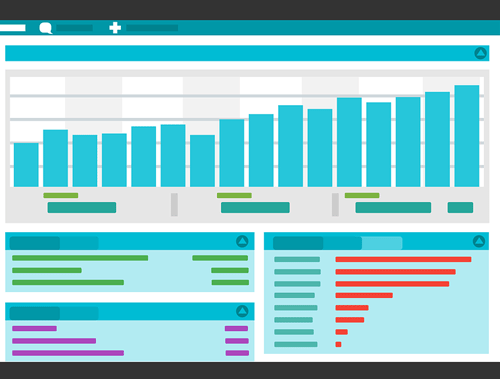

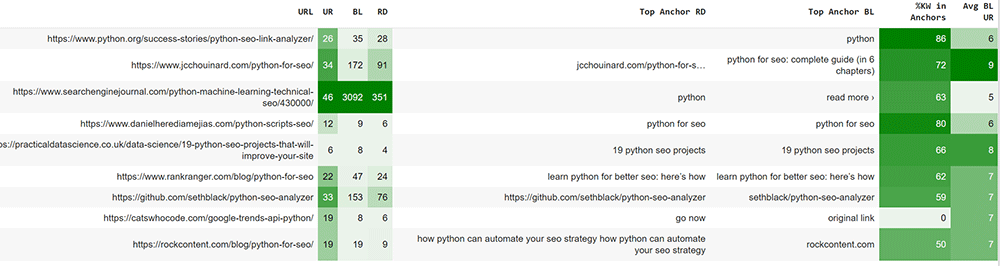

Sample Output

Here is the output for the query “python seo”, using the first page of results. Nice work from some of my Pythonista buddies JC Chouinard, Ruth Everett, Liraz Postan, and Daniel Heredia Mejais. Looks like I have some work to do with ImportSEM to crack the first page! Python.org doesn’t have the highest UR, BL, or RD count. Even so, it has the highest % of query 1-grams in its anchor text. Big signal!

Conclusion

So now you have a framework for analyzing your SERP competitors’ backlink profiles at the query level. Try to make my code even more efficient, and extend it in ways I never thought of! Here are a few ways to extend this script:

- Scrape the SERP URLs for SEO on-page factor (OPF) tests to find more patterns and correlations.

- Output top n-grams for anchor text by frequency rather than just top 1.

- Output the average referring domain rank for each URL

- Loop through an entire list of keywords instead of just one
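One reading of the idea to output more than just the top anchor text is to return the top n anchor texts. This is a minimal sketch. It assumes anchor rows with the same anchor, backlinks and refdomains fields the script reads from the ahrefs anchors report. top_n_anchors is a hypothetical helper.

```python
def top_n_anchors(anchors, n=5, metric="refdomains"):
    """Return the n anchor texts with the highest value for metric ("refdomains" or "backlinks")."""
    # sorted() is stable, so anchors with equal counts keep their original order
    ranked = sorted(anchors, key=lambda a: a[metric], reverse=True)
    # Label empty anchors the same way the main script does
    return [a["anchor"] or "empty anchor" for a in ranked[:n]]


# Usage inside the per-URL loop:
# top_anchors = top_n_anchors(getanchor['anchors'], n=5)
```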

If you’re into SERP analysis, see my other tutorial on calculating readability scores.

Now get out there and try it out! Follow me on Twitter and let me know your Python SEO applications and ideas!

SERP Backlink Profile FAQ

How can Python be utilized to analyze SERP backlink profiles in bulk using Ahrefs for SEO analysis?

Python scripts can be developed to interact with the Ahrefs API, allowing for the bulk analysis of SERP backlink profiles and gaining insights for SEO optimization.

Which Python libraries are commonly used for analyzing SERP backlink profiles with Ahrefs?

This tutorial uses requests for calling the APIs and pandas for storing and manipulating the data. It also uses the google-search-results (serpapi) module for SERP data and seaborn for conditional formatting. You can add other libraries depending on your analysis needs.

What specific steps are involved in using Python to analyze SERP backlink profiles with Ahrefs in bulk?

The process includes connecting to the Ahrefs API, fetching backlink data, preprocessing the data, and using Python for in-depth analysis and insights related to SEO strategies.

Are there any considerations or limitations when using Python for bulk analysis of SERP backlink profiles with Ahrefs?

Consider the limitations of the Ahrefs API, potential variations in backlink data, and the need for a clear understanding of the goals and criteria for analysis. Regular updates to the analysis may be necessary.

Where can I find examples and documentation for analyzing SERP backlink profiles with Python and Ahrefs?

Explore online tutorials, Ahrefs API documentation, and resources specific to SEO analysis for practical examples and detailed guides on using Python to analyze SERP backlink profiles in bulk with Ahrefs.
