Build a Custom Named Entity Visualizer with Google NLP

Entity SEO has been a hot topic since at least 2012/13 when Google released its knowledge graph and the Hummingbird algorithm. It’s a building block concept to semantic SEO and NLU/NLP. We even recently saw the term “entity” at least a few dozen times in the leaked Google API modules. This tutorial will generally assume you have a basic understanding of entities and natural language processing. If not, head over to these two resources: Entity SEO, and Natural Language Processing.

Entity SEO

- Optimizes web content to improve association with specific entities recognized by search engines.

- Entities are distinct, well-defined concepts such as people, places, organizations, events, or things.

- Aims to enhance the visibility and relevance of content related to these entities in search engine results.

Named Entity Recognition (NER)

- Sub-task of information extraction in NLP.

- Focuses on identifying and classifying named entities into categories like person names, organizations, locations, dates, and other proper nouns.

- Crucial for structuring unstructured text for easier analysis and meaningful information extraction.

- Applications include improving search engine results, information retrieval, sentiment analysis, and enhancing machine translation.

- Uses machine learning models trained on annotated datasets to recognize patterns and features indicating named entities.

- Common approaches: Conditional Random Fields (CRFs), Hidden Markov Models (HMMs), and deep learning models like recurrent neural networks (RNNs) and transformers.

One method that can help us understand what entities are in the content that we write is to use a named entity visualizer. This method is not very scalable due to the visualization and is best for analysis of one-off pieces of content.

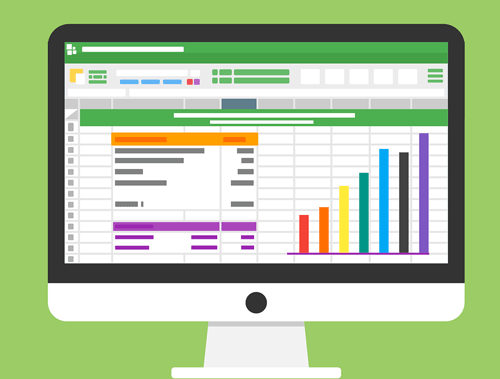

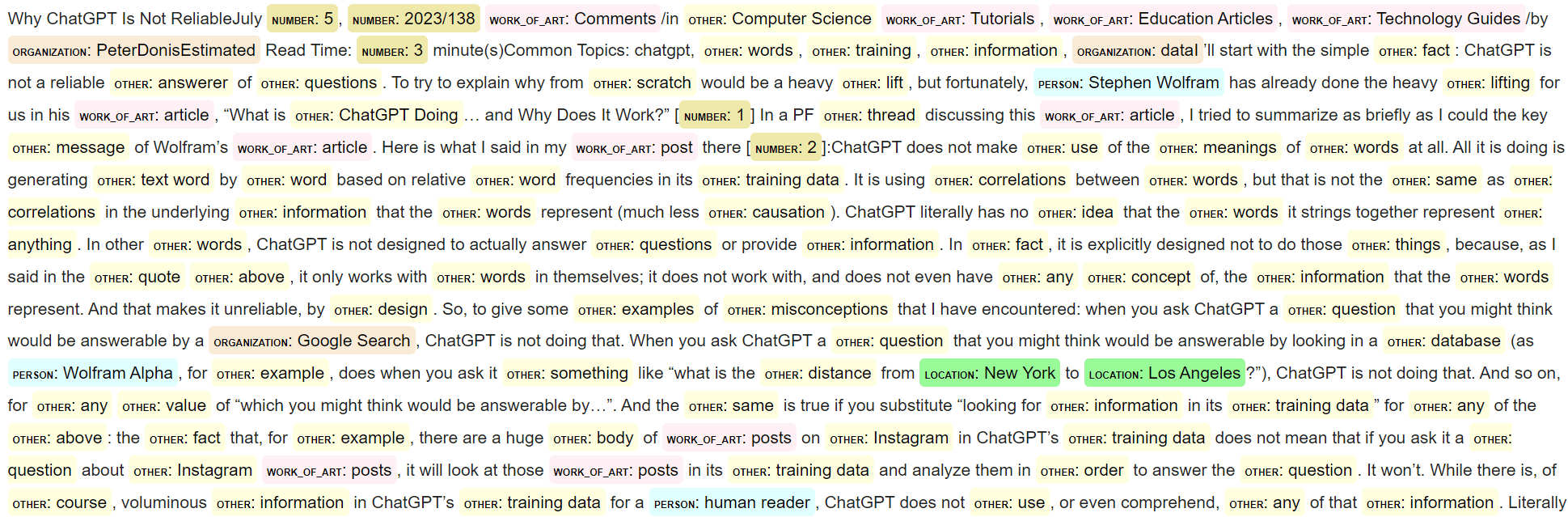

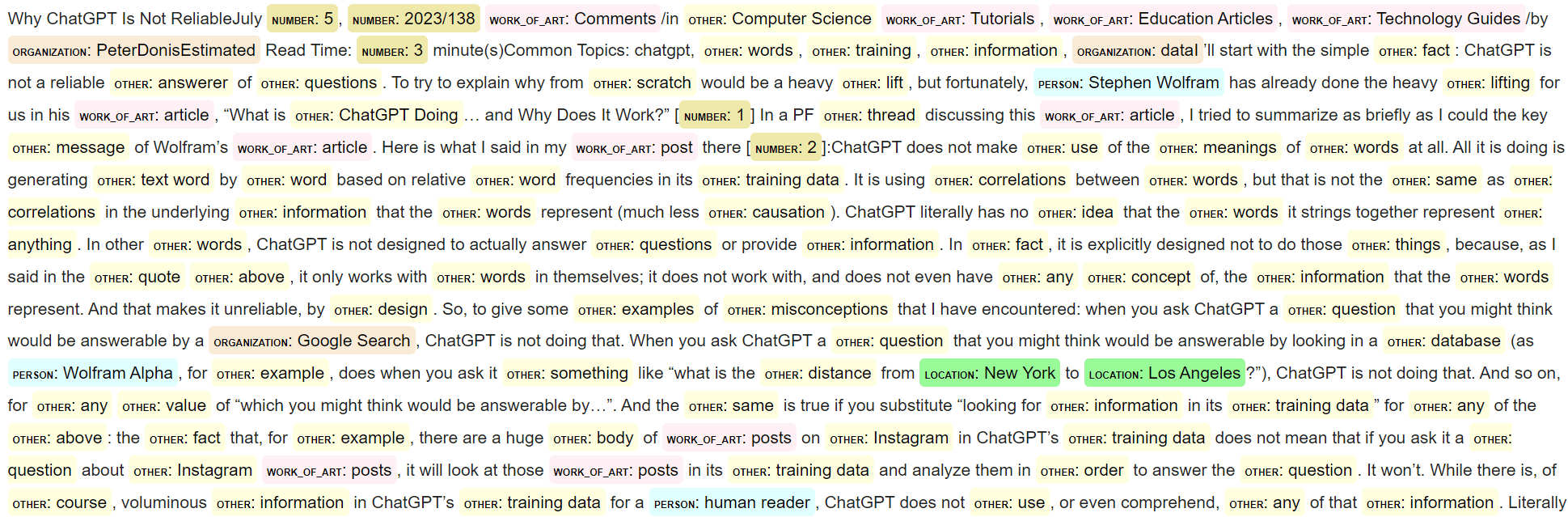

This Python SEO tutorial will help you build the starting framework for processing your text content through Google Natural Language API and then transform it into a visual that labels and color codes entity types. With this information, you can start understanding the entities, entity types, and their relationships in your content easier. It’s pretty easy and without many lines of code!

Note that we could use the free visualizer from spaCy called displaCy, but from testing, their NER is vastly inferior to Google Natural Language and in general, it’s better to get results from Google because you want to get a closer understanding of how they see your content.

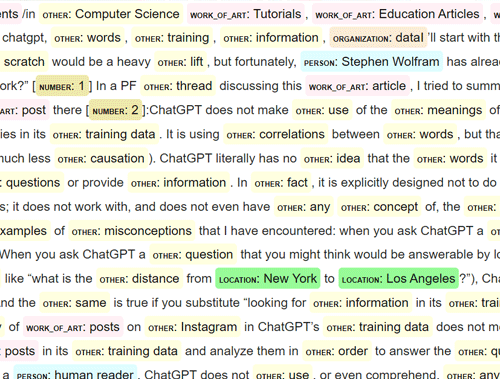

Below is an example of blog content processed by the code we’re going to build in this tutorial for the article Why ChatGPT is not Reliable.

Table of Contents

Requirements and Assumptions

- Python 3 is installed and basic Python syntax is understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab style notebook

- Google Natural Language (CNL) API enabled + service account JSON file

- Basic understanding of entities, NLP, and semantic SEO

- Be careful with copying the code as indents are not always preserved well

Get your CNL API Service Account

Getting a service account for the CNL API is pretty easy. CNL is the API that will be processing for the NER. Ensure you have access to the Google Cloud Platform with a billing account as the Natural Language API is paid but inexpensive. Head over to the Google Cloud Natural Language API page. Then You’ll see a blue “enable” button. Then create a service account in the credentials menu found in the left sidebar for the API and download the JSON key file when asked. That is what we’ll use to authenticate.

Importing Libraries

from google.cloud import language_v1 from google.oauth2 import service_account from IPython.core.display import display, HTML import os import requests from bs4 import BeautifulSoup import re from html import escape

- language_v1: processes the text for NLP

- service_account: handles Google Cloud authentication

- display, HTML: for rendering the HTML that builds the visualizer

- requests: grabs the page text from the specified URL

- BeautifulSoup: parses the source code HTML to get the article text

- re: for the text replacement to label the named entities

- escape: safely handle processing

After importing the above modules let’s create our function that sends the article context to Google Cloud Natural Language API. The explanation of each code line is as follows:

- Load the service account credentials (assuming you renamed your JSON file data.json)

- Initialize the Google Cloud Language API client

- Create a document with content from the provided text

- Analyze entities in the document

- Return the entities found in the text

def analyze_entities(text):

credentials = service_account.Credentials.from_service_account_file("data.json")

client = language_v1.LanguageServiceClient(credentials=credentials)

document = language_v1.Document(content=text, type_=language_v1.Document.Type.PLAIN_TEXT)

response = client.analyze_entities(document=document)

return response.entities

The next step is writing the function that will handle the text replacements for the HTML output for the visualizer. Essentially, it will input the entity list from CNL API and the article text. Then we’ll match each entity back to the text and replace it with some HTML markup for the entity type prefix and color which is defined in the entity type color map. Feel free to alter the colors to a web-safe palette that works for you. Lighter colors work the best.

The function works by the following:

- Escape HTML special characters in text to prevent XSS attacks or unintended HTML rendering

- Define a color map for different types of entities recognized by the Google Cloud Language API

- Perform text replacements for the original text to add the colored labels via each entity type

- Feel free to edit the HTML markup to format the labels in a way that works for you

- Create regex pattern with word boundaries so replacements are done within other words

- Compile a single regex from all the patterns for efficiency

def visualize_entities(text, entities):

text_html = escape(text)

color_map = {

"UNKNOWN": "lightgray",

"PERSON": "lightcyan",

"LOCATION": "PaleGreen",

"ORGANIZATION": "AntiqueWhite",

"EVENT": "Thistle",

"WORK_OF_ART": "LavenderBlush",

"CONSUMER_GOOD": "LightSkyBlue",

"OTHER": "LightYellow",

"PHONE_NUMBER": "MediumSeaGreen",

"ADDRESS": "Salmon",

"DATE": "Honeydew",

"NUMBER": "PaleGoldenrod",

"PRICE": "MistyRose"

}

replacements = {}

for entity in entities:

entity_type = language_v1.Entity.Type(entity.type_).name

color = color_map.get(entity_type, "black")

escaped_entity_name = escape(entity.name)

replacement_html = f"<mark style='background-color: {color}; padding:4px; border-radius:4px;line-height:1.9;'><span style='font-size:8px;font-weight:bold;'>{entity_type}</span>: {escaped_entity_name}</mark>"

pattern = f'\\b{re.escape(escaped_entity_name)}\\b'

replacements[pattern] = replacement_html

regex_patterns = re.compile('|'.join(replacements.keys()), re.IGNORECASE)

Next, let’s build the function that takes the user-specified URL and scrapes the page for text. Note we don’t want to process all text on the page, just the main content of the article. For this, I search for the article HTML tag and take that content. If you don’t use the article tag, then you’ll need to adjust this function to either take the entire page text or find the CSS class that wraps your main content and change it to something like

article_div = soup.find('div', class_='article')

This function behaves as follows:

- Initiate the BeautifulSoup scraper

- Extract text content from the <article> tag

- Fix missing spaces after periods to improve text readability and entity separation

def get_text(url):

html = requests.get(url).text

soup = BeautifulSoup(html, 'html.parser')

article = soup.find('article')

if not article:

return html, "No article tag found in the HTML content."

cleaned_text = article.get_text()

cleaned_text = re.sub(r'\.\s*(?=[A-Za-z])', '. ', cleaned_text)

return html, cleaned_text

Last but not least, we write the part that starts everything off. The function works as follows:

- Input a URL of your choosing

- Send the URL to the function that scrapes the text and returns the HTML and cleaned text from that URL

- Send the cleaned text to the function that processes the text for entities using CNL

- Send the entities and cleaned text to the function that performs the text replacements to build the named entity visualizer

- Use the display and HTML functions to render the new marked-up text with the entity labels.

url = "https://www.physicsforums.com/insights/why-chatgpt-is-not-reliable/" html, clean_text = get_text(url) entities = analyze_entities(clean_text) html_output = visualize_entities(clean_text, entities) display(HTML(html_output))

Example

The output from the above URL is the same as the image I showed in the intro.

Conclusion

So now you have a framework for analyzing and visualizing your named entity in articles using the Cloud Natural Language API. Remember to try and make my code even more efficient and extend it in ways I never thought of! This was just the beginning of what you can start doing. Hopefully, you’re now quick to hop on the semantic SEO bandwagon. Note there are still a few small bugs in the script that require a bit more ironing:

- Preserve original spacing in the article

- Refine methods to make sure some text doesn’t mash together when the text is stitched back together

- The formatting of the output is not perfect, but I’m also not a designer

Now get out there and try it out! Follow me on Twitter and let me know your Python SEO applications and ideas!

Named Entity Recognition FAQ

What are some common challenges in NER?

Common challenges include handling ambiguity (e.g., “Apple” as a fruit vs. the company), dealing with entities not seen during training (out-of-vocabulary issues), and managing variations in entity names (e.g., “U.S.A.” vs. “United States”). Additionally, NER systems must cope with different languages, text styles, and domains.

What datasets are commonly used for training NER models?

Some well-known datasets for NER include the CoNLL-2003 dataset, the OntoNotes dataset, and the ACE (Automatic Content Extraction) corpus. These datasets provide annotated text that includes named entities and their categories.

How do you evaluate the performance of an NER system?

The performance of an NER system is typically evaluated using precision, recall, and F1-score. Precision measures the accuracy of the named entities identified, recall measures the system’s ability to find all relevant named entities, and the F1-score is the harmonic mean of precision and recall, providing a single measure of overall performance.

What are some applications of NER?

Applications of NER include:

- Information Retrieval: Enhancing search engines by indexing named entities for better search results.

- Content Recommendation: Personalizing content based on recognized entities in user interests.

- Customer Support: Automatically categorizing and routing customer inquiries based on detected entities.

- Medical Text Analysis: Identifying medical terms, drugs, and conditions in clinical records.

- Financial Analysis: Extracting company names, stock tickers, and financial events from news articles.

What tools and libraries are available for NER?

Several tools and libraries are available for NER, including:

- SpaCy: An open-source library with pre-trained NER models.

- NLTK (Natural Language Toolkit): Provides various tools for NER, including integration with the Stanford NER tagger.

- Stanford NER: A Java-based library offering state-of-the-art NER models.

- OpenNLP: An Apache project that includes tools for NER.

- AllenNLP: A library built on PyTorch for deep learning-based NLP tasks, including NER.

Can NER be used for languages other than English?

Yes, NER can be applied to various languages. However, the availability of annotated datasets and pre-trained models for different languages can vary. Languages with rich morphology, such as Arabic or Russian, may pose additional challenges for NER systems.

What are the latest advancements in NER?

Recent advancements in NER include the use of transformer-based models like BERT, RoBERTa, and GPT. These models leverage large-scale pre-training on diverse text corpora, enabling better generalization and improved performance on NER tasks. Additionally, transfer learning and multilingual models have also shown promise in enhancing NER capabilities across different languages and domains.

This FAQ section was written with help using GenAI

- Evaluate Subreddit Posts in Bulk Using GPT4 Prompting - December 12, 2024

- Calculate Similarity Between Article Elements Using spaCy - November 13, 2024

- Audit URLs for SEO Using ahrefs Backlink API Data - November 11, 2024