In this tutorial, I’m going to show, using Python, how to generate broad keyword categories using current ranking keyword data and then auto label those keywords with the categories. This is useful in getting a broad overview of what topics you’re ranking the most for and what potential search volumes ceilings are for each category. The biggest opportunities could be in those categories with the largest total volume ceilings. The potential search volumes show estimates of what you could expect if you ranked #1 for every keyword and every user clicked your link. Obviously, not realistic, but the upper bound data is worthwhile.

Table of Contents

Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab.

- Keyword Data with search volume (Semrush organic research export)

Import Modules and Data Table Extension

Let’s start by importing the pandas module and the data table Google Colab extension (for easier dataframe viewing).

import pandas as pd %load_ext google.colab.data_table

Now we load the CSV keyword data into two different dataframes. I’m confident there is a clear way to do everything we need using one dataframe, but this was easiest for me. In the first dataframe we just need the Keyword column in order to generate the keyword counts we’ll use to auto label them into categories.

df = pd.read_csv("rckey.csv")['Keyword']

df2 = pd.read_csv("rckey.csv")

With the keywords data loaded in both dataframe we’ll start with the first one to generate the keyword categories. The Keyword column contains mostly phrases and to evaluate the word for word we split() the phrases by whitespace in a list, use explode() to create new rows from the words in that list, count the unique words in that column and reset the dataframe index.

df = df.str.split().explode().value_counts().reset_index()

The column likely contains a lot of stop words, so let’s filter those out. Add words to the list as needed.

stop = ['1','2','3','4','5','6','7','8','9','get','ourselves', 'hers','us','there','you','for','that','as','between', 'yourself', 'but', 'again', 'there', 'about', 'once', 'during', 'out', 'very', 'having', 'with', 'they', 'own', 'an', 'be', 'some', 'for', 'do', 'its', 'yours', 'such', 'into', 'of', 'most', 'itself', 'other', 'off', 'is', 's', 'am', 'or', 'who', 'as', 'from', 'him', 'each', 'the', 'themselves', 'until', 'below', 'are', 'we', 'these', 'your', 'his', 'through', 'don', 'nor', 'me', 'were', 'her', 'more', 'himself', 'this', 'down', 'should', 'our', 'their', 'while', 'above', 'both', 'up', 'to', 'ours', 'had', 'she', 'all', 'no', 'when', 'at', 'any', 'before', 'them', 'same', 'and', 'been', 'have', 'in', 'will', 'on', 'does', 'yourselves', 'then', 'that', 'because', 'what', 'over', 'why', 'so', 'can', 'did', 'not', 'now', 'under', 'he', 'you', 'herself', 'has', 'just', 'where', 'too', 'only', 'myself', 'which', 'those', 'i', 'after', 'few', 'whom', 't', 'being', 'if', 'theirs', 'my', 'against', 'a', 'by', 'doing', 'it', 'how', 'further', 'was', 'here', 'than'] df = df[~df['index'].isin(stop)].reset_index()

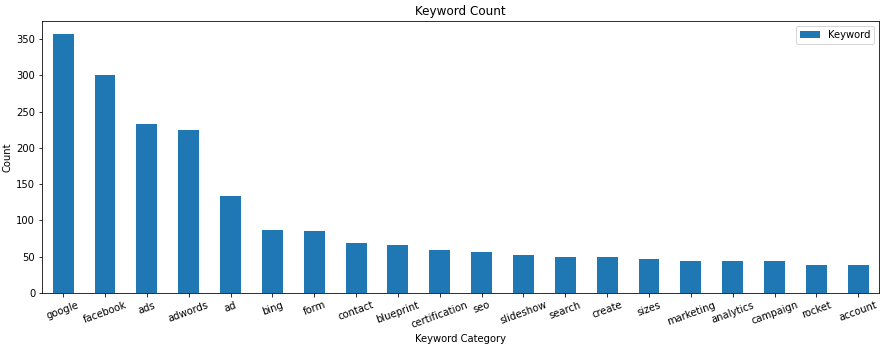

Next we take the top 20 keyword categories and put them in a list. You can alter this or leave it out if you’re saving to a spreadsheet, but for charting 20 is a good number.

topkeywords = df['index'][:20].to_list() print(topkeywords)

![]()

Let’s make a quick chart of the counts for each keyword category

df[:20].plot.bar(y='Keyword', x='index', figsize=(15,5), title="Volume", rot=20)

Next, it’s time to start labeling our keywords with the categories so we can sum up the search volumes. We will be using the second dataframe. We use the function extract() which searches the keyword column for a match using regex and if there is a match, put it in the newly made Marked column. We build our regex using the categories we made from the first dataframe. The variable regex is will end up looking like “word1|word2|word3|word4|word5…” when using join(). For those without a match, we will drop those rows using dropna().

regex = '(' + ('|'.join(topkeywords)) + ')'

df2['Marked'] = df2.Keyword.str.extract(regex)

df2 = df2.dropna()

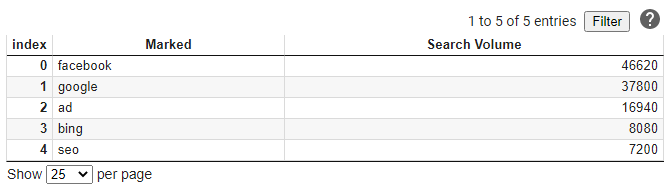

Now let’s group all the same keyword categories together, sum their search volumes, and sort by search volume descending.

df2 = df2.groupby(['Marked'])['Search Volume'].sum().to_frame().sort_values('Search Volume', ascending=False).reset_index()

df2.head()

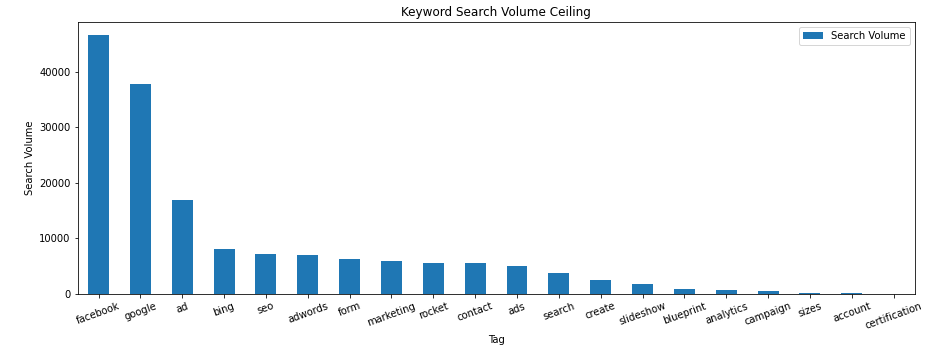

Lately we plot these columns in a graph and can see where our potential volumes for the keywords we rank for.

df2.plot.bar(y='Search Volume', x='Marked', figsize=(15,5), title="Keyword Search Volume Ceiling", xlabel="Tag", ylabel="Search Volume", rot=20)

Conclusion

Now with this framework, you can generate these keyword categories and summed search volumes for any website to mine opportunities. Next tutorial I’ll show how to do this with landing page data and sessions! Now get out there and try it out! Follow me on Twitter and let me know your applications and ideas!

- Build a Custom Named Entity Visualizer with Google NLP - June 19, 2024

- Storing CrUX CWV Data for URLs Using Python for SEOs - January 20, 2024

- Scraping YouTube Video Page Metadata with Python for SEO - January 4, 2024