Calculate Similarity Between Article Elements Using spaCy

In this Python SEO tutorial, we’ll walk through a script that uses spaCy to calculate similarity metrics between content keywords and the body of an article. This analysis can help SEOs and content creators assess content relevance and keyword alignment.

Using Natural Language Processing (NLP), we’ll compute similarity scores to gauge how well keywords match the main content. The script uses multiprocessing to speed up the analysis of large datasets.


Requirements and Assumptions

- Python 3 with spaCy installed: You’ll need spaCy’s en_core_web_md language model.
- CSV file of content data: A CSV file (blog_data.csv) with columns body_text, primary_keyword, and topic_clusters to analyze.
  - This CSV should contain the URL, title, primary keyword, and primary topic cluster.
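Before running the analysis, it can help to confirm that the file actually has the columns the script reads. The following is a minimal sketch using only the standard library; the helper name missing_columns and the inline sample data are illustrative assumptions, not part of the original script.

```python
import csv
import io

# Columns the tutorial's script reads from each row.
REQUIRED_COLUMNS = ("body_text", "primary_keyword", "topic_clusters")


def missing_columns(csv_file, required=REQUIRED_COLUMNS):
    """Return the required column names that are absent from the CSV header.

    csv_file is any open text file object (hypothetical helper).
    """
    reader = csv.DictReader(csv_file)
    header = reader.fieldnames or []
    return [name for name in required if name not in header]


# Inline sample standing in for blog_data.csv (made-up data).
sample = io.StringIO(
    "url,title,body_text,primary_keyword\n"
    "https://example.com/a,Post A,Some article text.,python seo\n"
)
print(missing_columns(sample))  # ['topic_clusters']
```

With a real file, the same helper could be called inside a with open("blog_data.csv", newline="", encoding="utf-8") block; an empty list means all three columns are present.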

Step 1: Install the spaCy Language Model

First, download the spaCy language model en_core_web_md, which provides the word vectors used to calculate similarities.

!python -m spacy download en_core_web_md > /dev/null
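By default, spaCy's similarity method is the cosine similarity of averaged word vectors. As a rough illustration of what that score measures, here is a plain-Python sketch with made-up three-dimensional vectors (the real en_core_web_md vectors have 300 dimensions). The function name cosine_similarity is an assumption for illustration, not a spaCy API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # An all-zero vector has no direction; treat the score as 0.0.
        return 0.0
    return dot / (norm_a * norm_b)


# Toy vectors (not real word vectors).
keyword_vec = [1.0, 2.0, 0.0]
same_direction = [2.0, 4.0, 0.0]
orthogonal = [0.0, 0.0, 3.0]

print(round(cosine_similarity(keyword_vec, same_direction), 3))  # 1.0
print(round(cosine_similarity(keyword_vec, orthogonal), 3))      # 0.0
```

Vectors pointing the same way score near 1.0 regardless of length, which is why a short keyword can still be compared with a long sentence.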

Step 2: Import Libraries

Import the necessary libraries: spaCy for NLP, pandas for data handling, statistics.mean for averaging scores, and concurrent.futures for multiprocessing.

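import pandas as pd
from statistics import mean
from concurrent.futures import ProcessPoolExecutor
import spacy

# The BigQuery imports are not used in the steps below; keep them only if
# you load data from BigQuery instead of a CSV file.
from google.cloud import bigquery
import google.auth

# Load the spaCy model
nlp = spacy.load("en_core_web_md")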

Step 3: Define the Similarity Calculation Function

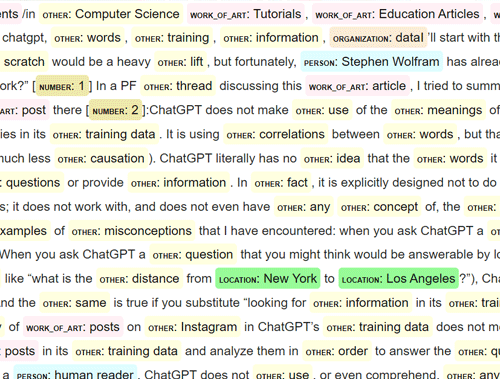

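The main function, get_sim, computes the similarity between the article body and a keyword using sentence-level, noun-phrase-level, or entity-level comparisons. It scores each sentence of the body separately and returns the mean of those scores rounded to three decimals, or 0.0 if nothing could be scored.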

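def get_sim(body_text, compare, istype):
    """Return the mean similarity between body_text and compare.

    istype selects the unit compared per sentence: "sent" (whole sentence),
    "np" (noun phrases), or "ent" (named entities).
    """
    sim_set = []
    body_text_vec = nlp(body_text)
    compare_vec = nlp(compare)

    # Nothing to compare against if the comparison text has no vector
    if not compare_vec.has_vector:
        return 0.0

    if istype == "sent":
        # Compare each sentence directly with the comparison text
        for sent in body_text_vec.sents:
            if sent.has_vector:
                sim_set.append(sent.similarity(compare_vec))
    elif istype == "np":
        # Compare only the noun phrases found in each sentence
        for sent in body_text_vec.sents:
            nchunks = [nchunk.text for nchunk in sent.noun_chunks]
            nchunk_utt = nlp(" ".join(nchunks))
            if nchunk_utt.has_vector:
                sim_set.append(nchunk_utt.similarity(compare_vec))
    elif istype == "ent":
        # Compare only the named entities found in each sentence
        for sent in body_text_vec.sents:
            ent = [ent.text for ent in sent.ents]
            ent_utt = nlp(" ".join(ent))
            if ent_utt.has_vector:
                sim_set.append(ent_utt.similarity(compare_vec))

    # Average the per-sentence scores, or fall back to 0.0
    if sim_set:
        sim_mean = round(mean(sim_set), 3)
    else:
        sim_mean = 0.0
    return sim_mean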
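To make the aggregation step concrete, the sketch below isolates how get_sim turns a list of per-sentence scores into one number: the mean rounded to three decimals, or 0.0 when nothing could be scored. The helper name summarize_scores and the sample scores are made up for illustration.

```python
from statistics import mean


def summarize_scores(scores):
    """Mirror get_sim's final step: rounded mean, or 0.0 for an empty list."""
    if scores:
        return round(mean(scores), 3)
    return 0.0


# Hypothetical per-sentence similarity scores for one article.
per_sentence = [0.82, 0.31, 0.45, 0.28]

print(summarize_scores(per_sentence))  # 0.465
print(summarize_scores([]))            # 0.0
```

Because the mean weights every sentence equally, a single highly relevant sentence in a long article is diluted by the rest, which is worth keeping in mind when reading the scores.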

Step 4: Process Each Row of Content Data

The process_row function applies get_sim to compute several similarity metrics for each row of content data. We calculate similarity scores between:

- body_text and primary_keyword
- body_text and topic_clusters
- primary_keyword and topic_clusters

def process_row(row):
    body_text = row["body_text"]
    primary_keyword = row["primary_keyword"]
    topic_clusters = row["topic_clusters"]

    # Body text vs. primary keyword
    bt_kw_sent_sim = get_sim(body_text, primary_keyword, "sent")
    bt_kw_np_sim = get_sim(body_text, primary_keyword, "np")
    bt_kw_ent_sim = get_sim(body_text, primary_keyword, "ent")

    # Body text vs. topic clusters
    bt_tc_sent_sim = get_sim(body_text, topic_clusters, "sent")
    bt_tc_np_sim = get_sim(body_text, topic_clusters, "np")
    bt_tc_ent_sim = get_sim(body_text, topic_clusters, "ent")

    # Primary keyword vs. topic clusters
    tc_kw_sim = get_sim(primary_keyword, topic_clusters, "sent")

    return (bt_kw_sent_sim, bt_kw_np_sim, bt_kw_ent_sim, bt_tc_sent_sim, bt_tc_np_sim, bt_tc_ent_sim, tc_kw_sim)
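One practical caveat: pandas reads empty CSV cells as NaN (a float), and passing a non-string to nlp raises an error. Below is a minimal sketch of a guard that could be applied to each field before calling get_sim, assuming blank cells should be treated as empty text. The helper clean_text is hypothetical and not part of the original script.

```python
def clean_text(value):
    """Return a stripped string, or "" for None, NaN, and other non-strings."""
    if isinstance(value, str):
        return value.strip()
    return ""


# A row as it might come from DataFrame.to_dict("records"), with a blank cell.
row = {
    "body_text": "  An article about technical SEO.  ",
    "primary_keyword": float("nan"),  # how pandas represents an empty cell
    "topic_clusters": "seo",
}

cleaned = {key: clean_text(value) for key, value in row.items()}
print(cleaned)
# {'body_text': 'An article about technical SEO.', 'primary_keyword': '', 'topic_clusters': 'seo'}
```

Rows whose keyword or body comes back empty could then be skipped or assigned 0.0 by the caller, depending on how you want missing data to show up in the results.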

Step 5: Load Content Data from CSV

Load the content data from a CSV file with Pandas.

qr_article_body_content = pd.read_csv("blog_data.csv")

Step 6: Apply Multi-Processing for Efficiency

The script uses ProcessPoolExecutor to handle large datasets efficiently by processing rows in parallel.

with ProcessPoolExecutor() as executor:
    results = list(executor.map(process_row, qr_article_body_content.to_dict('records')))
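When this step runs as a standalone script rather than in a notebook, platforms that start workers with the spawn method (Windows and macOS by default) re-import the main module in each worker, so the pool should be created under an if __name__ == "__main__": guard. The sketch below shows that pattern with a stand-in worker; score_row, score_all, and the sample rows are hypothetical and only mimic the shape of process_row.

```python
from concurrent.futures import ProcessPoolExecutor


def score_row(row):
    """Stand-in for process_row: returns a tuple of made-up 'scores'."""
    # Defined at module level so worker processes can locate it.
    body_len = len(row["body_text"].split())
    kw_len = len(row["primary_keyword"].split())
    return (body_len, kw_len)


def score_all(rows, max_workers=2, chunksize=1):
    """Map score_row over rows in parallel, preserving input order."""
    with ProcessPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(score_row, rows, chunksize=chunksize))


if __name__ == "__main__":
    rows = [
        {"body_text": "one two three", "primary_keyword": "seo"},
        {"body_text": "four five", "primary_keyword": "python seo"},
    ]
    print(score_all(rows))  # [(3, 1), (2, 2)]
```

For many short rows, a larger chunksize can reduce inter-process overhead. Each worker may also hold its own copy of the spaCy model in memory, so capping max_workers is worth considering on smaller machines.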

Step 7: Store and Organize Results

Store the results back in the original DataFrame for easy analysis and export.

(
    qr_article_body_content["bt_kw_sent_sim"],
    qr_article_body_content["bt_kw_np_sim"],
    qr_article_body_content["bt_kw_ent_sim"],
    qr_article_body_content["bt_tc_sent_sim"],
    qr_article_body_content["bt_tc_np_sim"],
    qr_article_body_content["bt_tc_ent_sim"],
    qr_article_body_content["tc_kw_sim"],
) = zip(*results)

# Optional: Drop the original body_text column to reduce data size
qr_article_body_content.drop(columns=["body_text"], inplace=True)
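The zip(*results) call transposes the list of per-row tuples into one sequence per metric, which is what lets a single assignment fill all seven columns. Here is a small plain-Python sketch with made-up numbers and only three of the columns:

```python
# Made-up results for three rows; each tuple is one row's scores.
results = [
    (0.61, 0.58, 0.12),
    (0.47, 0.52, 0.00),
    (0.73, 0.69, 0.25),
]

column_names = ["bt_kw_sent_sim", "bt_kw_np_sim", "bt_kw_ent_sim"]

# zip(*results) yields one tuple per column instead of one per row.
columns = dict(zip(column_names, zip(*results)))

for name, values in columns.items():
    print(name, values)
# bt_kw_sent_sim (0.61, 0.47, 0.73)
# bt_kw_np_sim (0.58, 0.52, 0.69)
# bt_kw_ent_sim (0.12, 0.0, 0.25)
```

Note that if results is empty, zip(*results) produces nothing and the seven-way unpacking raises a ValueError, so an empty input file is worth checking for beforehand.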

Step 8: Review Results

Now, the DataFrame qr_article_body_content contains new columns for each similarity score, allowing easy analysis.

Example Output

After running the script, you will have a DataFrame that includes the following:

- bt_kw_sent_sim: Similarity between body_text and primary_keyword at the sentence level.
- bt_kw_np_sim: Similarity based on noun phrases.
- bt_kw_ent_sim: Similarity based on named entities.
- bt_tc_sent_sim: Similarity between body_text and topic_clusters at the sentence level.
- bt_tc_np_sim: Similarity based on noun phrases.
- bt_tc_ent_sim: Similarity based on named entities.
- tc_kw_sim: Sentence-level similarity between primary_keyword and topic_clusters.
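One way to use these columns is to surface articles whose body scores poorly against the primary keyword. The sketch below works on plain dictionaries so it runs without pandas; the URLs, scores, and the 0.5 cutoff are made-up assumptions, and a sensible threshold would need to be chosen from your own data.

```python
def flag_low_alignment(rows, column="bt_kw_sent_sim", threshold=0.5):
    """Return rows scoring below threshold, lowest score first."""
    low = [row for row in rows if row[column] < threshold]
    return sorted(low, key=lambda row: row[column])


# Hypothetical output rows (values are illustrative only).
scored_rows = [
    {"url": "https://example.com/a", "bt_kw_sent_sim": 0.62},
    {"url": "https://example.com/b", "bt_kw_sent_sim": 0.31},
    {"url": "https://example.com/c", "bt_kw_sent_sim": 0.44},
]

for row in flag_low_alignment(scored_rows):
    print(row["url"], row["bt_kw_sent_sim"])
# https://example.com/b 0.31
# https://example.com/c 0.44
```

On the DataFrame itself, a comparable filter would be a boolean mask on the column followed by sort_values on the same column.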

Conclusion

This Python script provides a framework for analyzing content relevance and keyword alignment using spaCy’s vector similarity. It can help SEO professionals and content analysts check how closely an article’s body matches its primary keyword and topic cluster.

Feel free to expand the functionality by exploring different NLP features or refining the similarity calculations to better suit your SEO goals.

Follow me at: https://www.linkedin.com/in/gregbernhardt/


- Calculate Similarity Between Article Elements Using spaCy - November 13, 2024
