Google Search Console is likely the most important source of data for an SEO. However, like most GUI platforms, it suffers from one large downside: you’re stuck in a GUI that only gives you 16 months of data. You can manually export data to a Google Sheet, and that works fine, but what if you need to export every day?

What if you need to integrate with other tools? That becomes a large problem unless you spend lots of money on third-party integrations. What if there were a dead-simple way to move GSC data via the API into a data warehouse like BigQuery? You’re in luck! There is!

In this tutorial, I’ll show you how to tap into Google Search Console via its API, grab your performance data by date, and then permanently store it in BigQuery. Then you can use that data in any way you can imagine! Lastly, I’ll point you to another tutorial that automates this process, storing daily data and building a repository that extends past the 16-month GSC GUI limit.


Requirements and Assumptions

- Python 3 is installed and basic Python syntax understood

- Access to a Linux installation (I recommend Ubuntu) or Google Colab.

- Google Search Console performance data exists for the property (URL) you want to export

- Google Search Console API enabled and OAuth client credentials created (download the client secrets JSON file)

- Very basic BigQuery experience. If you have none, watch this Measure School video on it.

Let’s first take a few seconds to cover BigQuery and how to set up a new table ready to accept the GSC data.

BigQuery Setup

Google BigQuery is a data warehouse within Google Cloud Platform that feels like a relational database, but it is not one. It stores data in tables that you can query with SQL, but that is about where the similarities end. The advantage of BigQuery is that it’s dead simple to set up. BigQuery’s free tier includes 10 GB of storage per month, which, it turns out, is actually quite a bit of data!

- Create a new Google Cloud Platform project or open an existing project

- Enable BigQuery API and create service account credentials (download JSON file)

- Create a new dataset in BigQuery

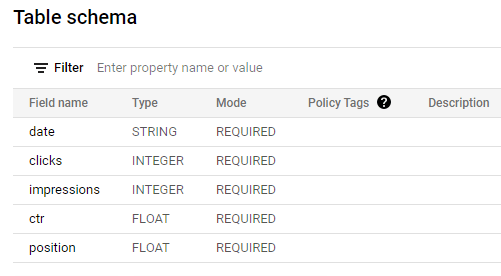

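- Create a new table with the following schema: date (STRING), clicks (INTEGER), impressions (INTEGER), ctr (FLOAT), and position (FLOAT), all set to REQUIRED. I set date as a string because I ran into some weird typing issues with the DATE and DATETIME types; feel free to experiment with those.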

Install and Import Python Modules

- os: for setting the environment variable for BigQuery API

- bigquery: to communicate with the BigQuery API

- pandas: to accept the GSC data

- searchconsole: wrapper by Josh Carty to connect to the Google Search Console API. I would recommend reading the documentation for this dead simple wrapper.

Let’s start the script by installing the bigquery and searchconsole modules into your environment. If you are in a notebook, remember to prefix each command with an exclamation point (for example, !pip3 install searchconsole).

pip3 install searchconsole

pip3 install google-cloud-bigquery

Now let’s get to the script and import the above modules!

import os
from google.cloud import bigquery
import pandas as pd
import searchconsole

The first thing we’ll want to do is set up the credentials for BigQuery, which is easy using an environment variable. It should contain the path to the service account JSON credentials file you set up for the BigQuery API. Next, the URL variable should contain the exact property URL you want to grab GSC data for, including the trailing slash, or it likely won’t work. Lastly, we set up the GSC API credentials: the client_config path should point to the client secrets file you set up for the Search Console API. The first time you run this script, it will trigger an OAuth process. On later runs you can skip that step by adding a “credentials=” parameter pointing to the saved credentials JSON file.

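# Path to the service account JSON key for the BigQuery API
os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = ""
# Exact Search Console property URL, including the trailing slash
URL = ""
# Path to the OAuth client secrets JSON for the Search Console API
account = searchconsole.authenticate(client_config='')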

Next, we select the Search Console property from the authenticated account using the URL.

webproperty = account[URL]

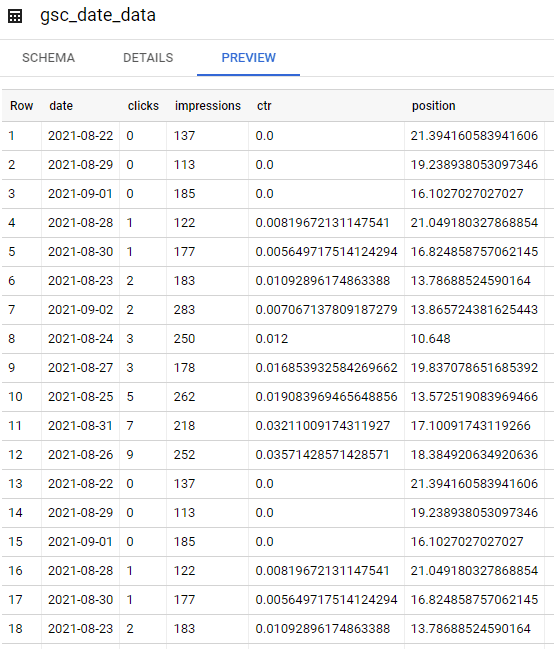

Next, we define the date range we want returned. In this script, we’re selecting the past 14 days from today’s date. The dimension we’re using is date, but you can use query, page, etc., in other scripts. Then we run the query and send the returned data straight into a pandas dataframe. Easy!

report = webproperty.query.range('today', days=-14).dimension('date').get()

report_df = report.to_dataframe()
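The Search Console API reports clicks and impressions as numbers that typically arrive in pandas as floats, which may not load cleanly into INTEGER columns. A minimal clean-up sketch, assuming `report_df` has the date, clicks, impressions, ctr, and position columns the schema expects; `normalize_gsc_frame` is a hypothetical helper, not part of the searchconsole wrapper.

```python
import pandas as pd

# Column order expected by the BigQuery table in this tutorial.
GSC_COLUMNS = ["date", "clicks", "impressions", "ctr", "position"]


def normalize_gsc_frame(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df whose columns and dtypes match the BigQuery schema."""
    # Keep only the schema columns, in schema order, and drop incomplete rows,
    # since every column in the table is REQUIRED.
    out = df[GSC_COLUMNS].dropna().copy()
    # Store dates as YYYY-MM-DD strings to match the STRING date column.
    out["date"] = pd.to_datetime(out["date"]).dt.strftime("%Y-%m-%d")
    # Clicks and impressions are whole numbers; cast them for the INTEGER columns.
    for col in ("clicks", "impressions"):
        out[col] = out[col].round().astype("int64")
    for col in ("ctr", "position"):
        out[col] = out[col].astype("float64")
    return out.reset_index(drop=True)
```

Usage: `report_df = normalize_gsc_frame(report_df)` right after building the dataframe.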

Now that we have our GSC data, we can initialize the BigQuery client and define the destination of the data in table_id in the form PROJECT.DATASET.TABLE (for example, my destination for this script is “rc-monitor-281402.gsc_test.gsc_date_data”).

client = bigquery.Client()
table_id = ""

To make sure the data types from GSC are what we expect, let’s define a schema for the load job that matches the schema we laid out in BigQuery, before we load anything into the table. Notice that we name each column, set its type, and mark it as a required field.

job_config = bigquery.LoadJobConfig(
    schema=[
        bigquery.SchemaField("date", bigquery.enums.SqlTypeNames.STRING, mode="REQUIRED"),
        bigquery.SchemaField("clicks", bigquery.enums.SqlTypeNames.INTEGER, mode="REQUIRED"),
        bigquery.SchemaField("impressions", bigquery.enums.SqlTypeNames.INTEGER, mode="REQUIRED"),
        bigquery.SchemaField("ctr", bigquery.enums.SqlTypeNames.FLOAT, mode="REQUIRED"),
        bigquery.SchemaField("position", bigquery.enums.SqlTypeNames.FLOAT, mode="REQUIRED"),
    ]
)

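Now all that is left is to pass the GSC dataframe, the table ID, and the job config to the main BigQuery load function, load_table_from_dataframe(). Note that this function appends data to the table by default; overwriting is also possible, as described in the BigQuery documentation. Calling job.result() waits for the load job to finish. Easy!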

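# Load the GSC dataframe (report_df from above) into the destination table
job = client.load_table_from_dataframe(
    report_df, table_id, job_config=job_config
)
# Block until the load job completes; raises an error if the load failed
job.result()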

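An optional last step for logging and debugging is to print a summary of the result and make sure it looks as expected. Note that table.num_rows reports the total number of rows in the table, not just the rows added by this load.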

table = client.get_table(table_id)
print(
    "Loaded {} rows and {} columns to {}".format(
        table.num_rows, len(table.schema), table_id
    )
)

It prints a single line in the form “Loaded <number of rows> rows and 5 columns to <your table ID>”.
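Because table.num_rows counts every row in the table, the printed total grows with each run. If you also want the number of rows added by this particular load, a finished load job exposes output_rows. A small sketch; `describe_load` is a hypothetical helper.

```python
def describe_load(job, table, table_id):
    """Summarize a finished load job next to the table's running total."""
    # job.output_rows: rows written by this load job (available once it finishes).
    # table.num_rows: total rows now stored in the table.
    return "Added {} rows to {} ({} rows total, {} columns)".format(
        job.output_rows, table_id, table.num_rows, len(table.schema)
    )
```

Usage after `job.result()`: `print(describe_load(job, client.get_table(table_id), table_id))`.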

Lastly, if you want to automate this process to grab data every day, see the follow-up tutorial on automation (before automating, change how many days’ worth of data you grab to -1, or you’ll get duplicate data). The automation uses some fairly easy setup of Google Cloud Functions and Google Cloud Scheduler. You’ll also need a way to query the data; if you are unfamiliar with SQL, now is the time to start learning some SQL basics.
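Switching to -1 reduces overlap, but a retried run or an overlapping date window can still insert the same date twice. One way to guard against that, sketched here as an assumption rather than part of the original workflow, is to look up the dates already stored and drop them from the new dataframe before loading. `fetch_existing_dates` and `drop_existing_dates` are hypothetical helpers, and the table must already exist for the query to succeed.

```python
import pandas as pd


def fetch_existing_dates(client, table_id):
    """Return the set of date strings already stored in the BigQuery table."""
    # table_id is PROJECT.DATASET.TABLE; backticks quote it for Standard SQL.
    sql = f"SELECT DISTINCT date FROM `{table_id}`"
    return {row["date"] for row in client.query(sql).result()}


def drop_existing_dates(df: pd.DataFrame, existing_dates) -> pd.DataFrame:
    """Keep only rows whose date is not already in the table."""
    return df[~df["date"].astype(str).isin(existing_dates)].reset_index(drop=True)
```

Usage before the load: `report_df = drop_existing_dates(report_df, fetch_existing_dates(client, table_id))`, skipping the load entirely if the result is empty.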

Output and Conclusion

Python BigQuery FAQ

How can Python be employed to create a Google Search Console (GSC) to BigQuery pipeline?

Python scripts can be crafted to extract data from GSC and load it into BigQuery, establishing a pipeline for seamless data transfer and analysis.

Which Python libraries are commonly used for creating a GSC to BigQuery pipeline?

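This tutorial uses the searchconsole wrapper to call the Search Console API, google-cloud-bigquery to load data into BigQuery, and pandas for data manipulation. Lower-level libraries such as google-auth and google-api-python-client are common alternatives for the Google API interactions.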

What specific steps are involved in using Python to create a GSC to BigQuery pipeline?

The process includes setting up authentication, fetching GSC data using the GSC API, transforming the data, and loading it into BigQuery tables using Python scripts.

Are there any considerations or limitations when using Python for this pipeline creation?

Consider the authentication setup, data transformation requirements, and potential variations in API responses. Regular monitoring and maintenance are essential for a robust pipeline.

Where can I find examples and documentation for creating a GSC to BigQuery pipeline with Python?

Explore online tutorials, documentation for relevant Python libraries, and resources specific to Google Search Console API and BigQuery for practical examples and detailed guides on building a GSC to BigQuery pipeline using Python.
